[TOC]

## 概述

* 基于哈希表的实现的Map接口

* 无序

* 允许一个null键

* HashMap不是线程同步的。在多线程环境下使用HashMap,并且有写操作的话,必须要自己实现线程之间的同步,或者使用其他同步容器。

* iterator方法返回的迭代器是快速失败的。在创建迭代器之后的任何修改,除非是通过迭代器自身的remove方法对列表进行修改,否则迭代器都会抛出ConcurrentModificationException。

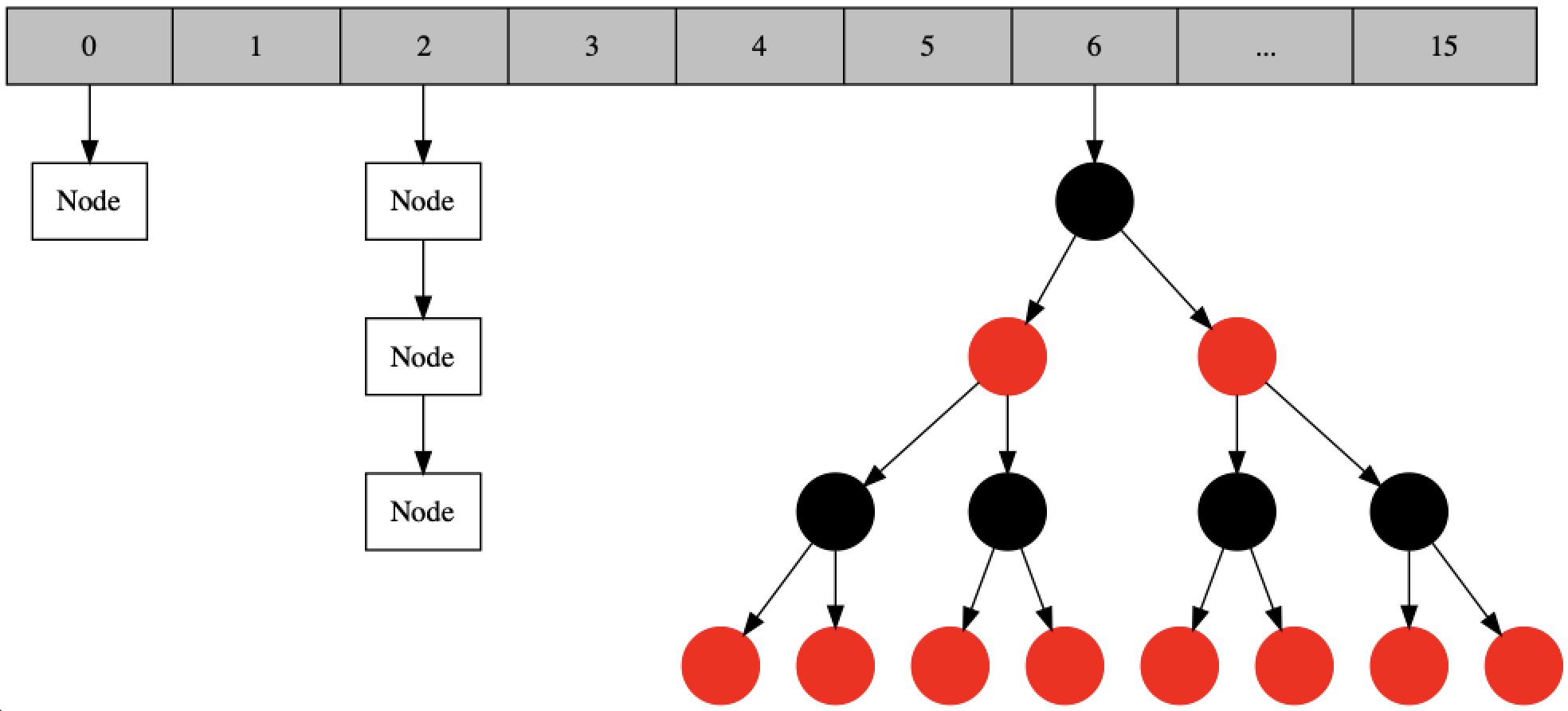

## HashMap的数据结构

jdk8中HashMap的实现是 数组 + 链表 + 红黑树。

1. 新key-value进来时,首先按key的hash值计算数组中存放的下标,并放入数组中;

2. 如果hash冲突,那么多个节点组成链表。

3. 当链表长度过长时,会转成红黑树。

### 链表Node

~~~

static class Node<K,V> implements Map.Entry<K,V> {

final int hash; // key的hash值

final K key;

V value;

Node<K,V> next; // 下一个节点

~~~

### 红黑树TreeNode

这里不展开讲红黑树了,有兴趣的可以看【数据结构-红黑树详解】。

~~~

static final class TreeNode<K,V> extends LinkedHashMap.Entry<K,V> {

TreeNode<K,V> parent; // 父节点

TreeNode<K,V> left; // 左子节点

TreeNode<K,V> right; // 右子节点

TreeNode<K,V> prev; // 前置节点,删除节点的时候解除链接

boolean red; // 节点颜色,红黑

~~~

## HashMap的实现

### 类定义

~~~

public class HashMap<K,V> extends AbstractMap<K,V>

implements Map<K,V>, Cloneable, Serializable {

~~~

### 默认配置

~~~

// Node数组的默认初始容量16

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4;

// Node数组的最大容量

static final int MAXIMUM_CAPACITY = 1 << 30;

// 默认加载因子0.75

static final float DEFAULT_LOAD_FACTOR = 0.75f;

// 当链表长度大于此值时,转换为红黑树

static final int TREEIFY_THRESHOLD = 8;

static final int UNTREEIFY_THRESHOLD = 6;

// Node数组大小超过64才使用红黑树

static final int MIN_TREEIFY_CAPACITY = 64;

~~~

### 关键属性

~~~

// 哈希表

transient Node<K,V>[] table;

// MapEntry集合

transient Set<Map.Entry<K,V>> entrySet;

// 键值对数量

transient int size;

// HashMap修改次数,用于判断迭代器使用过程中是否快速失败。

transient int modCount;

// 数组大小 * 加载因子,对于给定初始容量或已知key大小的情况,第一次赋值是数组大小,后续变成数组大小 * 加载因子

int threshold;

// 加载因子

final float loadFactor;

~~~

### 构造函数

~~~

// 默认构造器,加载因子=0.75

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR;

}

// 构造一个指定初始容量(真实容量会扩展到2^n次方)和加载因子的HashMap实例

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

this.threshold = tableSizeFor(initialCapacity);

}

// 将cap增加到2^m次方(不写n次方是为了与下面的变量n区分)

static final int tableSizeFor(int cap) {

int n = cap - 1; // 减1是针对cap=2^m的情况,最后的计算结果还是cap原值,不会增大

// 下面的操作是对n的所有二进制位都置为1,n = 2^m - 1。

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1; // n + 1 = 2^m

}

// 构造一个指定初始容量(真实容量会扩展到2^n次方)的HashMap实例

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

// 用已有Map实例构造一个HashMap实例,导入所有键值对

public HashMap(Map<? extends K, ? extends V> m) {

this.loadFactor = DEFAULT_LOAD_FACTOR;

putMapEntries(m, false); // 把m的所有键值对导入新HashMap中,具体实现放到后面讲

}

~~~

### 关键操作

#### 添加key-value

~~~

// 新增键值对,若已存在则覆盖。

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

final V putVal(int hash, K key, V value, boolean onlyIfAbsent, boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

// 如果数组为空,先扩容,resize后面讲

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

// 计算key在数组中的存放位置i,i跟数组大小和 key 的 hash 值有关

// 如果i位置上还没有数据,就把key和value存放到这个位置上。

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

else {

Node<K,V> e; K k;

if (p.hash == hash && ((k = p.key) == key || (key != null && key.equals(k)))) // key已存在,并且是链表头结点或红黑树根节点

e = p;

else if (p instanceof TreeNode)

// 将key-value节点添加到红黑树,若已存在,直接返回已存在的key-value节点

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

else {

// 遍历链表,查找key对应的node

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {

// 遍历结束,key不存在,把key-value节点添加到链表中

p.next = newNode(hash, key, value, null);

if (binCount >= TREEIFY_THRESHOLD - 1) // 链表长度超过8,试图转成红黑树

treeifyBin(tab, hash);

break;

}

if (e.hash == hash && ((k = e.key) == key || (key != null && key.equals(k)))) // 找到key,遍历结束

break;

p = e;

}

}

// 对于已存在的key,更新value,并返回旧value

if (e != null) {

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null) // 判断是否覆盖

e.value = value;

afterNodeAccess(e); // 回调函数,在LinkedHashMap中使用

return oldValue;

}

}

++modCount; // 修改次数加1

// 如果key数量超过threshold,则扩容

if (++size > threshold)

resize();

afterNodeInsertion(evict); // 回调函数,在LinkedHashMap中使用

return null;

}

final void treeifyBin(Node<K,V>[] tab, int hash) {

int n, index; Node<K,V> e;

if (tab == null || (n = tab.length) < MIN_TREEIFY_CAPACITY)

resize(); // 数组大小不超过MIN_TREEIFY_CAPACITY,不使用红黑树,先扩容一次

else if ((e = tab[index = (n - 1) & hash]) != null) {

TreeNode<K,V> hd = null, tl = null;

do {

TreeNode<K,V> p = replacementTreeNode(e, null); // 链表Node实例都转成TreeNode实例,只是类型转换,还不是树

if (tl == null)

hd = p;

else {

p.prev = tl;

tl.next = p;

}

tl = p;

} while ((e = e.next) != null);

if ((tab[index] = hd) != null)

hd.treeify(tab); // 转成红黑树结构

}

}

~~~

#### 批量添加key-value

~~~

public void putAll(Map<? extends K, ? extends V> m) {

putMapEntries(m, true);

}

final void putMapEntries(Map<? extends K, ? extends V> m, boolean evict) {

int s = m.size();

if (s > 0) {

// 如果数组为空,根据key数量计算数组大小。第一次put的时候才真正的创建数组

if (table == null) {

float ft = ((float)s / loadFactor) + 1.0F; // 为什么+1?从结果来看,对于s / loadFactor = 2^n次方的情况,+1后再调用tableSizeFor的计算结果是2^(n+1)次方,就是提前扩容了。

int t = ((ft < (float)MAXIMUM_CAPACITY) ?

(int)ft : MAXIMUM_CAPACITY);

if (t > threshold)

threshold = tableSizeFor(t); // 增加到2^n次方

}

else if (s > threshold)

resize();

// 循环导入key-value

for (Map.Entry<? extends K, ? extends V> e : m.entrySet()) {

K key = e.getKey();

V value = e.getValue();

putVal(hash(key), key, value, false, evict);

}

}

}

~~~

#### 扩容

~~~

final Node<K,V>[] resize() {

Node<K,V>[] oldTab = table;

int oldCap = (oldTab == null) ? 0 : oldTab.length;

int oldThr = threshold;

int newCap, newThr = 0;

if (oldCap > 0) {

if (oldCap >= MAXIMUM_CAPACITY) { // 已达最大容量,threshold设置为Integer.MAX_VALUE,不会再触发扩容

threshold = Integer.MAX_VALUE;

return oldTab;

}

// 数组大小扩容两倍

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY & oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // 扩容阈值也增加2倍。oldCap < DEFAULT_INITIAL_CAPACITY时,threshold值是DEFAULT_INITIAL_CAPACITY,不能直接2倍,需要按公式 数组大小 * 加载因子 重新计算

}

else if (oldThr > 0) // initial capacity was placed in threshold

newCap = oldThr;

else { // zero initial threshold signifies using defaults

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap]; // 新建数组

table = newTab;

if (oldTab != null) {

// 遍历旧数组,把原来的数据复制到新数组

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

// 如果是单个节点,计算新下标,并放入新数组中

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

// 如果是红黑树,调用split

else if (e instanceof TreeNode)

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

// 如果是链表,按e.hash & oldCap == 0拆分成两个链表,一个存放到原来的位置j,另一个存放到位置j+oldCap

else { // preserve order

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

~~~

#### 删除key

~~~

public V remove(Object key) {

Node<K,V> e;

return (e = removeNode(hash(key), key, null, false, true)) == null ?

null : e.value;

}

final Node<K,V> removeNode(int hash, Object key, Object value, boolean matchValue, boolean movable) {

Node<K,V>[] tab; Node<K,V> p; int n, index;

if ((tab = table) != null && (n = tab.length) > 0 &&

(p = tab[index = (n - 1) & hash]) != null) {

Node<K,V> node = null, e; K k; V v;

if (p.hash == hash && ((k = p.key) == key || (key != null && key.equals(k)))) // key节点是链表头部或树的根节点

node = p;

else if ((e = p.next) != null) {

if (p instanceof TreeNode) // 如果是红黑树,在树上查找key节点

node = ((TreeNode<K,V>)p).getTreeNode(hash, key);

else { // 如果是链表,遍历链表查找key节点

do {

if (e.hash == hash &&

((k = e.key) == key ||

(key != null && key.equals(k)))) {

node = e;

break;

}

p = e;

} while ((e = e.next) != null);

}

}

if (node != null && (!matchValue || (v = node.value) == value || (value != null && value.equals(v)))) {

// 如果是红黑树,从树上删除 key节点

if (node instanceof TreeNode)

((TreeNode<K,V>)node).removeTreeNode(this, tab, movable);

// 如果是链表,从链表上删除 key节点

else if (node == p)

tab[index] = node.next;

else

p.next = node.next;

++modCount;

--size;

afterNodeRemoval(node); // 回调函数,在LinkedHashMap中使用

return node;

}

}

return null;

}

~~~

#### 获取key-value

~~~

public V get(Object key) {

Node<K,V> e;

return (e = getNode(hash(key), key)) == null ? null : e.value;

}

final Node<K,V> getNode(int hash, Object key) {

Node<K,V>[] tab; Node<K,V> first, e; int n; K k;

if ((tab = table) != null && (n = tab.length) > 0 && (first = tab[(n - 1) & hash]) != null) {

if (first.hash == hash && ((k = first.key) == key || (key != null && key.equals(k)))) // key节点是链表头部或树的根节点

return first;

if ((e = first.next) != null) {

if (first instanceof TreeNode) // 如果是红黑树,在树上查找key节点

return ((TreeNode<K,V>)first).getTreeNode(hash, key);

do { // 如果是链表,遍历链表查找key节点

if (e.hash == hash && ((k = e.key) == key || (key != null && key.equals(k))))

return e;

} while ((e = e.next) != null);

}

}

return null;

}

~~~

### 迭代器Iterator

~~~

abstract class HashIterator {

Node<K,V> next; // 下一个返回的节点

Node<K,V> current; // 当前节点

int expectedModCount; // 用于快速失败

int index; // 当前位置

HashIterator() {

expectedModCount = modCount; // 初始化为map当前修改次数

Node<K,V>[] t = table;

current = next = null;

index = 0;

// 遍历数组与链表,找到第一个不为空的节点

if (t != null && size > 0) {

do {} while (index < t.length && (next = t[index++]) == null);

}

}

public final boolean hasNext() {

return next != null;

}

final Node<K,V> nextNode() {

Node<K,V>[] t;

Node<K,V> e = next;

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

if (e == null)

throw new NoSuchElementException();

// 从当前位置开始,遍历数组与链表,找到下一个不为空的节点

if ((next = (current = e).next) == null && (t = table) != null) {

do {} while (index < t.length && (next = t[index++]) == null);

}

return e;

}

public final void remove() {

Node<K,V> p = current;

if (p == null)

throw new IllegalStateException();

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

current = null;

K key = p.key;

// 删除当前节点

removeNode(hash(key), key, null, false, false);

expectedModCount = modCount; // 更新expectedModCount,通过迭代器删除,不会快速失败

}

}

final class KeyIterator extends HashIterator

implements Iterator<K> {

public final K next() { return nextNode().key; }

}

final class ValueIterator extends HashIterator

implements Iterator<V> {

public final V next() { return nextNode().value; }

}

final class EntryIterator extends HashIterator

implements Iterator<Map.Entry<K,V>> {

public final Map.Entry<K,V> next() { return nextNode(); }

}

~~~

### 并发迭代器Spliterator

~~~

static class HashMapSpliterator<K,V> {

final HashMap<K,V> map;

Node<K,V> current; // 当前节点

int index; // 当前位置,trySplit、tryAdvance时变化

int fence; // 初始值-1

int est; // 预估数量

int expectedModCount; // 用于快速失败

HashMapSpliterator(HashMap<K,V> m, int origin,

int fence, int est,

int expectedModCount) {

this.map = m;

this.index = origin;

this.fence = fence;

this.est = est;

this.expectedModCount = expectedModCount;

}

final int getFence() { // initialize fence and size on first use

int hi;

if ((hi = fence) < 0) {

HashMap<K,V> m = map;

est = m.size; // 预估数量=map元素数量

expectedModCount = m.modCount;

Node<K,V>[] tab = m.table;

hi = fence = (tab == null) ? 0 : tab.length; // fence设置成数组长度

}

return hi;

}

public final long estimateSize() {

getFence(); // force init

return (long) est;

}

}

static final class KeySpliterator<K,V>

extends HashMapSpliterator<K,V>

implements Spliterator<K> {

KeySpliterator(HashMap<K,V> m, int origin, int fence, int est,

int expectedModCount) {

super(m, origin, fence, est, expectedModCount);

}

// 每次将数组切一半出去,新建一个KeySpliterator实例。

public KeySpliterator<K,V> trySplit() {

int hi = getFence(), lo = index, mid = (lo + hi) >>> 1;

return (lo >= mid || current != null) ? null :

new KeySpliterator<>(map, lo, index = mid, est >>>= 1, // 当前位置index变成剩余一半的开始位置,预估大小减半

expectedModCount);

}

public void forEachRemaining(Consumer<? super K> action) {

int i, hi, mc;

if (action == null)

throw new NullPointerException();

HashMap<K,V> m = map;

Node<K,V>[] tab = m.table;

if ((hi = fence) < 0) {

mc = expectedModCount = m.modCount;

hi = fence = (tab == null) ? 0 : tab.length;

}

else

mc = expectedModCount;

// 遍历所有节点,执行action操作

if (tab != null && tab.length >= hi &&

(i = index) >= 0 && (i < (index = hi) || current != null)) {

Node<K,V> p = current;

current = null;

do {

if (p == null)

p = tab[i++];

else {

action.accept(p.key);

p = p.next;

}

} while (p != null || i < hi);

if (m.modCount != mc)

throw new ConcurrentModificationException();

}

}

// 尝试对下一个节点执行action操作

public boolean tryAdvance(Consumer<? super K> action) {

int hi;

if (action == null)

throw new NullPointerException();

Node<K,V>[] tab = map.table;

if (tab != null && tab.length >= (hi = getFence()) && index >= 0) {

while (current != null || index < hi) {

if (current == null)

current = tab[index++];

else {

K k = current.key;

current = current.next;

action.accept(k);

if (map.modCount != expectedModCount)

throw new ConcurrentModificationException();

return true;

}

}

}

return false;

}

public int characteristics() {

return (fence < 0 || est == map.size ? Spliterator.SIZED : 0) |

Spliterator.DISTINCT;

}

}

~~~

ValueSpliterator 与 EntrySpliterator 的实现与KeySpliterator类似,这里不再赘述。

- 概要

- JDK源码解读系列

- 容器类

- ArrayList源码解读

- LinkedList源码解读

- HashSet源码解读

- LinkedHashSet源码解读

- TreeSet源码解读

- HashMap源码解读

- LinkedHashMap源码解读

- 数据结构

- 红黑树详解

- 设计模式

- 设计模式的7个基本原则

- 单一职责原则

- 开闭原则

- 里氏替换原则

- 依赖倒置原则

- 接口隔离原则

- 迪米特法则

- 合成复用原则

- GoF的23种设计模式

- 创建型模式

- 单例模式

- 原型模式

- 工厂方法模式

- 抽象工厂模式

- 建造者模式

- 结构型模式

- 代理模式

- 适配器模式

- 装饰器模式

- 行为型模式

- 模板方法模式

- 策略模式

- 命令模式

- 责任链模式

- 观察者模式

- Mybatis技术内幕

- 第一章 Mybatis整体架构

- 第二章 基础支持层

- 2.1 解析器模块

- 2.2 反射工具箱

- 2.3 类型转换

- 2.4 日志模块

- 2.5 资源加载

- 2.6 数据源DataSource

- 2.7 事务Trasaction

- 2.8 Binding模块

- 2.9 缓存模块

- 第三章 核心处理层

- 3.1 MyBatis初始化

- 3.2 SqlNode&SqlSource

- 3.3 ResultSetHandler

- 3.4 KeyGenerator

- 3.5 StatementHandler

- 3.6 Executor

- 3.7 SqlSession