Kubernetes GUI resource management add-on: Dashboard

Deploying kubernetes-dashboard

1. Prepare the image

```
docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3
docker images | grep dashboard
docker tag fcac9aa03fd6 harbor.od.com/public/dashboard:v1.8.3
docker push harbor.od.com/public/dashboard:v1.8.3
```

Prepare the resource manifests

https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dashboard/dashboard.yaml

Create the directory

```
mkdir -p /data/k8s-yaml/dashboard
cd /data/k8s-yaml/dashboard/
```

[root@hdss7-200 dashboard]# cat rbac.yaml

```
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system
```

[root@hdss7-200 dashboard]# cat dp.yaml

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        # v1.8.3 is the only tag pushed to harbor so far; it is switched to v1.10.1 later
        image: harbor.od.com/public/dashboard:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
```

[root@hdss7-200 dashboard]# cat svc.yaml

```
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443
```

[root@hdss7-200 dashboard]# cat ingress.yaml

```
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.od.com
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443
```

Create the resources (run on any node):

```
kubectl create -f http://k8s-yaml.od.com/dashboard/rbac.yaml
kubectl create -f http://k8s-yaml.od.com/dashboard/dp.yaml
kubectl create -f http://k8s-yaml.od.com/dashboard/svc.yaml
kubectl create -f http://k8s-yaml.od.com/dashboard/ingress.yaml
```
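Before moving on, it can help to confirm that the Deployment actually rolled out. The following is only a minimal sketch, assuming kubectl on this node is already configured for the cluster; the function name `check_dashboard` is illustrative and not part of the original setup.

```shell
# Hypothetical helper: wait for the rollout, then list the objects created above.
check_dashboard() {
  # Block until the Deployment's pods are ready (or the rollout fails)
  kubectl rollout status deployment/kubernetes-dashboard -n kube-system || return 1
  # The pod and Service carry the k8s-app label from the manifests
  kubectl get pods,svc -n kube-system -l k8s-app=kubernetes-dashboard -o wide
  # The Ingress has no such label, so it is fetched by name
  kubectl get ingress kubernetes-dashboard -n kube-system
}
```

Running `check_dashboard` after the four `kubectl create` commands should end with the pod, Service and Ingress listed; a pod stuck in ImagePullBackOff usually points at the image tag in dp.yaml.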

Add the DNS record:

```
vi /var/named/od.com.zone
# in the editor, append the record below and increment the zone's serial number:
dashboard          A    10.4.7.10
systemctl restart named
```
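To confirm that the new record resolves, one option is to query the name server directly. This sketch assumes `dig` (from bind-utils) is installed; the helper name `resolves_to` is hypothetical, and the server address is left as a parameter because the DNS host's IP is not stated in this section.

```shell
# Hypothetical helper: succeed only if NAME resolves to EXPECTED when asking SERVER.
resolves_to() {
  name="$1"; expected="$2"; server="$3"
  # +short prints only the addresses from the answer section
  answer="$(dig -t A "$name" "@$server" +short)"
  [ "$answer" = "$expected" ]
}
```

For example, `resolves_to dashboard.od.com 10.4.7.10 <dns-server-ip> && echo ok`, with `<dns-server-ip>` replaced by the address of the bind9 host.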

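Open it in a browser:

http://dashboard.od.com

Note, however, that the v1.8 dashboard we just installed lets users skip the login screen by default.

Skipping login is clearly unsafe here: in rbac.yaml the dashboard's ServiceAccount is bound to the cluster-admin ClusterRole, which is the cluster administrator role and carries very broad permissions. For that reason we switch to v1.10 or later.

Pull v1.10.1: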

```
docker pull loveone/kubernetes-dashboard-amd64:v1.10.1
docker tag f9aed6605b81 harbor.od.com/public/dashboard:v1.10.1
docker push harbor.od.com/public/dashboard:v1.10.1
```

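Change the image tag in dp.yaml to v1.10.1 and redeploy. I edited the running Deployment directly with kubectl edit rather than using kubectl apply: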

kubectl edit deploy kubernetes-dashboard -n kube-system

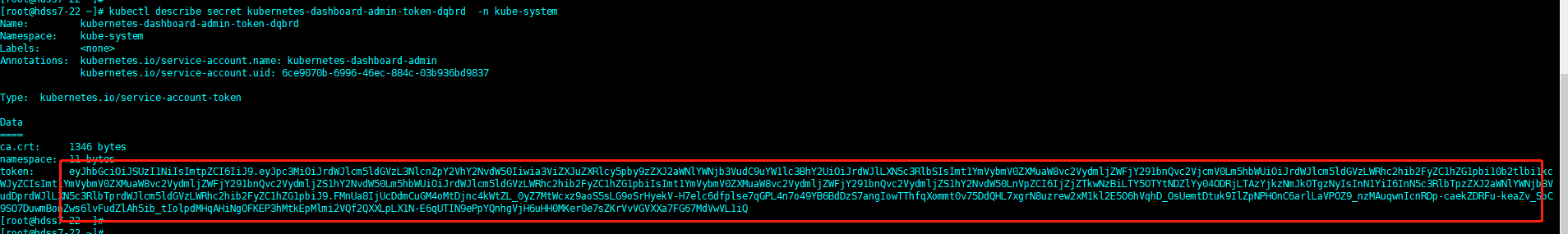

The Skip option that was there before is now gone, so logging in requires a token. To find the token:

kubectl get secret -n kube-system

kubectl describe secret kubernetes-dashboard-admin-token-pg77n -n kube-system
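The suffix of the secret name (pg77n here) is generated and will differ on every cluster. As a sketch, the token can also be extracted without copying the secret name by hand. This assumes the ServiceAccount has an auto-created token secret, which holds for older Kubernetes releases such as the one used here but not for 1.24 and later; `dashboard_token` is an illustrative name.

```shell
# Hypothetical helper: print the decoded bearer token of the dashboard ServiceAccount.
dashboard_token() {
  # Name of the first secret attached to the ServiceAccount
  secret="$(kubectl get serviceaccount kubernetes-dashboard-admin -n kube-system \
    -o jsonpath='{.secrets[0].name}')" || return 1
  # The token is stored base64-encoded under .data.token
  kubectl get secret "$secret" -n kube-system -o jsonpath='{.data.token}' | base64 -d
}
```

`dashboard_token` prints the same value that appears in the `token:` field of the `kubectl describe secret` output.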

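That gives us the token. Trying it, login still fails: the dashboard must be accessed over HTTPS for the login to work, so the next step is to issue a certificate.

We again use cfssl to issue it, on hdss7-200: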

cd /opt/certs/

vi dashboard-csr.json

```
{
    "CN": "*.od.com",
    "hosts": [
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}
```

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server dashboard-csr.json |cfssl-json -bare dashboard
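It is worth inspecting the issued certificate before copying it anywhere. A small sketch, assuming `openssl` is available on hdss7-200; `cert_summary` is a hypothetical helper name.

```shell
# Hypothetical helper: print subject, issuer and validity window of a PEM certificate.
cert_summary() {
  openssl x509 -in "$1" -noout -subject -issuer -dates
}
```

For example, `cert_summary /opt/certs/dashboard.pem`. Because the `hosts` list in the CSR is empty, the certificate carries no subjectAltName entries, so recent browsers may still warn about it even when the CA is trusted.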

Then copy the certificate and key to the nginx servers; both 7-11 and 7-12 need them:

cd /etc/nginx/

mkdir certs

cd certs

scp hdss7-200:/opt/certs/dash* ./

cd /etc/nginx/conf.d/

vi dashboard.od.com.conf

```
server {
    listen       80;
    server_name  dashboard.od.com;

    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen       443 ssl;
    server_name  dashboard.od.com;

    ssl_certificate "certs/dashboard.pem";
    ssl_certificate_key "certs/dashboard-key.pem";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout  10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}
```

nginx -t

nginx -s reload
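After the reload, a quick check from any machine that resolves dashboard.od.com can confirm the redirect. A sketch assuming `curl` is installed; `http_status` is an illustrative helper name.

```shell
# Hypothetical helper: print only the HTTP status code returned for a URL.
http_status() {
  # -s: no progress output, -o /dev/null: discard the body, -w: print the status code
  curl -s -o /dev/null -w '%{http_code}' "$1"
}
```

`http_status http://dashboard.od.com` should print 301 because of the `permanent` rewrite in the port 80 server block. For the HTTPS side, `curl -kI https://dashboard.od.com` skips CA verification, which is only appropriate as a quick smoke test.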

Dashboard graphical monitoring: deploying heapster

```
docker pull quay.io/bitnami/heapster:1.5.4
docker tag c359b95ad38b harbor.od.com/public/heapster:v1.5.4
docker push harbor.od.com/public/heapster:v1.5.4
```

[root@hdss7-200 heapster]# cat rbac.yaml

```
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
# rbac.authorization.k8s.io/v1 replaces v1beta1 (same schema; v1beta1 was removed in Kubernetes 1.22)
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system
```

[root@hdss7-200 heapster]# cat dp.yaml

```
# apps/v1 replaces extensions/v1beta1, which stopped serving Deployments in Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  # apps/v1 requires an explicit selector that matches the pod labels
  selector:
    matchLabels:
      k8s-app: heapster
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.od.com/public/heapster:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default
```

[root@hdss7-200 heapster]# cat svc.yaml

```
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster
```
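The three heapster manifests still have to be applied to the cluster. Presumably this works the same way as for the dashboard; the sketch below assumes the files are published under a heapster/ path on k8s-yaml.od.com, which is not shown in this section, and `apply_heapster` is a hypothetical helper name.

```shell
# Hypothetical helper: apply the heapster manifests in dependency order.
apply_heapster() {
  # Assumed base URL, mirroring where the dashboard manifests are served from
  base="http://k8s-yaml.od.com/heapster"
  for f in rbac.yaml dp.yaml svc.yaml; do
    kubectl apply -f "$base/$f" || return 1
  done
}
```

Once `apply_heapster` has run, `kubectl get pods -n kube-system -l k8s-app=heapster` should show the heapster pod.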
