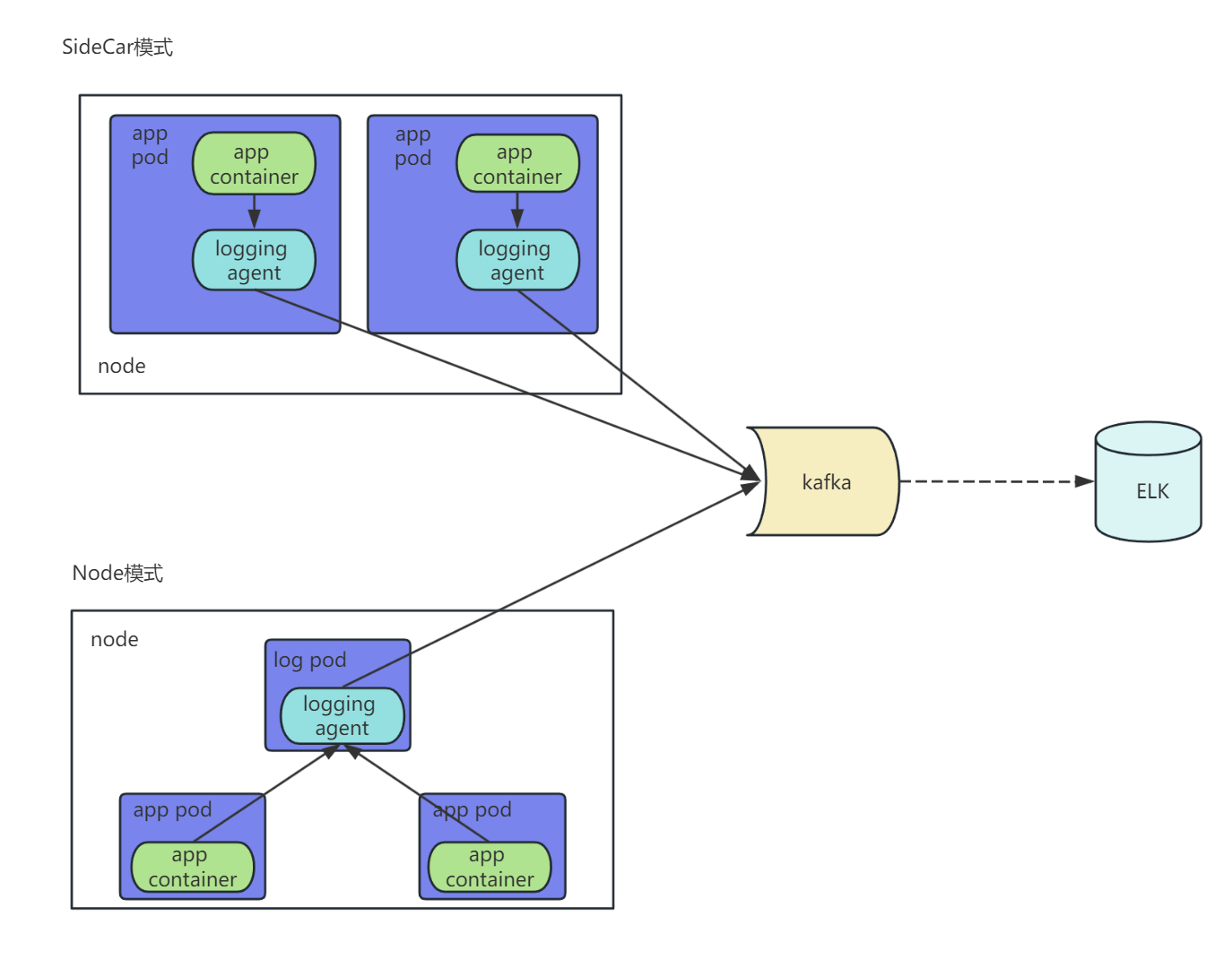

### Log collection for Kubernetes services generally follows one of two patterns:

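**SideCar pattern**

Every Pod carries an extra logging container that collects the logs of the containers in that Pod.

Drawbacks:

1. Higher resource usage, both CPU and memory.

2. Too many connections to the backend; the larger the cluster, the bigger the potential problems.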

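**Node pattern**

Only one logging container is deployed per Node, and it collects the logs of all containers on that Node.

Advantages:

1. Lower resource usage; the advantage grows with cluster size.

2. It is the pattern recommended by the community.

Drawbacks:

It requires a more capable logging agent, one that can discover each container's log sources by itself.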
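To make the scaling argument concrete, here is a back-of-the-envelope sketch in bash. It counts logging agents under each pattern and assumes (hypothetically) that every agent holds one connection to the backend, so the agent count also approximates the connection count. The cluster sizes and the 30 Pods per Node are made-up illustration values, not measurements.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope comparison of the SideCar and Node patterns.
# Assumption (hypothetical): each logging agent keeps exactly one backend connection.

# SideCar pattern: one agent per Pod, so agents = nodes * pods per node.
sidecar_agents() {
  local nodes=$1 pods_per_node=$2
  echo $(( nodes * pods_per_node ))
}

# Node pattern: one agent per Node (e.g. a DaemonSet), independent of Pod count.
node_agents() {
  local nodes=$1
  echo "$nodes"
}

# Illustrative cluster sizes only.
pods_per_node=30
for nodes in 10 100 500; do
  printf 'nodes=%-4s sidecar_agents=%-6s node_agents=%s\n' \
    "$nodes" "$(sidecar_agents "$nodes" "$pods_per_node")" "$(node_agents "$nodes")"
done
```

With 30 Pods per Node, the SideCar agent count is always 30 times the Node agent count, so the absolute gap widens as the cluster grows.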

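Below are the architecture diagrams of the two patterns:

This article covers the Node pattern.

For deploying Kafka + ELK, see this blog post: https://blog.51cto.com/qwer/2607037

Next, deploy the logging agent (log-pilot) as a DaemonSet so that one instance runs on every Node:

`log-pilot-kafka.yaml`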

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: log-pilot2-configuration
  #namespace: ns-elastic
data:
  logging_output: "kafka"
  kafka_brokers: "10.4.7.104:9092"
  kafka_version: "0.10.0"
  # configure all valid topics in kafka
  # when disable auto-create topic
  kafka_topics: "tomcat-syslog,tomcat-access"
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-pilot2
  #namespace: ns-elastic
  labels:
    k8s-app: log-pilot2
spec:
  selector:
    matchLabels:
      k8s-app: log-pilot2
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        k8s-app: log-pilot2
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: log-pilot2
        #
        # wget https://github.com/AliyunContainerService/log-pilot/archive/v0.9.7.zip
        # unzip log-pilot-0.9.7.zip
        # vim ./log-pilot-0.9.7/assets/filebeat/config.filebeat
        # ...
        # output.kafka:
        #     hosts: [$KAFKA_BROKERS]
        #     topic: '%{[topic]}'
        #     codec.format:
        #         string: '%{[message]}'
        # ...
        image: registry.cn-hangzhou.aliyuncs.com/acs/log-pilot:0.9.7-filebeat
        env:
        - name: "LOGGING_OUTPUT"
          valueFrom:
            configMapKeyRef:
              name: log-pilot2-configuration
              key: logging_output
        - name: "KAFKA_BROKERS"
          valueFrom:
            configMapKeyRef:
              name: log-pilot2-configuration
              key: kafka_brokers
        - name: "KAFKA_VERSION"
          valueFrom:
            configMapKeyRef:
              name: log-pilot2-configuration
              key: kafka_version
        - name: "NODE_NAME"
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        volumeMounts:
        - name: sock
          mountPath: /var/run/docker.sock
        - name: logs
          mountPath: /var/log/filebeat
        - name: state
          mountPath: /var/lib/filebeat
        - name: root
          mountPath: /host
          readOnly: true
        - name: localtime
          mountPath: /etc/localtime
        # configure all valid topics in kafka
        # when disable auto-create topic
        - name: config-volume
          mountPath: /etc/filebeat/config
        securityContext:
          capabilities:
            add:
            - SYS_ADMIN
      terminationGracePeriodSeconds: 30
      volumes:
      - name: sock
        hostPath:
          path: /var/run/docker.sock
          type: Socket
      - name: logs
        hostPath:
          path: /var/log/filebeat
          type: DirectoryOrCreate
      - name: state
        hostPath:
          path: /var/lib/filebeat
          type: DirectoryOrCreate
      - name: root
        hostPath:
          path: /
          type: Directory
      - name: localtime
        hostPath:
          path: /etc/localtime
          type: File
      # kubelet sync period
      - name: config-volume
        configMap:
          name: log-pilot2-configuration
          items:
          - key: kafka_topics
            path: kafka_topics
```
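The ConfigMap comment says `kafka_topics` must list every valid topic when Kafka's automatic topic creation is disabled (the broker setting `auto.create.topics.enable=false`). In that case the topics have to exist before log-pilot writes to them. The bash sketch below does a dry run: it prints one `kafka-topics.sh --create` command per entry in `kafka_topics` so you can review them first. The install path and ZooKeeper address match the ones used later in this article. The partition count and replication factor (both 1) are placeholder assumptions; adjust them for your cluster.

```bash
#!/usr/bin/env bash
# Dry run: print the commands that pre-create every topic listed in the
# log-pilot ConfigMap's kafka_topics value.
# Assumptions: Kafka under /opt/kafka, ZooKeeper at 10.4.7.104:2181,
# 1 partition and replication factor 1 (placeholders).

KAFKA_BIN=/opt/kafka/bin
ZOOKEEPER=10.4.7.104:2181
KAFKA_TOPICS="tomcat-syslog,tomcat-access"   # same value as kafka_topics in the ConfigMap

create_topic_cmds() {
  local csv=$1 topic
  local -a topics
  IFS=, read -ra topics <<< "$csv"   # split the comma-separated list
  for topic in "${topics[@]}"; do
    echo "$KAFKA_BIN/kafka-topics.sh --zookeeper $ZOOKEEPER --create --topic $topic --partitions 1 --replication-factor 1"
  done
}

create_topic_cmds "$KAFKA_TOPICS"
# After reviewing the output, run the commands with:
#   create_topic_cmds "$KAFKA_TOPICS" | sh
```

Printing instead of executing keeps the script safe to run anywhere and makes it easy to check the topic names against the `aliyun_logs_*` names used by the application Pods.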

Then deploy a Tomcat instance to check that logs are collected correctly:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: tomcat
  name: tomcat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      tolerations:
      - key: "node-role.kubernetes.io/master"
        effect: "NoSchedule"
      containers:
      - name: tomcat
        image: "tomcat:7.0"
        env: # Note 1: add the matching env vars (two log sources are collected below: 1. stdout 2. /usr/local/tomcat/logs/catalina.*.log)
        - name: aliyun_logs_tomcat-syslog # Kafka topic tomcat-syslog (the index name would be tomcat-syslog if logs were sent to ES)
          value: "stdout"
        - name: aliyun_logs_tomcat-access # Kafka topic tomcat-access (the index name would be tomcat-access if logs were sent to ES)
          value: "/usr/local/tomcat/logs/catalina.*.log"
        volumeMounts: # Note 2: the in-Pod directories holding the application logs to collect must be shared through a volume; several directories can be collected
        - name: tomcat-log
          mountPath: /usr/local/tomcat/logs
      volumes:
      - name: tomcat-log
        emptyDir: {}
```
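The two notes in this manifest amount to a naming convention: each `aliyun_logs_<name>` variable declares one log source, `<name>` becomes the Kafka topic (the index name when the output is Elasticsearch), and the value is either `stdout` or a file glob inside the container, which must sit in a directory shared through a volume. The bash sketch below mimics that convention for the Tomcat container above. It is a hypothetical illustration written for this article, not log-pilot's actual parsing code, and it ignores any additional per-source options log-pilot may support. `describe_source` and `is_under_mount` are made-up helper names.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the aliyun_logs_<name> convention; NOT log-pilot's real code.

PREFIX=aliyun_logs_

# describe_source NAME=VALUE
# Prints "<topic> <kind> <source>" for an aliyun_logs_ variable, nothing otherwise.
describe_source() {
  local pair=$1 name value topic
  name=${pair%%=*}
  value=${pair#*=}
  [[ $name == "$PREFIX"* ]] || return 0   # not a log-source variable
  topic=${name#"$PREFIX"}
  if [[ $value == stdout ]]; then
    echo "$topic stdout -"
  else
    echo "$topic file $value"
  fi
}

# is_under_mount PATH_GLOB MOUNT_PATH...
# Succeeds if the directory part of PATH_GLOB equals a mount path or lies below one
# (the requirement described in Note 2).
is_under_mount() {
  local dir=${1%/*} mnt
  shift
  for mnt in "$@"; do
    mnt=${mnt%/}
    if [[ $dir == "$mnt" || $dir == "$mnt"/* ]]; then
      return 0
    fi
  done
  return 1
}

# Example: env and volumeMounts of the Tomcat container above.
env_pairs=(
  "aliyun_logs_tomcat-syslog=stdout"
  "aliyun_logs_tomcat-access=/usr/local/tomcat/logs/catalina.*.log"
)
mounts=(/usr/local/tomcat/logs)

for pair in "${env_pairs[@]}"; do
  read -r topic kind src <<< "$(describe_source "$pair")"
  if [[ $kind == file ]] && ! is_under_mount "$src" "${mounts[@]}"; then
    echo "WARNING: $src is not under a shared volume" >&2
  fi
  echo "topic=$topic kind=$kind source=$src"
done
```

If `catalina.*.log` lived in a directory without a `volumeMounts` entry, the sketch would print the warning, which corresponds to Note 2 in the manifest.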

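Once everything is deployed, check Kafka.

List the topics that have been created in Kafka:

`/opt/kafka/bin/kafka-topics.sh --zookeeper 10.4.7.104:2181 --list`

(On Kafka 2.2 and later, `--bootstrap-server 10.4.7.104:9092` can be used in place of `--zookeeper`; Kafka 3.0 removed the `--zookeeper` option from this tool.)

Consume the data in a topic:

`/opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server 10.4.7.104:9092 --topic tomcat-access --from-beginning`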
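To confirm that every topic in the ConfigMap's `kafka_topics` actually appears in the `--list` output, you can pipe the listing into a small bash helper. `missing_topics` is a name made up for this article and is not part of Kafka's tooling. It prints each expected topic that is absent and returns non-zero if any is missing.

```bash
#!/usr/bin/env bash
# missing_topics EXPECTED_CSV   (reads `kafka-topics.sh --list` output on stdin)
# Prints every expected topic not found in the listing; returns 1 if any is missing.
# Hypothetical helper for this article.
missing_topics() {
  local listed topic status=0
  local -a expected
  listed=$(cat)
  IFS=, read -ra expected <<< "$1"
  for topic in "${expected[@]}"; do
    # -x: match the whole line, -F: treat the topic name as a fixed string
    if ! grep -qxF -- "$topic" <<< "$listed"; then
      echo "$topic"
      status=1
    fi
  done
  return "$status"
}

# Example usage against the broker used in this article:
#   /opt/kafka/bin/kafka-topics.sh --zookeeper 10.4.7.104:2181 --list \
#     | missing_topics "tomcat-syslog,tomcat-access"
```

Extra lines in the listing, such as Kafka's internal `__consumer_offsets` topic, are simply ignored; only the expected names are checked.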
