# 1. Deploying a k8s cluster with kubeadm

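There are generally two ways to deploy k8s in production: kubeadm (officially recommended) and binary installation. Binary installation runs the cluster components as system daemons and is very tedious. kubeadm deploys the components as containers, which is quick and convenient; it requires docker and kubelet.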

## 1.1 Environment preparation

| Role | IP |
| --- | --- |
| master | 192.168.56.10 |
| node01 | 192.168.56.11 |
| node02 | 192.168.56.12 |

### 1.1.1 Configure hostname resolution

> Add the host entries (run on all three machines)

```
cat >> /etc/hosts << EOF
192.168.56.10 master
192.168.56.11 node01
192.168.56.12 node02
EOF
```
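Because `cat >>` appends unconditionally, running the step twice duplicates the entries. Below is a minimal sketch of an idempotent variant. The helper name `add_host_entry` and the `HOSTS_FILE` variable are illustrative assumptions, not part of the original notes, and the demo writes to a temporary file rather than `/etc/hosts`.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not already mapped.
add_host_entry() {
  hosts_file=$1; ip=$2; name=$3
  # Look for NAME as a whole field (column 2 or later) on a non-comment line.
  if ! awk -v n="$name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Demo against a temporary file; point HOSTS_FILE at /etc/hosts (as root) for real use.
HOSTS_FILE="$(mktemp)"
for round in 1 2; do   # the second round must not add duplicates
  add_host_entry "$HOSTS_FILE" 192.168.56.10 master
  add_host_entry "$HOSTS_FILE" 192.168.56.11 node01
  add_host_entry "$HOSTS_FILE" 192.168.56.12 node02
done
cat "$HOSTS_FILE"
```

The check matches the hostname as a whole field, so an existing entry for `node01` does not block adding a name such as `node011`.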

### 1.1.2 Disable swap

```
swapoff -a                           # disable swap until the next reboot
sed -ri 's/.*swap.*/#&/' /etc/fstab  # disable permanently by commenting out the swap entry
```

### 1.1.3 Disable the firewall

```
systemctl stop firewalld
systemctl disable firewalld
```

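### 1.1.4 Disable SELinux

```
# -ri = extended regex + in-place edit (the order "-ir" would be parsed as -i with backup suffix "r")
sed -ri 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
setenforce 0   # switch to permissive mode for the current boot
```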

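Note: in a `sed` replacement, `&` stands for the whole text matched by the pattern. This is what the swap command in 1.1.2 uses to put `#` in front of the matching line.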
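To see what `&` does without touching the real `/etc/fstab`, the expression from 1.1.2 can be tried on a throwaway file. This is only a sketch: the two fstab lines are made-up sample data, and `-r` is omitted because this pattern uses no extended-regex features.

```shell
# Throwaway sample file with illustrative (not real) fstab entries.
sample_fstab="$(mktemp)"
cat > "$sample_fstab" << 'EOF'
/dev/mapper/centos-root /     xfs   defaults 0 0
/dev/mapper/centos-swap swap  swap  defaults 0 0
EOF

# '&' expands to the whole matched text (here the full line),
# so only lines containing "swap" gain a leading '#'.
commented="$(sed 's/.*swap.*/#&/' "$sample_fstab")"
printf '%s\n' "$commented"
rm -f "$sample_fstab"
```

Running the same expression a second time on the real file would add another `#` to the already commented line, which is harmless but worth knowing when re-running the setup.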

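### 1.1.5 Set the hostnames

Set each machine's hostname according to the node plan above.

```
hostnamectl set-hostname master   # on 192.168.56.10
hostnamectl set-hostname node01   # on 192.168.56.11
hostnamectl set-hostname node02   # on 192.168.56.12
```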

### 1.1.6 Set `net.bridge.bridge-nf-call-iptables` to 1

This passes bridged IPv4 traffic to the iptables chains.

```
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system
```

### 1.1.7 Time synchronization

```
yum install ntpdate -y
ntpdate -u asia.pool.ntp.org
```

### 1.1.8 Confirm that `br_netfilter` is loaded

```
[root@10 ~]# modprobe br_netfilter
[root@10 ~]# lsmod | grep br_netfilter
br_netfilter           22256  0
bridge                151336  2 br_netfilter,ebtable_broute
```

## 1.2 Install docker, kubeadm, kubelet, and kubectl

**Install docker first** on all machines; see the docker installation notes.

**1. Add the Aliyun yum repository**

* `kubeadm`: the command to bootstrap the cluster.

* `kubelet`: the component that runs on all of the machines in your cluster and does things like starting pods and containers.

* `kubectl`: the command line util to talk to your cluster.

~~~
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
~~~

**2. Install**

```
yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
```

**3. Enable kubelet at boot**

```
systemctl enable kubelet
```

## 1.3 Initialize the master

> master = 192.168.56.10

> Check the installed k8s version

```
[root@master ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.2", GitCommit:"092fbfbf53427de67cac1e9fa54aaa09a28371d7", GitTreeState:"clean", BuildDate:"2021-06-16T12:59:11Z", GoVersion:"go1.16.5", Compiler:"gc", Platform:"linux/amd64"}
Unable to connect to the server: dial tcp: lookup localhost on 192.168.1.1:53: no such host
```

**1. Initialize the master, pulling the images from the Aliyun registry**

```
kubeadm init \
  --apiserver-advertise-address=192.168.56.10 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.18.0 \
  --service-cidr=10.1.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
```
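The service CIDR, the pod CIDR, and the node network should not overlap one another. The following is a rough sketch of a pre-flight check written in plain POSIX shell. The helper names `ip_to_int` and `cidr_overlap` are illustrative, and the node network `192.168.56.0/24` is an assumption inferred from the node IPs (the notes do not state the netmask).

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  _old_ifs=$IFS; IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$_old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if two CIDR blocks overlap: they do exactly when both
# network addresses agree on the shorter of the two prefixes.
cidr_overlap() {
  _ip1=${1%/*}; _len1=${1#*/}
  _ip2=${2%/*}; _len2=${2#*/}
  if [ "$_len1" -lt "$_len2" ]; then _min=$_len1; else _min=$_len2; fi
  _mask=$(( (4294967295 << (32 - $_min)) & 4294967295 ))
  [ $(( $(ip_to_int "$_ip1") & $_mask )) -eq $(( $(ip_to_int "$_ip2") & $_mask )) ]
}

pod_cidr=10.244.0.0/16      # --pod-network-cidr
service_cidr=10.1.0.0/16    # --service-cidr
node_cidr=192.168.56.0/24   # assumed from the node IPs; adjust to the real netmask

status=ok
cidr_overlap "$pod_cidr" "$service_cidr" && status=overlap
cidr_overlap "$pod_cidr" "$node_cidr" && status=overlap
cidr_overlap "$service_cidr" "$node_cidr" && status=overlap
echo "CIDR check: $status"
```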

Alternatively, pull the required images ahead of time:

```
kubeadm config images pull --image-repository=registry.aliyuncs.com/google_containers
```

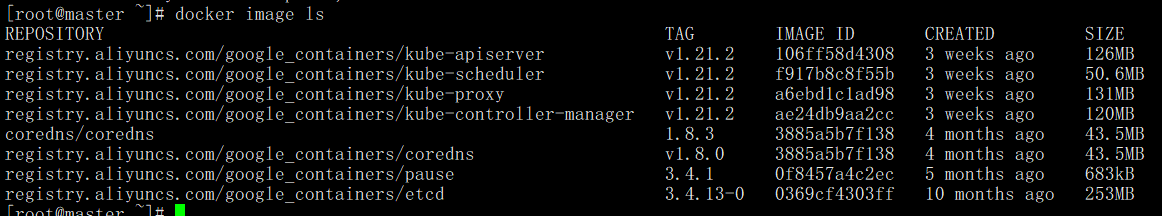

Initialization pulls the images required by the k8s master node.

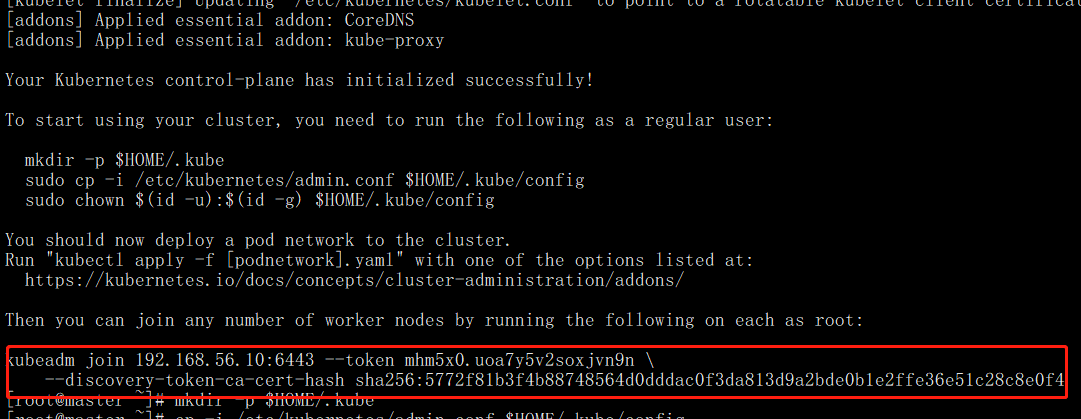

On success, the output includes the join command for worker nodes:

```
kubeadm join 192.168.56.10:6443 --token u8n2h8.0g939ea9ocjb4kpr \
  --discovery-token-ca-cert-hash sha256:f302e0db3e31526748349f67da28f8d099c7d05765059379d885df3f8df13d04
```
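The token and hash are specific to each `kubeadm init` run, and bootstrap tokens expire (after 24 hours by default); `kubeadm token create --print-join-command` on the master prints a fresh join command. When the values are copied by hand, a quick format check can catch truncation before running the join. The sketch below is only illustrative: the helper names `is_bootstrap_token` and `is_ca_cert_hash` are made up for this example.

```shell
# Hypothetical helper: succeed only if the argument looks like a kubeadm bootstrap token,
# i.e. 6 characters, a dot, then 16 characters, all lowercase letters or digits.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Hypothetical helper: succeed only if the argument is "sha256:" followed by 64 hex digits.
is_ca_cert_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Values taken from the join command shown above.
token='u8n2h8.0g939ea9ocjb4kpr'
ca_hash='sha256:f302e0db3e31526748349f67da28f8d099c7d05765059379d885df3f8df13d04'

if is_bootstrap_token "$token" && is_ca_cert_hash "$ca_hash"; then
  join_cmd="kubeadm join 192.168.56.10:6443 --token $token --discovery-token-ca-cert-hash $ca_hash"
else
  join_cmd=''
fi
echo "${join_cmd:-token or hash is malformed; regenerate with: kubeadm token create --print-join-command}"
```

The check only validates the shape of the values; it cannot tell whether the token is still valid on the master.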

**2. Configure kubectl**

```
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
```

View the cluster information:

```
[root@master ~]# kubectl cluster-info
Kubernetes control plane is running at https://192.168.56.10:6443
CoreDNS is running at https://192.168.56.10:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
```

**3. Join the worker nodes**

After initialization completes, the join command for worker nodes is printed; run it on each worker node.

```
kubeadm join 192.168.56.10:6443 --token mhm5x0.uoa7y5v2soxjvn9n \
  --discovery-token-ca-cert-hash sha256:5772f81b3f4b88748564d0dddac0f3da813d9a2bde0b1e2ffe36e51c28c8e0f4
```

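**4. Install the CNI plugin**

If the URL is unreachable because of network restrictions, download the manifest file first and then apply the local copy.

```
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```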

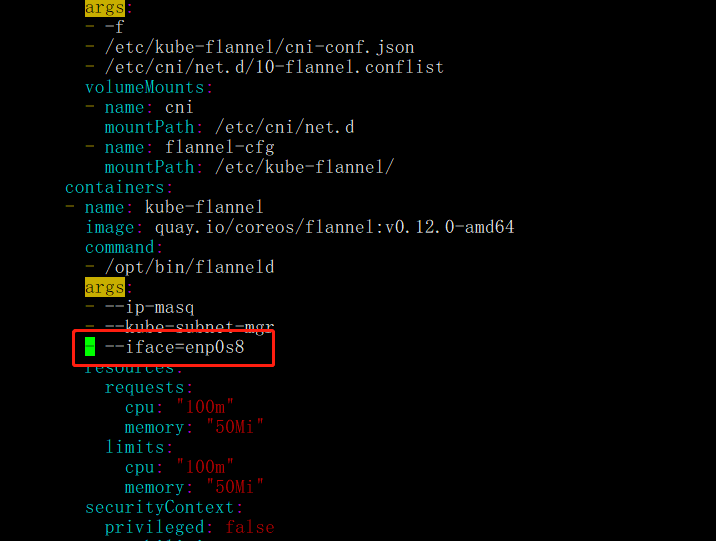

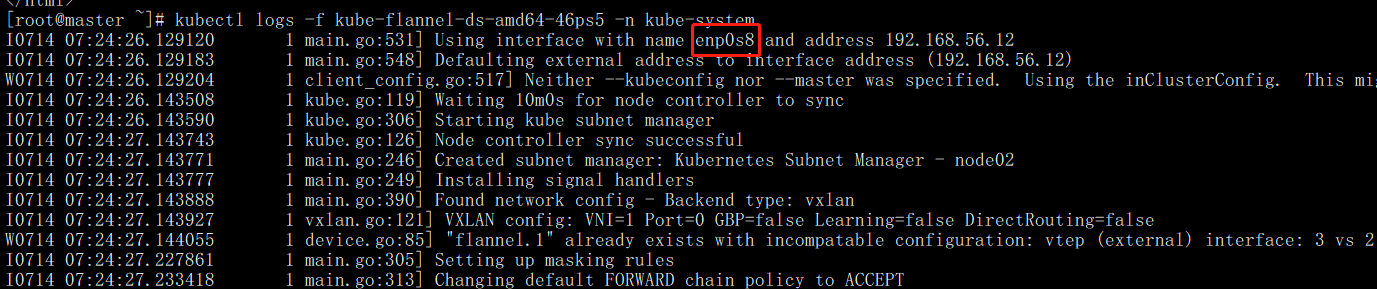

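**Note:** on machines with two network interfaces, specify the interface that can forward packets between nodes.

```
vim kube-flannel.yml
# under the containers section, add the interface binding to the args
- --iface=enp0s8
```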
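The manual edit can also be scripted. The sketch below assumes the flannel container's `args` list contains a `- --kube-subnet-mgr` line, as in the excerpt used for the demo; check the downloaded manifest before relying on it. The helper name `add_flannel_iface` is hypothetical.

```shell
# Hypothetical helper: print the manifest with "- --iface=<nic>" added right after
# the "- --kube-subnet-mgr" argument, reusing that line's indentation.
add_flannel_iface() {
  awk -v iface="$2" '
    { print }
    /^[ \t]*- --kube-subnet-mgr[ \t]*$/ {
      indent = $0
      sub(/-.*/, "", indent)            # keep only the leading whitespace
      print indent "- --iface=" iface
    }
  ' "$1"
}

# Demo on an assumed excerpt of the kube-flannel container spec.
manifest="$(mktemp)"
cat > "$manifest" << 'EOF'
        args:
        - --ip-masq
        - --kube-subnet-mgr
EOF
patched="$(add_flannel_iface "$manifest" enp0s8)"
printf '%s\n' "$patched"
rm -f "$manifest"
```

For real use, redirect the output to a new file (for example a patched copy of `kube-flannel.yml`) and review it before applying.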

If flannel has already been deployed, first remove the existing deployment with `kubectl delete -f kube-flannel.yml`, then redeploy with `kubectl apply -f kube-flannel.yml`.

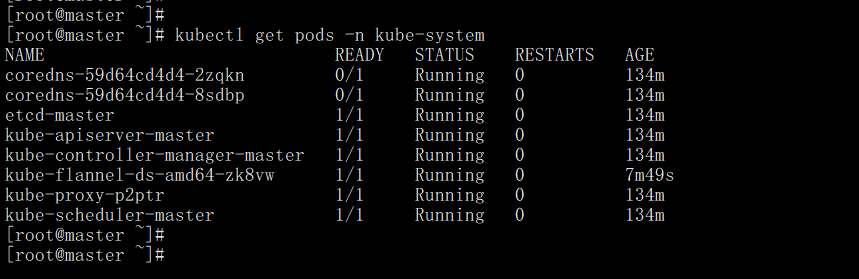

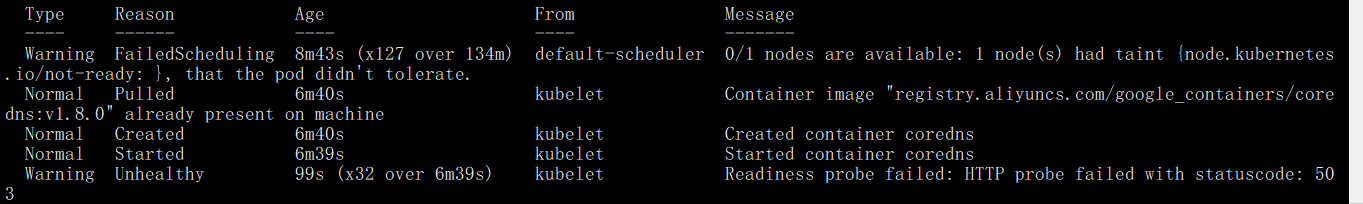

After installation the status changes, but there are still errors:

`kubectl describe pod coredns-59d64cd4d4-2zqkn -n kube-system`

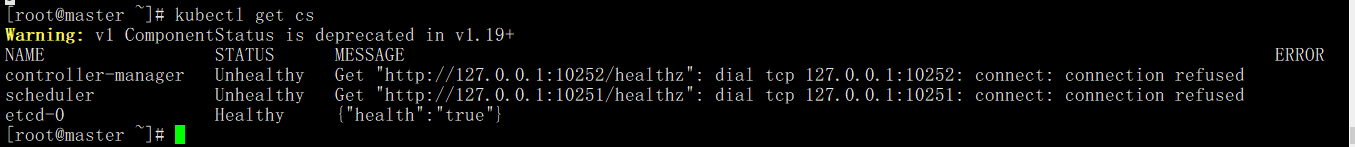

Checking the master components shows that controller-manager and scheduler are unhealthy.

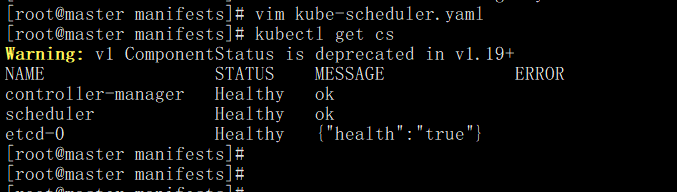

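Solution:

Go to `/etc/kubernetes/manifests` (`cd /etc/kubernetes/manifests`).

Edit `kube-controller-manager.yaml` and `kube-scheduler.yaml` with `vim` and comment out the `--port=0` line in each file.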
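The same edit can be scripted with `sed`. This is a minimal sketch, assuming the argument appears on its own line as `- --port=0`; the helper name `comment_out_port_zero` is hypothetical. The temporary file is created outside the manifests directory on purpose, since kubelet watches that directory for static pod manifests.

```shell
# Hypothetical helper: comment out the "- --port=0" argument in a static pod manifest.
comment_out_port_zero() {
  _tmp="$(mktemp)" &&
  sed 's/^\([[:space:]]*\)\(- --port=0\)[[:space:]]*$/\1# \2/' "$1" > "$_tmp" &&
  cat "$_tmp" > "$1" &&   # overwrite in place, keeping the file's owner and mode
  rm -f "$_tmp"
}

# Demo on an assumed excerpt; for real use pass
# /etc/kubernetes/manifests/kube-controller-manager.yaml and kube-scheduler.yaml.
manifest="$(mktemp)"
cat > "$manifest" << 'EOF'
    - --leader-elect=true
    - --port=0
EOF
comment_out_port_zero "$manifest"
cat "$manifest"
```

kubelet recreates the static pods when their manifest files change, so no manual restart of the two components should be needed.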

Start a pod as a test:

```
[root@master manifests]# kubectl run nginx --image=nginx --port=80
pod/nginx created
[root@master manifests]# kubectl get pods
NAME    READY   STATUS              RESTARTS   AGE
nginx   0/1     ContainerCreating   0          8s
```

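#### Pods cannot communicate because the VMs have two network interfaces

By default flannel binds to the first network interface and uses it for forwarding. On these VMs the first interface is the NAT adapter used for external access, which cannot forward packets between nodes, so the default has to be changed with the `--iface` setting shown above.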

# 2. High-availability deployment

# 3. Uninstalling the cluster

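~~~
# Reset the node (undo kubeadm init / join)
kubeadm reset

# Remove the kube* rpm packages (-r: do nothing if none are installed)
rpm -qa | grep kube | xargs -r rpm --nodeps -e

# Force-remove all docker images
docker images -qa | xargs -r docker rmi -f
~~~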
