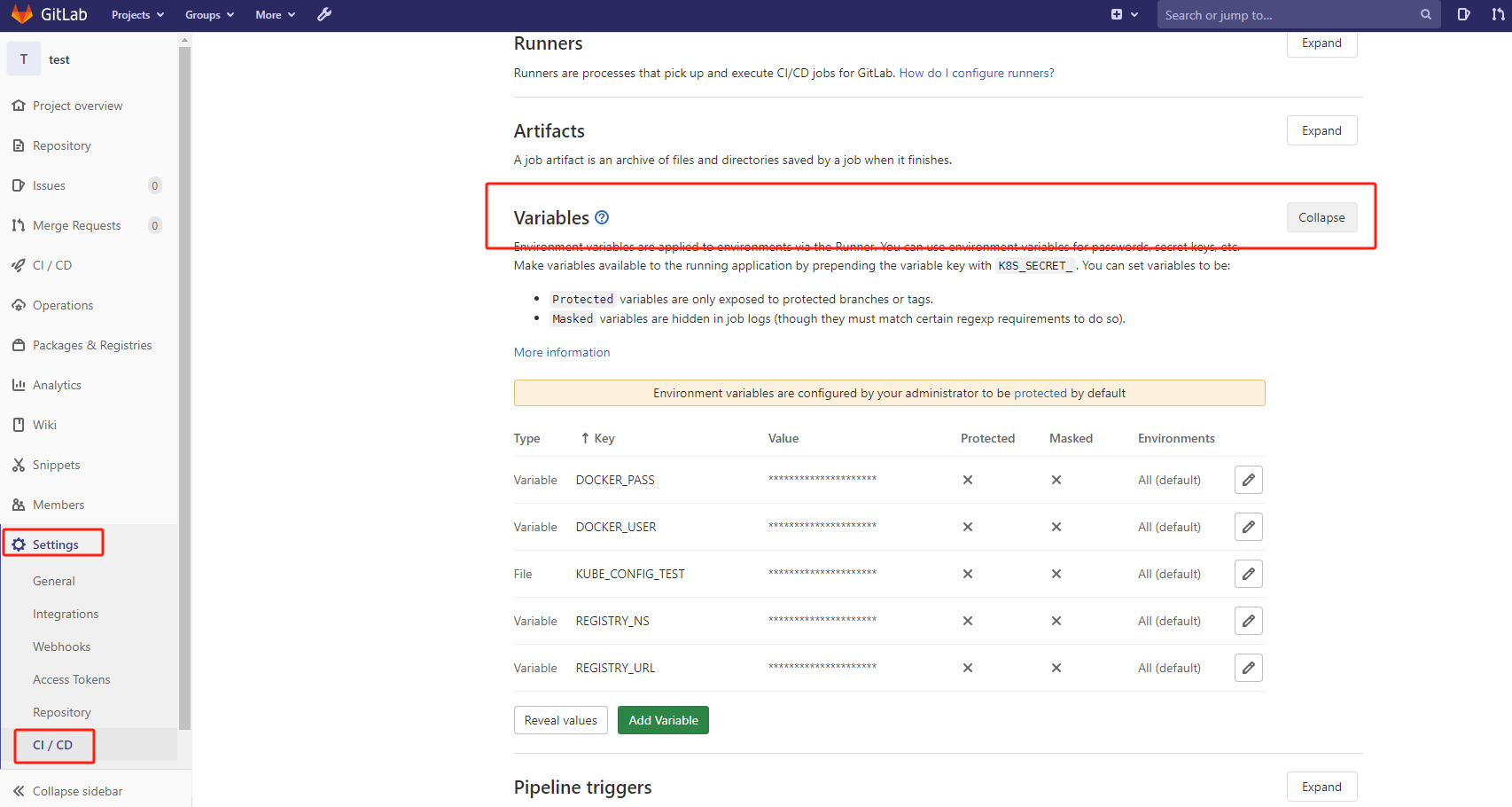

1. Create the following variables in the repository's CI/CD variable settings:

```
Type      Key               Value                                 State  Masked
Variable  DOCKER_USER       admin                                 off    off
Variable  DOCKER_PASS       boge666                               off    off
Variable  REGISTRY_URL      harbor.boge.com                       off    off
Variable  REGISTRY_NS       product                               off    off
File      KUBE_CONFIG_TEST  (contents of the k8s kubeconfig file) off    off
```

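Pay attention to the Type column: the last entry, KUBE_CONFIG_TEST, must be of type File.

The value of KUBE_CONFIG_TEST is the kubeconfig file for the target namespace, which is generated by the following shell script: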

```
#!/bin/bash
#
# This script is based on https://jeremievallee.com/2018/05/28/kubernetes-rbac-namespace-user.html
# K8s RBAC doc:     https://kubernetes.io/docs/reference/access-authn-authz/rbac
# GitLab CI/CD doc: https://docs.gitlab.com/ee/user/permissions.html#running-pipelines-on-protected-branches
#
# In honor of the remarkable Windson

BASEDIR="$(dirname "$0")"
folder="$BASEDIR/kube_config"

echo -e "All namespaces are listed here: \n$(kubectl get ns | awk 'NR!=1{print $1}')"
echo "Endpoint server: on the local network you can use $(kubectl cluster-info | awk '/Kubernetes/{print $NF}')"

namespace=$1
# Strip a leading http:// or https:// from the endpoint
endpoint=$(echo "$2" | sed -e 's,https\?://,,g')

if [[ -z "$endpoint" || -z "$namespace" ]]; then
    echo "Usage: $(basename "$0") NAMESPACE ENDPOINT"
    exit 1
fi

# Create the namespace only if it does not exist yet (exact-name match)
if ! kubectl get ns | awk 'NR!=1{print $1}' | grep -qxF -- "$namespace"; then
    kubectl create ns "$namespace"
else
    echo "namespace: $namespace already exists."
fi

# ServiceAccount + Role + RoleBinding + ResourceQuota for the namespace
cat <<EOF | kubectl apply -f -
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: $namespace-user
  namespace: $namespace
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: $namespace-user-full-access
  namespace: $namespace
rules:
- apiGroups: ['', 'extensions', 'apps', 'metrics.k8s.io']
  resources: ['*']
  verbs: ['*']
- apiGroups: ['batch']
  resources:
  - jobs
  - cronjobs
  verbs: ['*']
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: $namespace-user-view
  namespace: $namespace
subjects:
- kind: ServiceAccount
  name: $namespace-user
  namespace: $namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: $namespace-user-full-access
---
# https://kubernetes.io/zh/docs/concepts/policy/resource-quotas/
apiVersion: v1
kind: ResourceQuota
metadata:
  name: $namespace-compute-resources
  namespace: $namespace
spec:
  hard:
    pods: "10"
    services: "10"
    persistentvolumeclaims: "5"
    requests.cpu: "1"
    requests.memory: 2Gi
    limits.cpu: "2"
    limits.memory: 4Gi
EOF

kubectl -n "$namespace" describe quota "$namespace-compute-resources"

mkdir -p "$folder"

# Read the ServiceAccount token and the cluster CA from the SA's secret
tokenName=$(kubectl get sa "$namespace-user" -n "$namespace" -o "jsonpath={.secrets[0].name}")
token=$(kubectl get secret "$tokenName" -n "$namespace" -o "jsonpath={.data.token}" | base64 --decode)
certificate=$(kubectl get secret "$tokenName" -n "$namespace" -o "jsonpath={.data['ca\.crt']}")

# Write the kubeconfig for this namespace
cat > "$folder/$namespace.kube.conf" <<EOF
apiVersion: v1
kind: Config
preferences: {}
clusters:
- cluster:
    certificate-authority-data: $certificate
    server: https://$endpoint
  name: $namespace-cluster
users:
- name: $namespace-user
  user:
    as-user-extra: {}
    client-key-data: $certificate
    token: $token
contexts:
- context:
    cluster: $namespace-cluster
    namespace: $namespace
    user: $namespace-user
  name: $namespace
current-context: $namespace
EOF
```
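The script strips any `http://` or `https://` prefix from its second argument and then writes `server: https://$endpoint` into the kubeconfig. Below is a minimal sketch of that one step in isolation, using the portable `sed -E` form rather than the GNU-specific `\?`. The helper name `strip_scheme` and the address `10.0.1.201:6443` are illustrative assumptions, not values from the original setup.

```shell
#!/bin/sh
# strip_scheme: hypothetical helper mirroring the script's endpoint clean-up.
strip_scheme() {
    printf '%s\n' "$1" | sed -E 's,https?://,,g'
}

strip_scheme "https://10.0.1.201:6443"   # prints 10.0.1.201:6443
strip_scheme "10.0.1.201:6443"           # already bare, printed unchanged
```

Either form of the endpoint can therefore be passed as the second argument, after the namespace name.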

2. Next, create the Dockerfile used to build the package. Here we build a package written in Go.

```
# Dockerfile
# a demo for containerizing golang web apps
#
# @author: boge
# @mail: Wx:bogeit

# stage 1: build src code to binary
FROM harbor.boge.com/library/golang:1.19.11-alpine3.18 AS builder

ENV GOPROXY=https://goproxy.cn

COPY *.go /app/

RUN cd /app \
    && go mod init github.com/bogeit/golangtest \
    && go mod tidy \
    && CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -ldflags "-s -w" -o hellogo .

# stage 2: use alpine as base image
FROM alpine:3.18

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && \
    apk update && \
    apk --no-cache add tzdata ca-certificates && \
    cp -f /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
    # apk del tzdata && \
    rm -rf /var/cache/apk/*

COPY --from=builder /app/hellogo /hellogo

CMD ["/hellogo"]
```

Prepare the Go source file, hellogo.go:

```
// hellogo.go
package main

import (
	"fmt"
	"log"
	"math/rand"
	"net/http"
	"time"
)

var appVersion = "23.10.27.01" // Default/fallback version

// NowTime formats t as "YYYY-MM-DD hh:mm:ss".
func NowTime(t time.Time) string {
	nowtime := fmt.Sprintf("%4d-%02d-%02d %02d:%02d:%02d",
		t.Year(), t.Month(), t.Day(),
		t.Hour(), t.Minute(), t.Second())
	return nowtime
}

// GetRandomString returns l random characters from [0-9a-z].
func GetRandomString(l int) string {
	str := "0123456789abcdefghijklmnopqrstuvwxyz"
	bytes := []byte(str)
	result := []byte{}
	r := rand.New(rand.NewSource(time.Now().UnixNano()))
	for i := 0; i < l; i++ {
		result = append(result, bytes[r.Intn(len(bytes))])
	}
	return string(result)
}

// getFrontpage answers "/" with a random instance id, the version and the current time.
func getFrontpage(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintf(w, "Hello, Go! I'm instance %s running version %s at %s\n", GetRandomString(6), appVersion, NowTime(time.Now()))
}

// health answers "/health" with HTTP 200 and the body "ok".
func health(w http.ResponseWriter, r *http.Request) {
	w.WriteHeader(http.StatusOK)
	fmt.Fprintf(w, "%s\n", "ok")
}

// getVersion answers "/version" with the application version.
func getVersion(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintf(w, "%s\n", appVersion)
}

func main() {
	rand.Seed(time.Now().UTC().UnixNano())
	http.HandleFunc("/", getFrontpage)
	http.HandleFunc("/health", health)
	http.HandleFunc("/version", getVersion)
	log.Fatal(http.ListenAndServe(":5000", nil))
}
```

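Prepare the project's automation configuration file, .gitlab-ci.yml.

The IP in DOCKER_HOST inside the file must be changed to the ClusterIP of your own dind service:

`[root@node-111 test]# kubectl -n kube-system get svc | grep dind`

`dind   ClusterIP   10.68.11.146   <none>   2375/TCP   21h`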

```
stages:
  - build
  - deploy
  - rollback

# tag names must look like: 20.11.21.01
variables:
  namecb: "flask-test"
  svcport: "5000"
  replicanum: "2"
  ingress: "flask-test.boge.com"
  certname: "mytls"
  CanarylIngressNum: "20"

.deploy_k8s: &deploy_k8s |
  # Pick the canary or the normal template; default to 0 when the flag is unset
  if [ "${CANARY_CB:-0}" -eq 1 ]; then
    cp -arf .project-name-canary.yaml ${namecb}-${CI_COMMIT_TAG}.yaml
    sed -ri "s+CanarylIngressNum+${CanarylIngressNum}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
    sed -ri "s+NomalIngressNum+$(expr 100 - ${CanarylIngressNum})+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  else
    cp -arf .project-name.yaml ${namecb}-${CI_COMMIT_TAG}.yaml
  fi
  # Fill in the template placeholders
  sed -ri "s+projectnamecb.boge.com+${ingress}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  sed -ri "s+projectnamecb+${namecb}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  sed -ri "s+5000+${svcport}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  sed -ri "s+replicanum+${replicanum}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  sed -ri "s+mytls+${certname}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  sed -ri "s+mytagcb+${CI_COMMIT_TAG}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  sed -ri "s+harbor.boge.com/library+${IMG_URL}+g" ${namecb}-${CI_COMMIT_TAG}.yaml
  cat ${namecb}-${CI_COMMIT_TAG}.yaml
  [ -d ~/.kube ] || mkdir ~/.kube
  echo "$KUBE_CONFIG" > ~/.kube/config
  # A normal release removes any leftover canary deployment and service first
  if [ "${NORMAL_CB:-0}" -eq 1 ]; then
    if kubectl get deployments.|grep -w ${namecb}-canary &>/dev/null; then
      kubectl delete deployments.,svc ${namecb}-canary
    fi
  fi
  kubectl apply -f ${namecb}-${CI_COMMIT_TAG}.yaml --record
  echo
  echo
  echo "============================================================="
  echo "                    Rollback Index List"
  echo "============================================================="
  kubectl rollout history deployment ${namecb}|tail -5|awk -F"[ =]+" '{print $1"\t"$5}'|sed '$d'|sed '$d'|sort -r|awk '{print $NF}'|awk '$0=""NR".   "$0'

.rollback_k8s: &rollback_k8s |
  [ -d ~/.kube ] || mkdir ~/.kube
  echo "$KUBE_CONFIG" > ~/.kube/config
  # Select the ROLL_NUM-th revision before the current one
  last_version_command=$( kubectl rollout history deployment ${namecb}|tail -5|awk -F"[ =]+" '{print $1"\t"$5}'|sed '$d'|sed '$d'|tail -${ROLL_NUM}|head -1 )
  last_version_num=$( echo ${last_version_command}|awk '{print $1}' )
  last_version_name=$( echo ${last_version_command}|awk '{print $2}' )
  kubectl rollout undo deployment ${namecb} --to-revision=$last_version_num
  echo $last_version_num
  echo $last_version_name
  kubectl rollout history deployment ${namecb}

build:
  stage: build
  retry: 2
  variables:
    # use dind.yaml to deploy the dind service on k8s
    DOCKER_HOST: tcp://10.68.11.146:2375/
    DOCKER_DRIVER: overlay2
    DOCKER_TLS_CERTDIR: ""
  ##services:
  ##- docker:dind
  before_script:
    - docker login ${REGISTRY_URL} -u "$DOCKER_USER" -p "$DOCKER_PASS"
  script:
    - docker pull ${REGISTRY_URL}/${REGISTRY_NS}/${namecb}:latest || true
    - docker build --network host --cache-from ${REGISTRY_URL}/${REGISTRY_NS}/${namecb}:latest --tag ${REGISTRY_URL}/${REGISTRY_NS}/${namecb}:$CI_COMMIT_TAG --tag ${REGISTRY_URL}/${REGISTRY_NS}/${namecb}:latest .
    - docker push ${REGISTRY_URL}/${REGISTRY_NS}/${namecb}:$CI_COMMIT_TAG
    - docker push ${REGISTRY_URL}/${REGISTRY_NS}/${namecb}:latest
  after_script:
    - docker logout ${REGISTRY_URL}
  tags:
    - "docker"
  only:
    - tags

#--------------------------K8S DEPLOY--------------------------------------------------

BOGE-deploy:
  stage: deploy
  image: harbor.boge.com/library/kubectl:v1.19.9
  variables:
    KUBE_CONFIG: "$KUBE_CONFIG_TEST"
    IMG_URL: "${REGISTRY_URL}/${REGISTRY_NS}"
    NORMAL_CB: 1
  script:
    - *deploy_k8s
  when: manual
  only:
    - tags

# canary start
BOGE-canary-deploy:
  stage: deploy
  image: harbor.boge.com/library/kubectl:v1.19.9
  variables:
    KUBE_CONFIG: "$KUBE_CONFIG_TEST"
    IMG_URL: "${REGISTRY_URL}/${REGISTRY_NS}"
    CANARY_CB: 1
  script:
    - *deploy_k8s
  when: manual
  only:
    - tags
# canary end

BOGE-rollback-1:
  stage: rollback
  image: harbor.boge.com/library/kubectl:v1.19.9
  variables:
    KUBE_CONFIG: "$KUBE_CONFIG_TEST"
    ROLL_NUM: 1
  script:
    - *rollback_k8s
  when: manual
  only:
    - tags

BOGE-rollback-2:
  stage: rollback
  image: harbor.boge.com/library/kubectl:v1.19.9
  variables:
    KUBE_CONFIG: "$KUBE_CONFIG_TEST"
    ROLL_NUM: 2
  script:
    - *rollback_k8s
  when: manual
  only:
    - tags

BOGE-rollback-3:
  stage: rollback
  image: harbor.boge.com/library/kubectl:v1.19.9
  variables:
    KUBE_CONFIG: "$KUBE_CONFIG_TEST"
    ROLL_NUM: 3
  script:
    - *rollback_k8s
  when: manual
  only:
    - tags
```
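The rollback jobs pick their target revision by slicing the output of `kubectl rollout history` with `tail`, `awk` and `sed`. The sketch below replays that pipeline against canned text so the selection logic can be followed without a cluster. The sample history (including its trailing blank line) and the helper names `sample_history` and `pick_revision` are assumptions made for illustration; real output depends on your cluster and on every release having been applied with `--record`.

```shell
#!/bin/sh
# sample_history: made-up text in the assumed shape of `kubectl rollout history`
# output, ending with a blank line.
sample_history() {
    cat <<'EOF'
deployment.apps/flask-test
REVISION  CHANGE-CAUSE
1         kubectl apply --filename=flask-test-23.10.27.01.yaml --record=true
2         kubectl apply --filename=flask-test-23.10.27.02.yaml --record=true
3         kubectl apply --filename=flask-test-23.10.27.03.yaml --record=true
4         kubectl apply --filename=flask-test-23.10.27.04.yaml --record=true
5         kubectl apply --filename=flask-test-23.10.27.05.yaml --record=true

EOF
}

# pick_revision N: read the history on stdin and print "<revision><TAB><manifest>"
# for the N-th release before the current one.
pick_revision() {
    tail -n 5 |
        awk -F'[ =]+' '{print $1"\t"$5}' |   # keep revision number and manifest name
        sed '$d' | sed '$d' |                # drop the blank line, then the current revision
        tail -n "$1" | head -n 1
}

sample_history | pick_revision 1   # revision 4, the release just before the current one
sample_history | pick_revision 3   # revision 2
```

With this window of five lines, only the three releases preceding the current one are reachable, which matches the three rollback jobs defined in the pipeline.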

Prepare the Kubernetes deployment template file, .project-name.yaml:

```
---
# SVC
kind: Service
apiVersion: v1
metadata:
  labels:
    kae: "true"
    kae-app-name: projectnamecb
    kae-type: app
  name: projectnamecb
spec:
  selector:
    kae: "true"
    kae-app-name: projectnamecb
    kae-type: app
  ports:
    - name: http-port
      port: 80
      protocol: TCP
      targetPort: 5000
#      nodePort: 12345
#  type: NodePort

---
# Ingress
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  labels:
    kae: "true"
    kae-app-name: projectnamecb
    kae-type: app
  name: projectnamecb
spec:
  tls:
  - hosts:
    - projectnamecb.boge.com
    secretName: mytls
  rules:
  - host: projectnamecb.boge.com
    http:
      paths:
      - path: /
        backend:
          serviceName: projectnamecb
          servicePort: 80

---
# Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: projectnamecb
  labels:
    kae: "true"
    kae-app-name: projectnamecb
    kae-type: app
spec:
  replicas: replicanum
  selector:
    matchLabels:
      kae-app-name: projectnamecb
  template:
    metadata:
      labels:
        kae: "true"
        kae-app-name: projectnamecb
        kae-type: app
    spec:
      containers:
        - name: projectnamecb
          image: harbor.boge.com/library/projectnamecb:mytagcb
          env:
            - name: TZ
              value: Asia/Shanghai
          ports:
            - containerPort: 5000
          readinessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 5000
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 3
            successThreshold: 1
            failureThreshold: 3
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 5000
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 3
            successThreshold: 1
            failureThreshold: 3
          resources:
            requests:
              cpu: 0.3
              memory: 0.5Gi
            limits:
              cpu: 0.3
              memory: 0.5Gi
      imagePullSecrets:
        - name: boge-secret
```
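The names `projectnamecb`, `projectnamecb.boge.com`, `mytagcb` and `harbor.boge.com/library` in this template are placeholders that the `sed` calls in .gitlab-ci.yml replace at deploy time. Here is a small sketch of that substitution on a three-line excerpt, assuming the example values `flask-test`, `flask-test.boge.com`, `23.10.27.01` and `harbor.boge.com/product`. The helper `render_template` is hypothetical and filters stdin instead of editing a file in place as the pipeline does.

```shell
#!/bin/sh
# render_template NAME HOST TAG IMG_URL: hypothetical helper that rewrites the
# template placeholders read from stdin and prints the result.
render_template() {
    sed -E \
        -e "s+projectnamecb.boge.com+$2+g" \
        -e "s+projectnamecb+$1+g" \
        -e "s+mytagcb+$3+g" \
        -e "s+harbor.boge.com/library+$4+g"
}

render_template flask-test flask-test.boge.com 23.10.27.01 harbor.boge.com/product <<'EOF'
host: projectnamecb.boge.com
name: projectnamecb
image: harbor.boge.com/library/projectnamecb:mytagcb
EOF
```

The order matters: the host placeholder has to be replaced before the bare `projectnamecb`, otherwise the host would become `flask-test.boge.com` only by coincidence of the chosen names rather than from the `ingress` variable.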

Prepare the template file for canary deployments on K8s, .project-name-canary.yaml:

```
---
# SVC
kind: Service
apiVersion: v1
metadata:
  labels:
    kae: "true"
    kae-app-name: projectnamecb-canary
    kae-type: app
  name: projectnamecb-canary
spec:
  selector:
    kae: "true"
    kae-app-name: projectnamecb-canary
    kae-type: app
  ports:
    - name: http-port
      port: 80
      protocol: TCP
      targetPort: 5000
#      nodePort: 12345
#  type: NodePort

---
# Ingress
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  labels:
    kae: "true"
    kae-app-name: projectnamecb-canary
    kae-type: app
  name: projectnamecb
  annotations:
    nginx.ingress.kubernetes.io/service-weight: |
      projectnamecb: NomalIngressNum, projectnamecb-canary: CanarylIngressNum
spec:
  tls:
  - hosts:
    - projectnamecb.boge.com
    secretName: mytls
  rules:
  - host: projectnamecb.boge.com
    http:
      paths:
      - path: /
        backend:
          serviceName: projectnamecb
          servicePort: 80
      - path: /
        backend:
          serviceName: projectnamecb-canary
          servicePort: 80

---
# Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: projectnamecb-canary
  labels:
    kae: "true"
    kae-app-name: projectnamecb-canary
    kae-type: app
spec:
  replicas: replicanum
  selector:
    matchLabels:
      kae-app-name: projectnamecb-canary
  template:
    metadata:
      labels:
        kae: "true"
        kae-app-name: projectnamecb-canary
        kae-type: app
    spec:
      containers:
        - name: projectnamecb-canary
          image: harbor.boge.com/library/projectnamecb:mytagcb
          env:
            - name: TZ
              value: Asia/Shanghai
          ports:
            - containerPort: 5000
          readinessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 5000
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 3
            successThreshold: 1
            failureThreshold: 3
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 5000
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 3
            successThreshold: 1
            failureThreshold: 3
          resources:
            requests:
              cpu: 0.3
              memory: 0.5Gi
            limits:
              cpu: 0.3
              memory: 0.5Gi
      imagePullSecrets:
        - name: boge-secret
```
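In the canary template, the pipeline fills `CanarylIngressNum` from the variable of the same name and sets `NomalIngressNum` to the remainder out of 100. A minimal sketch of that arithmetic and of the resulting annotation value is shown below. The helper `canary_weights` is hypothetical, and it uses shell arithmetic where the pipeline uses `expr`.

```shell
#!/bin/sh
# canary_weights NAME CANARY_PERCENT: hypothetical helper that prints the
# service-weight value the template would contain after substitution.
canary_weights() {
    normal=$((100 - $2))   # same result as: expr 100 - $2
    printf '%s: %s, %s-canary: %s\n' "$1" "$normal" "$1" "$2"
}

canary_weights flask-test 20   # prints: flask-test: 80, flask-test-canary: 20
```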

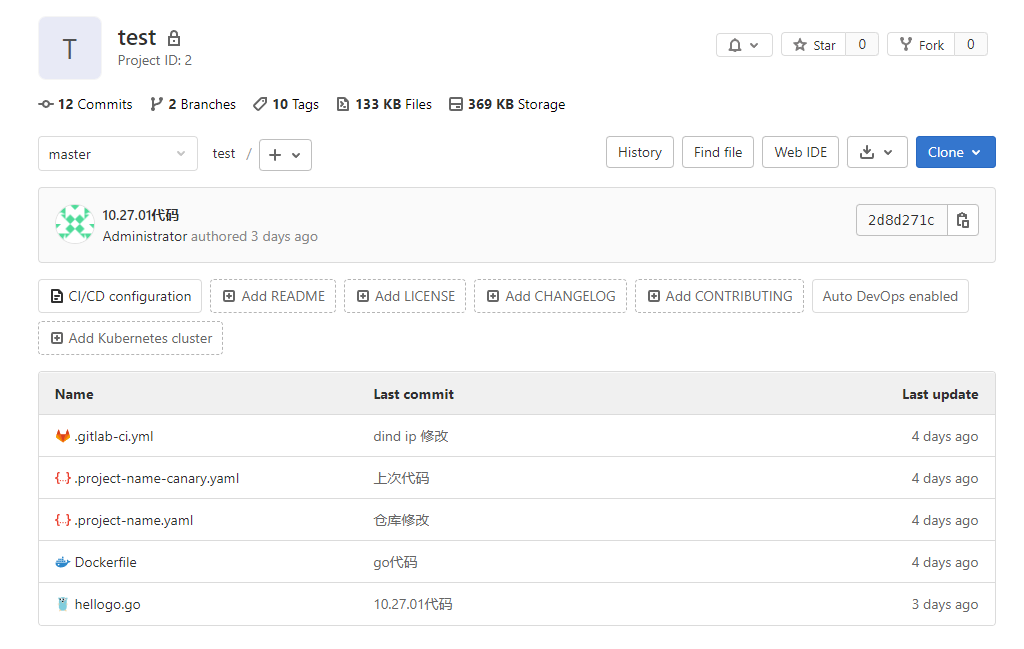

At this point, all the required files have been uploaded to the repository.

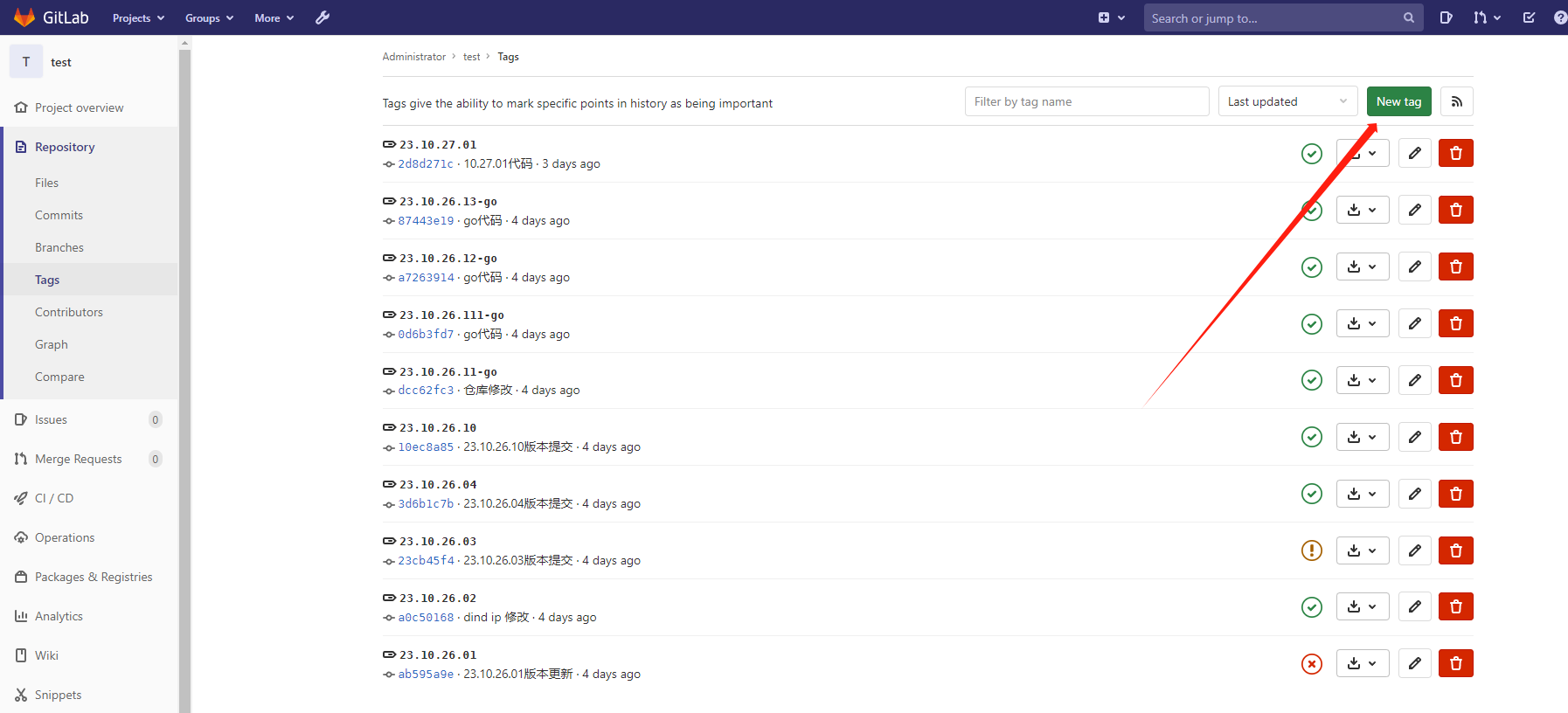

Next, create a tag to trigger the build:

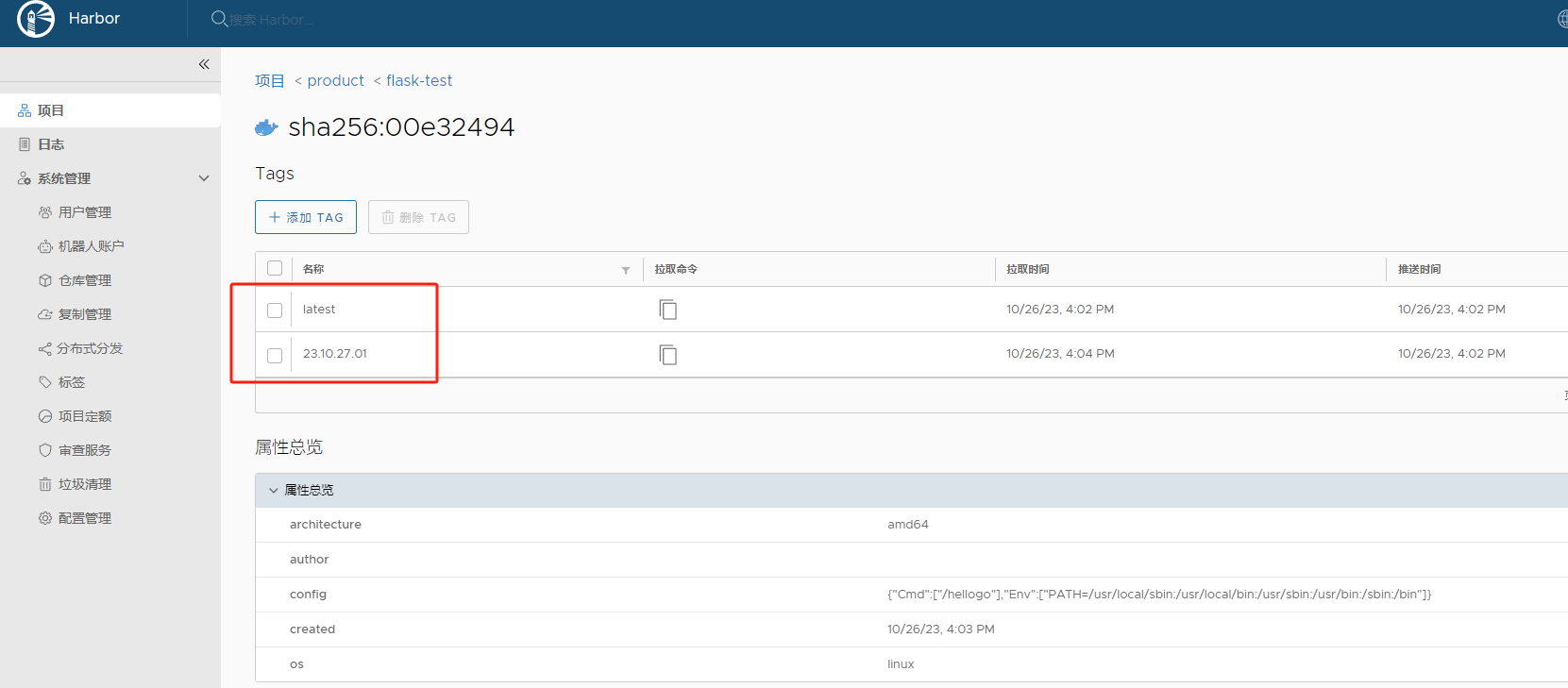

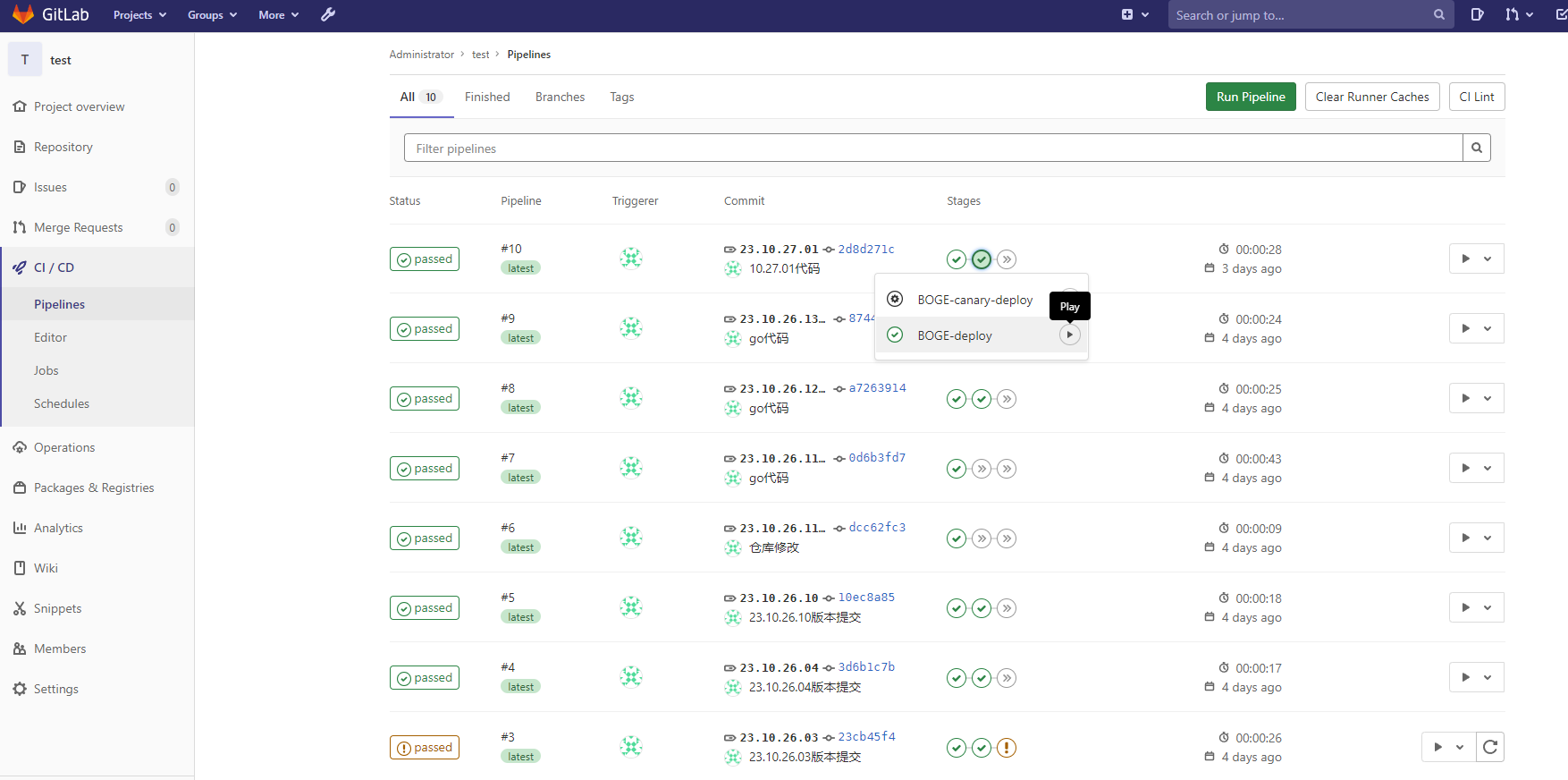
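The comment in .gitlab-ci.yml asks for tag names shaped like `20.11.21.01`, and the rollback list is built from manifest names that embed the tag. The check below is a small sketch you could run before pushing a tag. The helper `is_release_tag` is hypothetical, and reading the pattern as four two-digit groups is an assumption based on that single example.

```shell
#!/bin/sh
# is_release_tag TAG: hypothetical helper, succeeds when TAG looks like NN.NN.NN.NN.
is_release_tag() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]{2}\.[0-9]{2}\.[0-9]{2}\.[0-9]{2}$'
}

for tag in 23.10.27.01 v1.0.0; do
    if is_release_tag "$tag"; then
        echo "$tag: ok"
    else
        echo "$tag: does not match NN.NN.NN.NN"
    fi
done
```

A tag that passes can then be created from the GitLab UI as shown above, or from the command line with `git tag 23.10.27.01` followed by `git push origin 23.10.27.01`, assuming the remote is named `origin`.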

Once the build has finished, you can check the image in the registry, then click Play to deploy it.

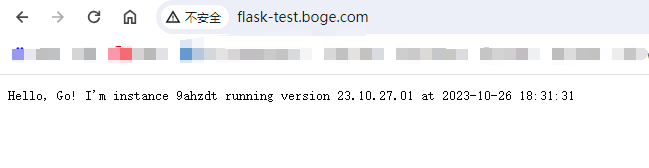

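After the deployment completes, you can check the result on the cluster.

You can also add a resolution entry for the ingress host to your local hosts file and test from there.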
