*****

## 1 Using pipelines

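The `ITEM_PIPELINES` setting is a dictionary, which already suggests that more than one pipeline can be registered, and a project can indeed define several.

Why several pipelines may be needed:

1 A project may contain several spiders, and different pipelines can handle the items from different spiders

2 The items from a single spider may need several different operations, such as being stored in different databases

Notes:

1 The smaller a pipeline's weight, the higher its priority (it runs earlier)

2 The `process_item` method of a pipeline must keep exactly that name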
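The two notes above can be sketched as follows. This is a minimal illustration, not code from the original project: the module path `myspider.pipelines`, the class names `CleanPipeline` and `SavePipeline`, and the helper `run_pipelines` are all hypothetical. The helper only imitates the ordering that Scrapy applies, so that the snippet runs without a crawl.

```python
# settings.py (sketch): a lower number means the pipeline runs earlier
ITEM_PIPELINES = {
    "myspider.pipelines.CleanPipeline": 300,
    "myspider.pipelines.SavePipeline": 400,
}


# pipelines.py (sketch)
class CleanPipeline:
    def process_item(self, item, spider):
        # Only touch items that come from the 'qb' spider
        if spider.name == "qb":
            item["content"] = item["content"].strip()
        # Return the item so that the next pipeline receives it
        return item


class SavePipeline:
    def __init__(self):
        self.saved = []  # stand-in for a real database

    def process_item(self, item, spider):
        self.saved.append(dict(item))
        return item


# Rough imitation of how items flow through pipelines in weight order
class FakeSpider:
    name = "qb"


def run_pipelines(item, spider, pipelines_by_weight):
    for _, pipeline in sorted(pipelines_by_weight, key=lambda pair: pair[0]):
        item = pipeline.process_item(item, spider)
    return item


saver = SavePipeline()
result = run_pipelines(
    {"content": "  haha  "},
    FakeSpider(),
    [(400, saver), (300, CleanPipeline())],
)
```

Because `CleanPipeline` has the smaller weight, the item is already stripped when `SavePipeline` stores it.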

## 2 Using the logging module

Spider file:

```
import logging

import scrapy

# Name the logger after this module so each log line shows where it came from
logger = logging.getLogger(__name__)


class QbSpider(scrapy.Spider):
    name = 'qb'
    allowed_domains = ['qiushibaike.com']
    start_urls = ['http://qiushibaike.com/']

    def parse(self, response):
        for i in range(10):
            item = {}
            item['content'] = "haha"
            # logging.warning(item)  # root logger: the module name would not be shown
            logger.warning(item)
            yield item
```

Pipeline file:

```
import logging

logger = logging.getLogger(__name__)


class MyspiderPipeline(object):
    def process_item(self, item, spider):
        # print(item)
        logger.warning(item)
        item['hello'] = 'world'
        # Return the item so that any later pipeline receives it
        return item
```

To save the log to a local file, set `LOG_FILE = './log.log'` in the settings file.

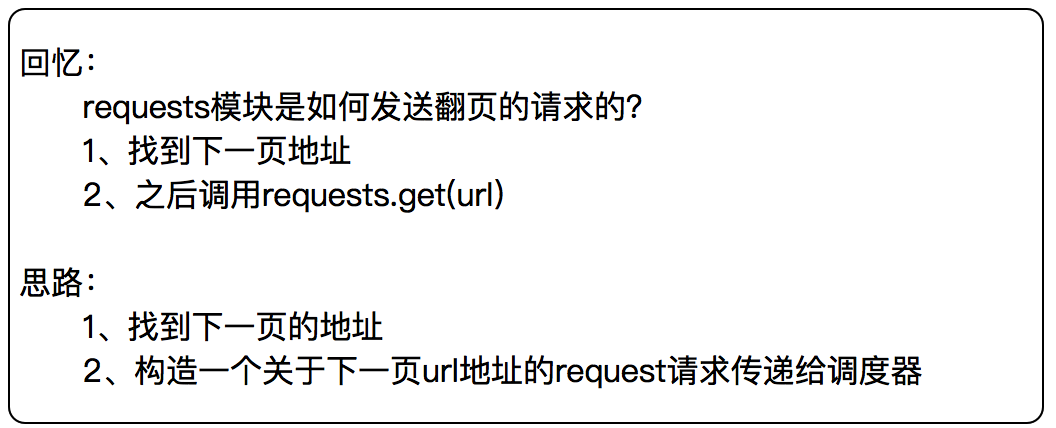
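To see why both files call `logging.getLogger(__name__)`, here is a small standard-library sketch that runs outside Scrapy. The logger name `myspider.pipelines` and the format string are assumptions chosen for illustration; Scrapy's own log format differs.

```python
import io
import logging

# Capture log output in memory instead of a file, just for the demonstration
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("[%(name)s] %(levelname)s: %(message)s"))

# Inside a real module this would be logging.getLogger(__name__)
logger = logging.getLogger("myspider.pipelines")
logger.addHandler(handler)
logger.propagate = False
logger.setLevel(logging.WARNING)

logger.warning({"content": "haha"})
logger.debug("below the WARNING threshold, so not recorded")

output = stream.getvalue()
```

Each recorded line starts with the logger's name, which is what makes it possible to tell spider messages from pipeline messages in one log file.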

## Review

This raises a question: how do we request the next page?

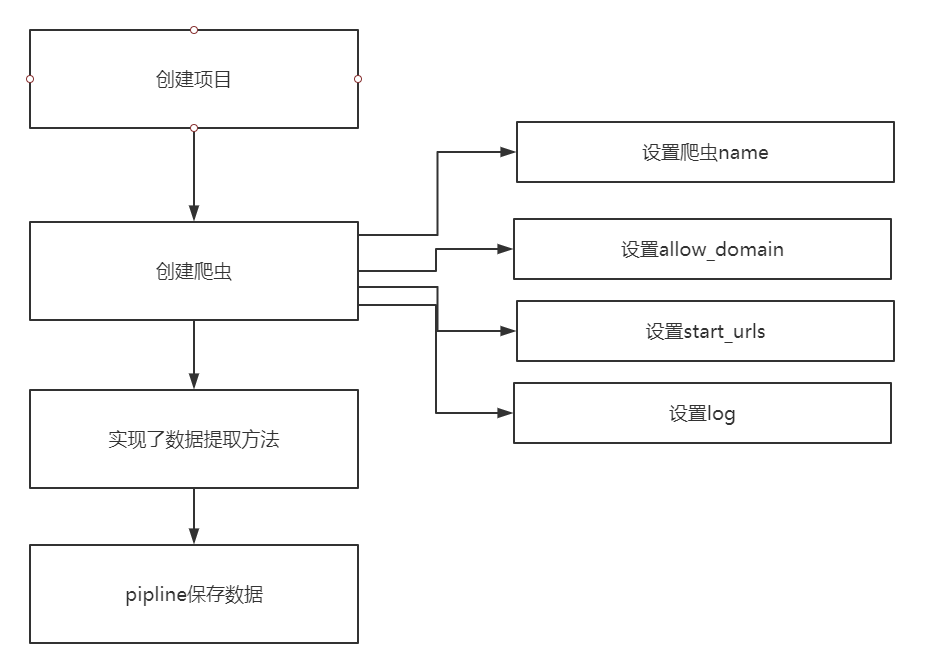

### 3 Tencent spider

By scraping the job listings on the Tencent recruitment site, we learn how to issue pagination requests.

http://hr.tencent.com/position.php

```
# Create the project
scrapy startproject tencent

# Create the spider (run from inside the project directory)
cd tencent
scrapy genspider hr tencent.com
```

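#### scrapy.Request key points

```
scrapy.Request(url, callback=None, method='GET', headers=None, body=None, cookies=None, meta=None, encoding='utf-8', priority=0,
               dont_filter=False, errback=None, flags=None)

Commonly used parameters:

callback: the parse function that will handle the response for this URL

meta: passes data between parse functions; by default meta also carries some built-in information, such as the download latency and the request depth

dont_filter: tells Scrapy's duplicate filter not to drop this URL; Scrapy deduplicates URLs by default, so this matters for URLs that need to be requested more than once
```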

## 4 Introducing and using items

```
# items.py
import scrapy


class TencentItem(scrapy.Item):
    # define the fields for your item here like:
    title = scrapy.Field()
    position = scrapy.Field()
    date = scrapy.Field()
```

### 5 Sunshine government affairs platform (阳光政务平台)

http://wz.sun0769.com/index.php/question/questionType?type=4&page=0

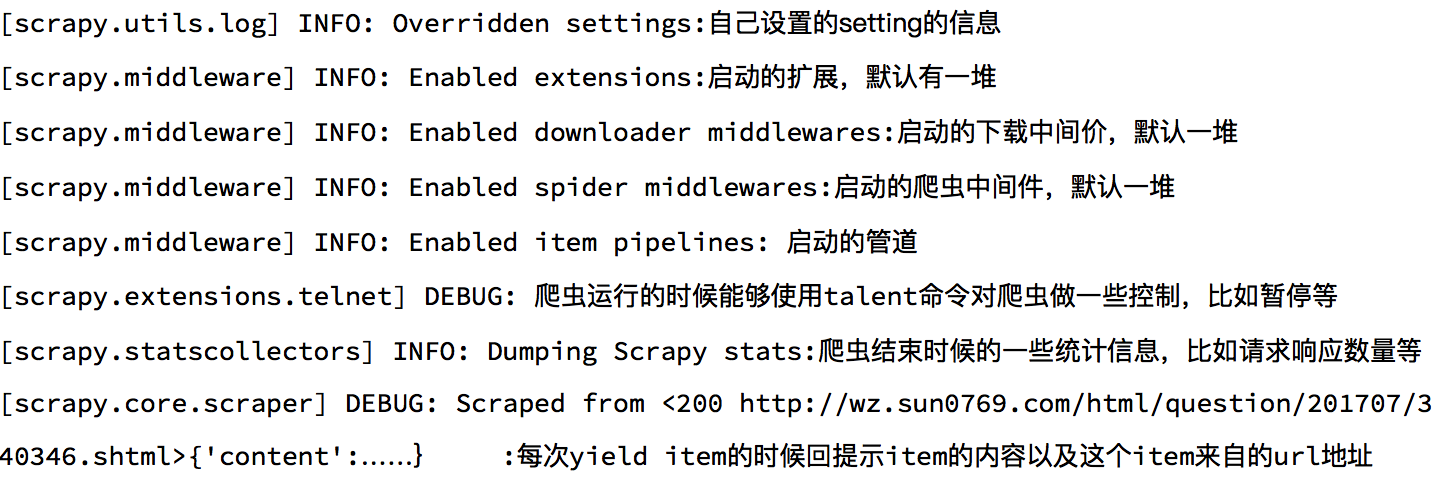

## 6 Understanding the debug output

```

2019-01-19 09:50:48 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: tencent)

2019-01-19 09:50:48 [scrapy.utils.log] INFO: Versions: lxml 4.2.5.0, libxml2 2.9.5, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.9.0, Python 3.6.5 (v3.6.5:f59c0932b4, Mar 28 2018, 17:00:18) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 18.0.0 (OpenSSL 1.1.0i 14 Aug 2018), cryptography 2.3.1, Platform Windows-10-10.0.17134-SP0 ### modules and platform that the Scrapy framework depends on

2019-01-19 09:50:48 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'tencent', 'NEWSPIDER_MODULE': 'tencent.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['tencent.spiders'], 'USER_AGENT': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36'} ### which custom settings were applied

2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled extensions: ### enabled extensions

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled downloader middlewares: ### enabled downloader middlewares

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled spider middlewares: ### enabled spider middlewares

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled item pipelines: ### enabled item pipelines

['tencent.pipelines.TencentPipeline']

2019-01-19 09:50:48 [scrapy.core.engine] INFO: Spider opened ### crawling starts

2019-01-19 09:50:48 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2019-01-19 09:50:48 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2019-01-19 09:50:51 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://hr.tencent.com/robots.txt> (referer: None) ### fetching robots.txt

2019-01-19 09:50:51 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://hr.tencent.com/position.php?&start=#a0> (referer: None) ### request for the start URL

2019-01-19 09:50:51 [scrapy.spidermiddlewares.offsite] DEBUG: Filtered offsite request to 'hr.tencent.com': <GET https://hr.tencent.com/position.php?&start=> ### a problem report: requests that the spider yields to the engine pass through the spider middlewares; this URL falls outside allowed_domains, so OffsiteMiddleware filtered it out

2019-01-19 09:50:51 [scrapy.core.engine] INFO: Closing spider (finished) ### spider closes

2019-01-19 09:50:51 [scrapy.statscollectors] INFO: Dumping Scrapy stats: ### statistics for this crawl

{'downloader/request_bytes': 630,

'downloader/request_count': 2,

'downloader/request_method_count/GET': 2,

'downloader/response_bytes': 4469,

'downloader/response_count': 2,

'downloader/response_status_count/200': 2,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2019, 1, 19, 1, 50, 51, 558634),

'log_count/DEBUG': 4,

'log_count/INFO': 7,

'offsite/domains': 1,

'offsite/filtered': 12,

'request_depth_max': 1,

'response_received_count': 2,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2019, 1, 19, 1, 50, 48, 628465)}

2019-01-19 09:50:51 [scrapy.core.engine] INFO: Spider closed (finished)

```
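The "Filtered offsite request" line in the log is easier to read with a rough model of the check behind it. The function below is a simplified sketch written for this note, not Scrapy's actual implementation: it treats a request as on-site when its host equals an allowed domain or is a subdomain of one. The `allowed_domains` values in the examples are hypothetical, since the log does not show which value the spider used.

```python
import re
from urllib.parse import urlparse


def is_offsite(url, allowed_domains):
    """Return True if the URL's host is outside every allowed domain (simplified)."""
    if not allowed_domains:
        return False  # no restriction configured
    host = urlparse(url).hostname or ""
    domains = "|".join(re.escape(domain) for domain in allowed_domains)
    # The host must be an allowed domain itself or one of its subdomains
    return re.match(r"^(.*\.)?(%s)$" % domains, host) is None


url = "https://hr.tencent.com/position.php?&start="
ok_parent = is_offsite(url, ["tencent.com"])
ok_exact = is_offsite(url, ["hr.tencent.com"])
# A common slip: putting a full URL rather than a bare domain in the list
bad_value = is_offsite(url, ["http://hr.tencent.com"])
```

Under this model the first two calls return `False` and the last returns `True`, so when a request to the expected site is filtered, the `allowed_domains` value is the first thing to check.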

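## Scrapy in depth: scrapy shell

Scrapy shell is an interactive terminal in which we can try out and debug code without starting a spider; it is also handy for testing XPath expressions.

Usage: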

scrapy shell http://www.itcast.cn/channel/teacher.shtml

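```
response.url: URL of the current response

response.request.url: URL of the request that produced the current response

response.headers: response headers

response.body: response body, i.e. the HTML source; bytes by default

response.request.headers: headers of the request that produced the current response
```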

## scrapy settings

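Why a settings file is needed:

> The settings file holds shared variables (such as the database address, account, and password)

> It makes these values easy for you and others to change

> Variable names are usually written in all capitals, e.g. `SQL_HOST = '192.168.0.1'`

More details on the settings file: [https://www.cnblogs.com/cnkai/p/7399573.html](https://www.cnblogs.com/cnkai/p/7399573.html)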
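A sketch of how a value such as `SQL_HOST` can reach a pipeline. The `from_crawler` class method and `crawler.settings.get(...)` are Scrapy's hooks for this; the class names `SqlPipeline` and `FakeCrawler` and the `stored_on` key are hypothetical. The stand-in crawler uses a plain dict so that the snippet runs without starting a crawl.

```python
# settings.py (sketch)
SQL_HOST = "192.168.0.1"


# pipelines.py (sketch)
class SqlPipeline:
    def __init__(self, host):
        self.host = host

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this hook, when defined, to build the pipeline
        return cls(host=crawler.settings.get("SQL_HOST"))

    def process_item(self, item, spider):
        item["stored_on"] = self.host  # placeholder for a real database write
        return item


# Stand-in for the crawler object that Scrapy would pass in
class FakeCrawler:
    settings = {"SQL_HOST": SQL_HOST}


pipeline = SqlPipeline.from_crawler(FakeCrawler())
item = pipeline.process_item({"title": "Engineer"}, spider=None)
```

Keeping the address in the settings file means the pipeline code stays the same when the database moves; only the one setting changes.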