*****

## Scrapy-分布式

### 什么是scrapy_redis

```

scrapy_redis:Redis-based components for scrapy

```

github地址:https://github.com/rmax/scrapy-redis

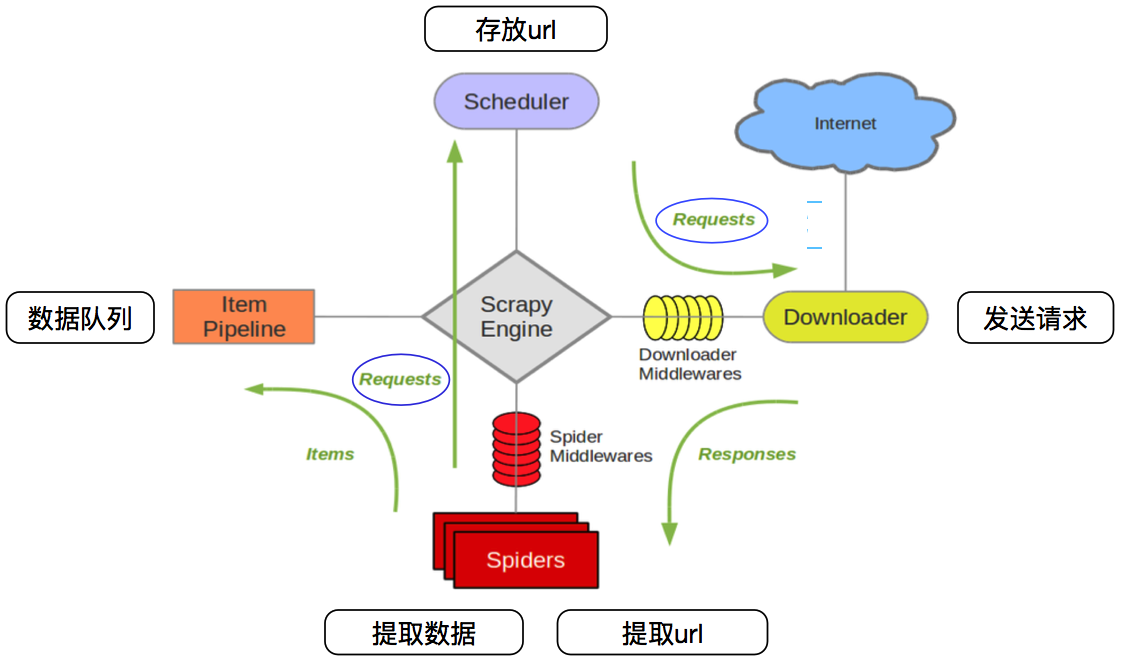

### 回顾scrapy工作流程

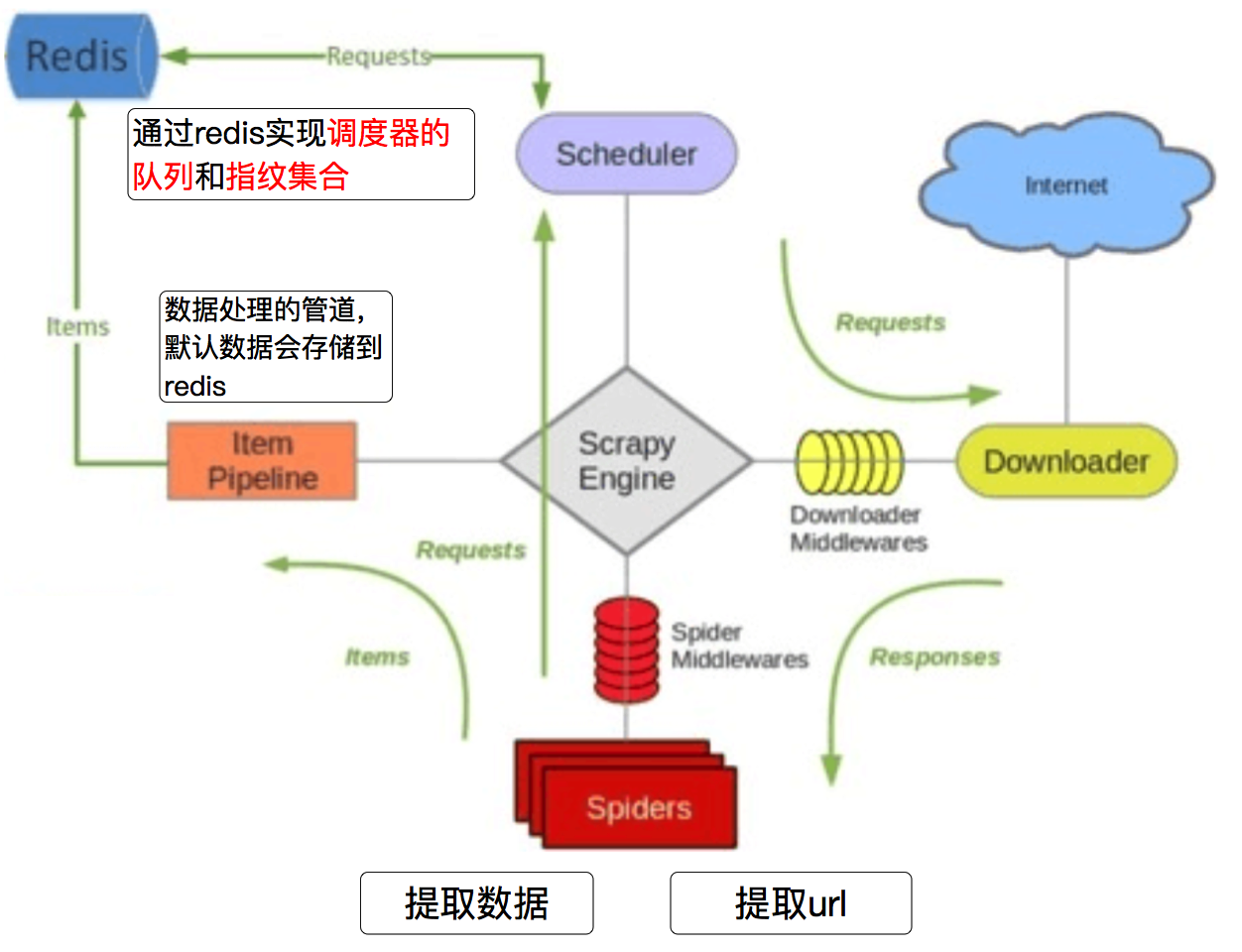

### scrapy\_redis工作流程

### scrapy_redis下载

```

clone github scrapy_redis源码文件

git clone https://github.com/rolando/scrapy-redis.git

```

### scrapy_redis中的settings文件

```

# Scrapy settings for example project

#

# For simplicity, this file contains only the most important settings by

# default. All the other settings are documented here:

#

# http://doc.scrapy.org/topics/settings.html

#

SPIDER_MODULES = ['example.spiders']

NEWSPIDER_MODULE = 'example.spiders'

USER_AGENT = 'scrapy-redis (+https://github.com/rolando/scrapy-redis)'

DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter" # 指定那个去重方法给request对象去重

SCHEDULER = "scrapy_redis.scheduler.Scheduler" # 指定Scheduler队列

SCHEDULER_PERSIST = True # 队列中的内容是否持久保存,为false的时候在关闭Redis的时候,清空Redis

#SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderPriorityQueue"

#SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderQueue"

#SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderStack"

ITEM_PIPELINES = {

'example.pipelines.ExamplePipeline': 300,

'scrapy_redis.pipelines.RedisPipeline': 400, # scrapy_redis实现的items保存到redis的pipline

}

LOG_LEVEL = 'DEBUG'

# Introduce an artifical delay to make use of parallelism. to speed up the

# crawl.

DOWNLOAD_DELAY = 1

```

### scrapy_redis运行

```

allowed_domains = ['dmoztools.net']

start_urls = ['http://www.dmoztools.net/']

scrapy crawl dmoz

```

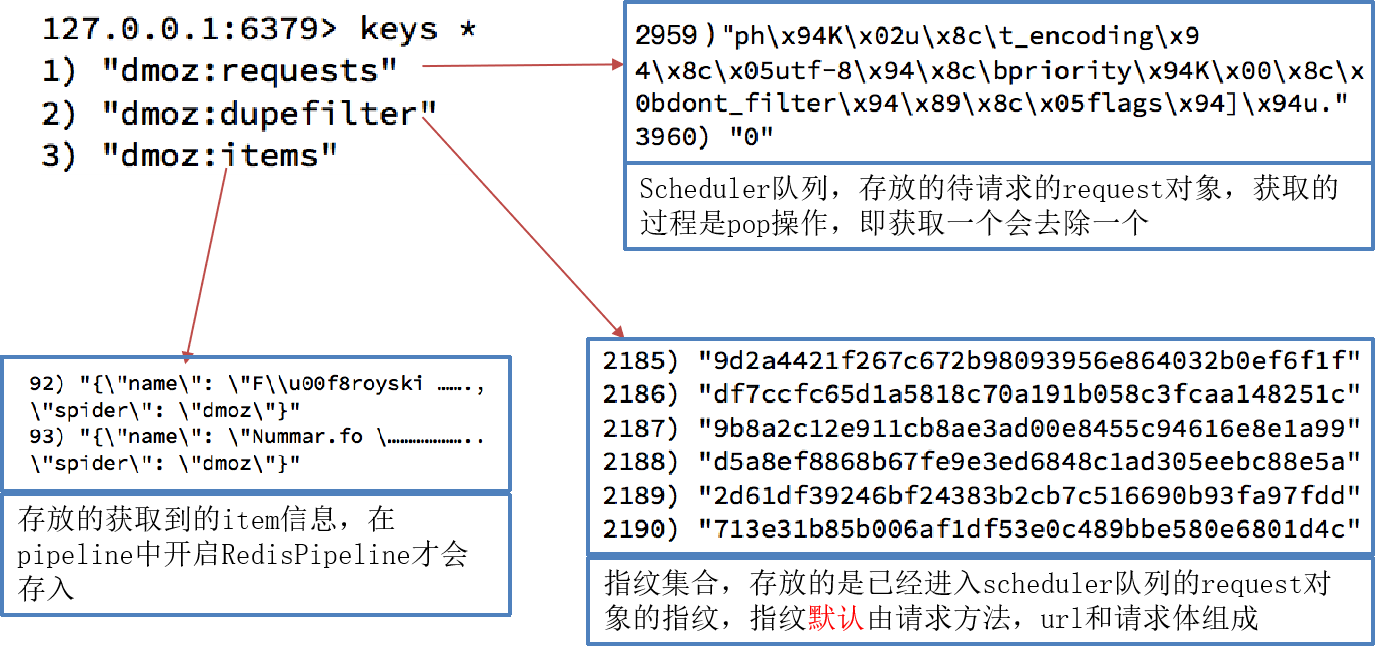

**运行结束后redis中多了三个键**

```

dmoz:requests 存放的是待爬取的requests对象

dmoz:item 爬取到的信息

dmoz:dupefilter 爬取的requests的指纹

```