[TOC]

### Environment overview

1. Check the running resources

```shell
$ kubectl -n jiaxzeng get pod,replicaset,deployment,configmap,persistentvolumeclaim
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-74b774f568-tz6wc   1/1     Running   0          15m

NAME                               DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-74b774f568   1         1         1       15m

NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   1/1     1            1           15m

NAME                     DATA   AGE
configmap/nginx-config   1      15m

NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/nginx-rbd   Bound    pvc-ce942a56-8fc9-4e6e-b1fc-69b2359d2ad9   1Gi        RWO            csi-rbd-sc     15m
```

2. Check the pod's volumes

```shell
$ kubectl -n jiaxzeng get pod nginx-74b774f568-tz6wc -o jsonpath='{range .spec.volumes[*]}{.*}{"\n"}{end}'
config map[defaultMode:420 items:[map[key:nginx.conf path:nginx.conf]] name:nginx-config]
logs map[claimName:nginx-rbd]
default-token-qzlsc map[defaultMode:420 secretName:default-token-qzlsc]
```
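The `{.*}` output above prints every field of every volume, which makes it hard to see at a glance which volumes are backed by a PVC (and are therefore the ones Restic can back up; here, `logs`). A minimal sketch, assuming you only want those volume names: print each volume's name and claim name, then keep the rows that have a claim. The helper name `pvc_volume_names` is hypothetical.

```shell
# Hypothetical helper: print the names of a pod's volumes that are backed by a PVC.
# Usage: pvc_volume_names <namespace> <pod>
pvc_volume_names() {
  # One line per volume: "<volume name><TAB><claimName, empty if not a PVC>"
  kubectl -n "$1" get pod "$2" \
    -o jsonpath='{range .spec.volumes[*]}{.name}{"\t"}{.persistentVolumeClaim.claimName}{"\n"}{end}' |
    # Keep only rows whose second column (the claim name) is non-empty
    awk -F '\t' '$2 != "" { print $1 }'
}
```

For the pod above, `pvc_volume_names jiaxzeng nginx-74b774f568-tz6wc` should print only `logs`.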

3. Check what is written to the PVC

```shell
$ kubectl -n jiaxzeng exec -it nginx-74b774f568-tz6wc -- cat /tmp/access.log
20.0.32.128 - - [11/Jul/2022:08:21:05 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
20.0.122.128 - - [11/Jul/2022:08:21:40 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
```

4. Check the ConfigMap contents

```shell
$ kubectl -n jiaxzeng get configmap nginx-config -o jsonpath='{.data}{"\n"}'
map[nginx.conf:user nginx;
worker_processes auto;
error_log /tmp/error.log notice;
pid /var/run/nginx.pid;
events {
    worker_connections 1024;
}
http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /tmp/access.log main;
    sendfile on;
    #tcp_nopush on;
    keepalive_timeout 65;
    #gzip on;
    include /etc/nginx/conf.d/*.conf;
}]
```
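The `{.data}` output above wraps the whole file in `map[...]`. Escaping the dot in the key lets JSONPath print just the `nginx.conf` value as plain text. A minimal sketch; the helper name `show_configmap_key` is hypothetical.

```shell
# Hypothetical helper: print a single ConfigMap key as plain text.
# A dot inside a key such as "nginx.conf" must be escaped in JSONPath,
# otherwise it is read as a nested field (.data.nginx -> .conf).
# Usage: show_configmap_key <namespace> <configmap> <key>
show_configmap_key() {
  # Turn every "." in the key into "\."
  escaped_key=$(printf '%s' "$3" | sed 's/\./\\./g')
  kubectl -n "$1" get configmap "$2" -o "jsonpath={.data.${escaped_key}}"
}
```

For example, `show_configmap_key jiaxzeng nginx-config nginx.conf` runs `kubectl` with `-o 'jsonpath={.data.nginx\.conf}'`.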

### Create a backup

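Velero supports two ways of discovering which pod volumes to back up with Restic:

1. Opt-in: every pod that has volumes to be backed up with Restic must be annotated with the names of those volumes.

2. Opt-out: all pod volumes are backed up with Restic, and any volume that should not be backed up can be explicitly excluded.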

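> To tell which method is in use, check whether Velero was installed with `--default-volumes-to-restic`: if it was, the Opt-out method applies; if not, the Opt-in method applies.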

> Velero was installed with the Opt-in method in the previous chapter, so only the Opt-in method is covered here.
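One way to check for that flag after the fact is to inspect the arguments of the Velero server container. This is a sketch under two assumptions: Velero runs as `deployment/velero` in the `velero` namespace (the layout `velero install` creates by default), and the install flag shows up in the container's `args` as `--default-volumes-to-restic`. The helper name `restic_volume_mode` is hypothetical.

```shell
# Hypothetical helper: report whether Velero backs up pod volumes with Restic
# by default (opt-out) or only when a pod is annotated (opt-in).
# Assumes the server runs as deployment/velero in the "velero" namespace.
restic_volume_mode() {
  args=$(kubectl -n velero get deployment velero \
    -o jsonpath='{.spec.template.spec.containers[0].args}')
  # An explicit "=false" means opt-in; the bare flag or "=true" means opt-out.
  case "$args" in
    *--default-volumes-to-restic=false*) echo "opt-in" ;;
    *--default-volumes-to-restic*)       echo "opt-out" ;;
    *)                                   echo "opt-in" ;;
  esac
}
```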

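Velero does not back up every pod volume with Restic. With the Opt-in method, only the volumes named in the pod's `backup.velero.io/backup-volumes` annotation are backed up. With the Opt-out method, all pod volumes are backed up except:

- Volumes mounting the default service account token, Kubernetes Secrets, and ConfigMaps

- Hostpath volumes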
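With the Opt-in method, the Velero documentation annotates the pod with `backup.velero.io/backup-volumes=<volume>[,<volume>...]`. A minimal sketch wrapping that `kubectl annotate` call; the helper name `optin_restic_volumes` is hypothetical, and the example values assume the pod and the PVC-backed volume `logs` from the environment above.

```shell
# Hypothetical helper: opt a pod's volumes in to Restic backup.
# Usage: optin_restic_volumes <namespace> <pod> <volume>[,<volume>...]
optin_restic_volumes() {
  # --overwrite replaces an existing annotation value instead of failing
  kubectl -n "$1" annotate "pod/$2" \
    "backup.velero.io/backup-volumes=$3" --overwrite
}
```

For example, `optin_restic_volumes jiaxzeng nginx-74b774f568-tz6wc logs`. Because the annotation lives on the pod object, it is lost when the Deployment replaces the pod; putting it under the Deployment's `spec.template.metadata.annotations` keeps it, at the cost of a rolling restart.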

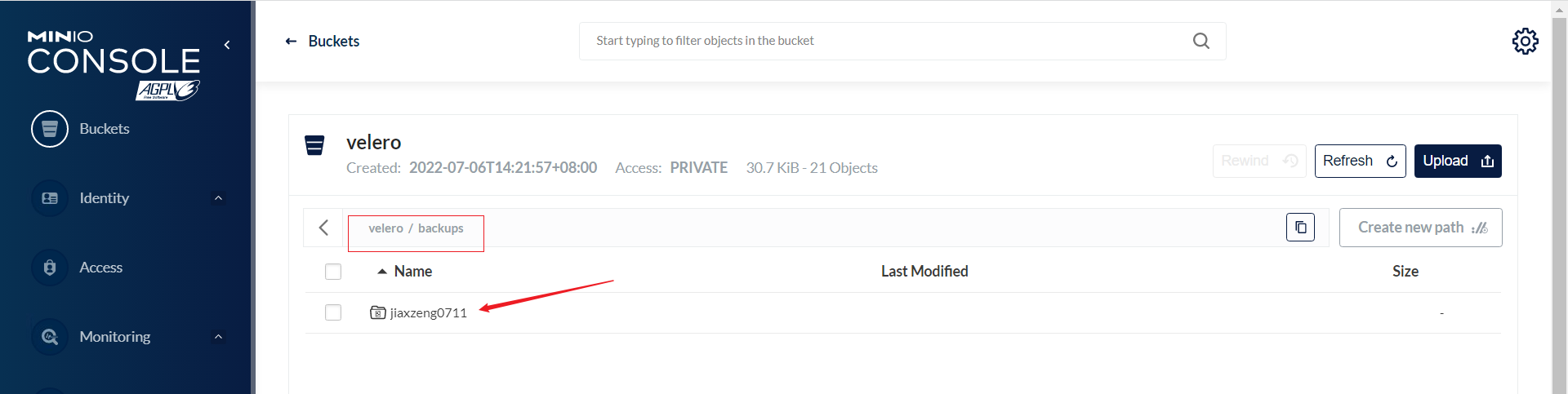

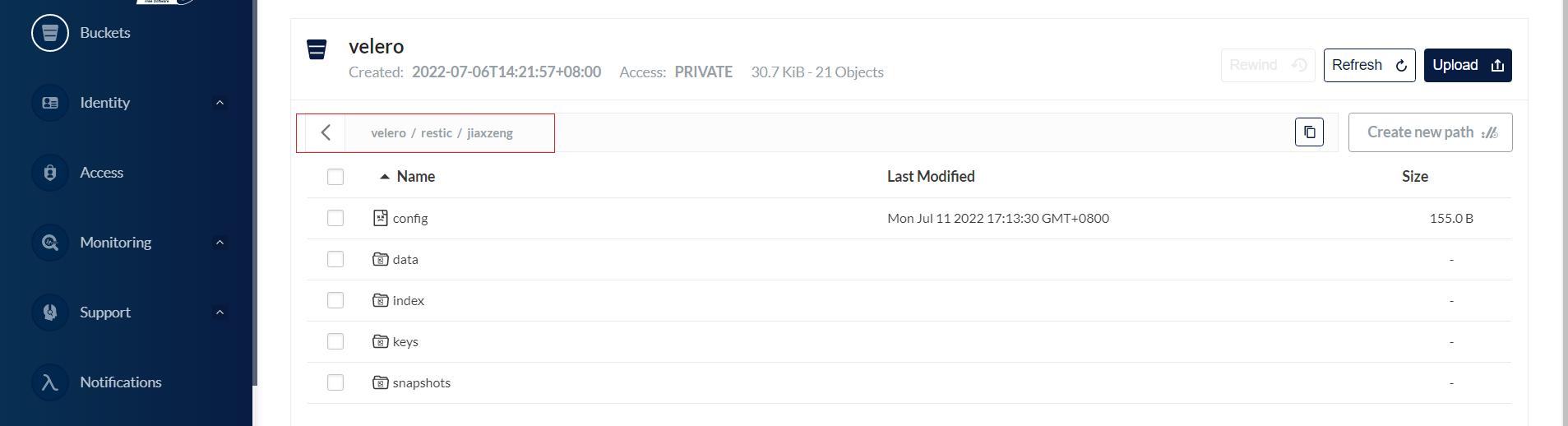

1. Back up the resources in the `jiaxzeng` namespace

```shell
$ velero create backup jiaxzeng0711 --include-namespaces jiaxzeng
Backup request "jiaxzeng0711" submitted successfully.
Run `velero backup describe jiaxzeng0711` or `velero backup logs jiaxzeng0711` for more details.
```
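`velero create backup` returns as soon as the request is submitted, not when the backup finishes. A minimal polling sketch, assuming the Backup object lives in the `velero` namespace (as the `Namespace: velero` line of `velero backup describe` shows) and reading its `.status.phase`. The helper name and the default retry limits are hypothetical; the Velero CLI's `--wait` option on `velero backup create` should give a similar blocking behaviour.

```shell
# Hypothetical helper: poll a Velero Backup or Restore until it reaches a final phase.
# Usage: wait_velero_phase <backups|restores> <name> [max_checks] [interval_seconds]
# Returns 0 on Completed, 1 on a failed phase or on timeout.
wait_velero_phase() {
  kind=$1 name=$2 max=${3:-60} interval=${4:-5}
  i=0
  while [ "$i" -lt "$max" ]; do
    phase=$(kubectl -n velero get "$kind.velero.io" "$name" \
      -o jsonpath='{.status.phase}' 2>/dev/null)
    case "$phase" in
      Completed) echo "$name: $phase"; return 0 ;;
      PartiallyFailed|Failed|FailedValidation) echo "$name: $phase" >&2; return 1 ;;
    esac
    # New, InProgress or not visible yet: check again after a pause
    i=$((i + 1))
    sleep "$interval"
  done
  echo "$name: timed out (last phase: ${phase:-unknown})" >&2
  return 1
}
```

For example, `wait_velero_phase backups jiaxzeng0711`; the same helper works for a restore with `restores` as the first argument.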

2. Check the backup status

```shell
$ velero backup describe jiaxzeng0711
Name:         jiaxzeng0711
Namespace:    velero
Labels:       velero.io/storage-location=default
Annotations:  velero.io/source-cluster-k8s-gitversion=v1.18.18
              velero.io/source-cluster-k8s-major-version=1
              velero.io/source-cluster-k8s-minor-version=18

Phase:  Completed

Errors:    0
Warnings:  0

Namespaces:
  Included:  jiaxzeng
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        <none>
  Cluster-scoped:  auto

Label selector:  <none>

Storage Location:  default

Velero-Native Snapshot PVs:  auto

TTL:  720h0m0s

Hooks:  <none>

Backup Format Version:  1.1.0

Started:    2022-07-11 17:13:25 +0800 CST
Completed:  2022-07-11 17:13:34 +0800 CST

Expiration:  2022-08-10 17:13:25 +0800 CST

Total items to be backed up:  20
Items backed up:              20

Velero-Native Snapshots: <none included>

Restic Backups (specify --details for more information):
  Completed:  1
```

> If the backup fails, check its detailed logs.
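The output of the backup commands already points at the two useful tools: `velero backup describe <name> --details` lists the per-volume Restic results, and `velero backup logs <name>` prints the server log for that backup. A sketch that filters those logs down to warnings and errors, assuming the logrus-style `level=...` fields that Velero log lines usually carry; the helper name `backup_problems` is hypothetical.

```shell
# Hypothetical helper: show only warning/error lines from a backup's logs.
# Usage: backup_problems <backup-name>
backup_problems() {
  # Assumes log lines contain a "level=<level>" field
  velero backup logs "$1" | grep -E 'level=(warning|error|fatal)'
}
```

For example, `backup_problems jiaxzeng0711` prints nothing (and returns non-zero, as `grep` does) when the backup logged no warnings or errors.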

### Restore the service

1. Simulate a failure

```shell
$ kubectl delete ns jiaxzeng
namespace "jiaxzeng" deleted
```

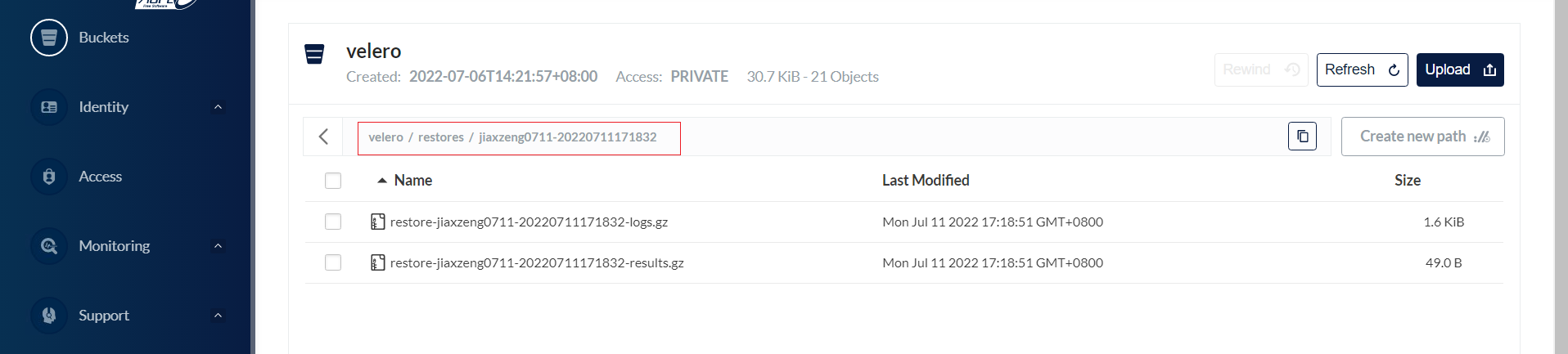

2. Restore from the backup

```shell
$ velero create restore --from-backup jiaxzeng0711
Restore request "jiaxzeng0711-20220711171832" submitted successfully.
Run `velero restore describe jiaxzeng0711-20220711171832` or `velero restore logs jiaxzeng0711-20220711171832` for more details.
```

3. Check the restore status

```shell
$ velero restore describe jiaxzeng0711-20220711171832
Name:         jiaxzeng0711-20220711171832
Namespace:    velero
Labels:       <none>
Annotations:  <none>

Phase:                       Completed
Total items to be restored:  9
Items restored:              9

Started:    2022-07-11 17:18:32 +0800 CST
Completed:  2022-07-11 17:18:51 +0800 CST

Backup:  jiaxzeng0711

Namespaces:
  Included:  all namespaces found in the backup
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io
  Cluster-scoped:  auto

Namespace mappings:  <none>

Label selector:  <none>

Restore PVs:  auto

Restic Restores (specify --details for more information):
  Completed:  1

Preserve Service NodePorts:  auto
```

4. Verify that the restore succeeded

```shell
$ kubectl get ns
NAME              STATUS   AGE
default           Active   110d
ingress-nginx     Active   108d
jiaxzeng          Active   44s
kube-mon          Active   24d
kube-node-lease   Active   110d
kube-public       Active   110d
kube-storage      Active   25d
kube-system       Active   110d
velero            Active   5d2h

$ kubectl -n jiaxzeng get pod,replicaset,deployment,configmap,persistentvolumeclaim
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-74b774f568-tz6wc   1/1     Running   0          72s

NAME                               DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-74b774f568   1         1         1       72s

NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   1/1     1            1           72s

NAME                     DATA   AGE
configmap/nginx-config   1      73s

NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/nginx-rbd   Bound    pvc-cf97efd9-e71a-42b9-aca5-ac47402c6f93   1Gi        RWO            csi-rbd-sc     73s

$ kubectl -n jiaxzeng get pod nginx-74b774f568-tz6wc -o jsonpath='{range .spec.volumes[*]}{.*}{"\n"}{end}'
config map[defaultMode:420 items:[map[key:nginx.conf path:nginx.conf]] name:nginx-config]
logs map[claimName:nginx-rbd]
map[defaultMode:420 secretName:default-token-qzlsc] default-token-qzlsc

$ kubectl -n jiaxzeng get configmap nginx-config -o jsonpath='{.data}{"\n"}'
map[nginx.conf:user nginx;
worker_processes auto;
error_log /tmp/error.log notice;
pid /var/run/nginx.pid;
events {
    worker_connections 1024;
}
http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /tmp/access.log main;
    sendfile on;
    #tcp_nopush on;
    keepalive_timeout 65;
    #gzip on;
    include /etc/nginx/conf.d/*.conf;
}]
```
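The checks above cover the Kubernetes objects. The data Restic restored onto the new PVC can be checked the same way it was inspected before the backup, by reading `/tmp/access.log` in the restored pod: the two requests logged earlier should still be there. A minimal sketch; the helper name `check_restored_lines` and the idea of comparing line counts are assumptions, not part of Velero.

```shell
# Hypothetical helper: check that a file restored onto a PVC has at least N lines.
# Usage: check_restored_lines <namespace> <pod> <path> <min_lines>
check_restored_lines() {
  # No -it: a TTY is not needed just to read the file;
  # tr strips the padding some wc implementations add
  lines=$(kubectl -n "$1" exec "$2" -- cat "$3" | wc -l | tr -d ' ')
  if [ "$lines" -ge "$4" ]; then
    echo "ok: $3 has $lines lines"
  else
    echo "unexpected: $3 has $lines lines (want >= $4)" >&2
    return 1
  fi
}
```

For example, `check_restored_lines jiaxzeng nginx-74b774f568-tz6wc /tmp/access.log 2`.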
