[TOC]

# Quick notes

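- proxy_arp: when an ARP request targets an address in another subnet, the router answers with its own MAC address on behalf of the target (a benign form of spoofing), so the two hosts can still communicate. `0` disables it, `1` enables it.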

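> With proxy_arp enabled (`/proc/sys/net/ipv4/conf/[interface name]/proxy_arp`): if the IP address in the request is not one of the host's own interface addresses and the host has a route to that address, the host replies with its own MAC address; if it has no route to that address, it does not reply.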
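A minimal sketch for checking the flag described above. The helper name `proxy_arp_status` and its optional second argument (an alternative configuration root, useful for trying the helper against a scratch directory) are assumptions made for illustration, not part of any tool.

```shell
# proxy_arp_status IFACE [CONF_ROOT]
# Prints "enabled" or "disabled" for the interface's proxy_arp flag.
# CONF_ROOT defaults to the real sysctl tree; it is a parameter only so the
# helper can be exercised without touching /proc.
proxy_arp_status() {
  iface="$1"
  conf_root="${2:-/proc/sys/net/ipv4/conf}"
  flag_file="$conf_root/$iface/proxy_arp"

  if [ ! -r "$flag_file" ]; then
    echo "unknown: cannot read $flag_file" >&2
    return 1
  fi

  if [ "$(cat "$flag_file")" = "1" ]; then
    echo "enabled"
  else
    echo "disabled"
  fi
}
```

For example, `proxy_arp_status eth0` reports the flag for `eth0` on the machine where it runs.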

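- Identify the veth pair that links a container to its host

1. Inside the container, run `cat /sys/class/net/eth0/iflink` to get the interface index of the peer veth device on the host.

2. On the host, run `ip r | grep [container IP address]` to find the name of that interface.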
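The index from step 1 can also be matched directly against the host's interface list. A minimal sketch, assuming a hypothetical helper `host_veth_by_index` that reads `ip -o link` output on stdin and prints the name of the interface with the given index:

```shell
# host_veth_by_index INDEX
# Reads `ip -o link` output on stdin and prints the name of the interface
# whose index equals INDEX, with any "@ifN" peer suffix stripped.
host_veth_by_index() {
  awk -F': ' -v idx="$1" '$1 == idx { sub(/@.*/, "", $2); print $2; exit }'
}

# Intended use on the host (INDEX is the value read from the container's
# /sys/class/net/eth0/iflink):
#   ip -o link | host_veth_by_index 11
```

Parsing the one-line-per-interface form (`-o`) keeps the match to a single field comparison instead of a multi-line scan.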

- IPIP corresponds to IP protocol number 4

- tcpdump capture: `tcpdump 'ip proto 4'`

- Wireshark filter: `ip.proto == 4`

# Same-node communication

## Background on the two pods

Pod placement:

```shell
$ kubectl get pod -l app=fileserver -owide
NAME                         READY   STATUS    RESTARTS   AGE   IP              NODE             NOMINATED NODE   READINESS GATES
fileserver-7cb9d7d4d-h99sp   1/1     Running   0          14m   172.26.40.147   192.168.32.127   <none>           <none>
fileserver-7cb9d7d4d-mssdr   1/1     Running   0          14m   172.26.40.146   192.168.32.127   <none>           <none>
```

Details of the `fileserver-7cb9d7d4d-mssdr` container:

```shell
# IP address information
$ kubectl exec -it fileserver-7cb9d7d4d-mssdr -- ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
4: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1480 qdisc noqueue state UP
    link/ether 26:05:c7:19:a8:cf brd ff:ff:ff:ff:ff:ff
    inet 172.26.40.146/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::2405:c7ff:fe19:a8cf/64 scope link
       valid_lft forever preferred_lft forever

# Routing information
$ kubectl exec -it fileserver-7cb9d7d4d-mssdr -- ip r
default via 169.254.1.1 dev eth0
169.254.1.1 dev eth0 scope link

# Host-side interface name of the veth pair (run on the host)
$ ip r | grep 172.26.40.146
172.26.40.146 dev calie64b9fa939d scope link
```

Details of the `fileserver-7cb9d7d4d-h99sp` container:

```shell
# IP address information
$ kubectl exec -it fileserver-7cb9d7d4d-h99sp -- ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
4: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1480 qdisc noqueue state UP
    link/ether 7a:3a:28:54:4e:03 brd ff:ff:ff:ff:ff:ff
    inet 172.26.40.147/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::783a:28ff:fe54:4e03/64 scope link
       valid_lft forever preferred_lft forever

# Routing information
$ kubectl exec -it fileserver-7cb9d7d4d-h99sp -- ip r
default via 169.254.1.1 dev eth0
169.254.1.1 dev eth0 scope link

# Host-side interface name of the veth pair (run on the host)
$ ip r | grep 172.26.40.147
172.26.40.147 dev calic40aae79714 scope link
```

## IPIP

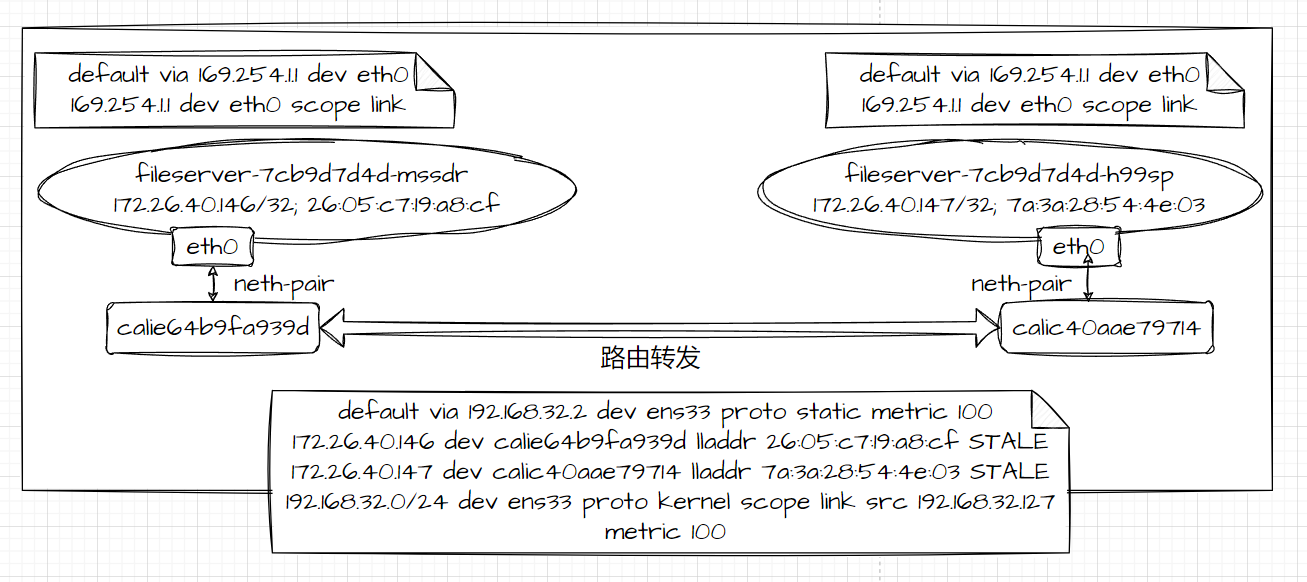

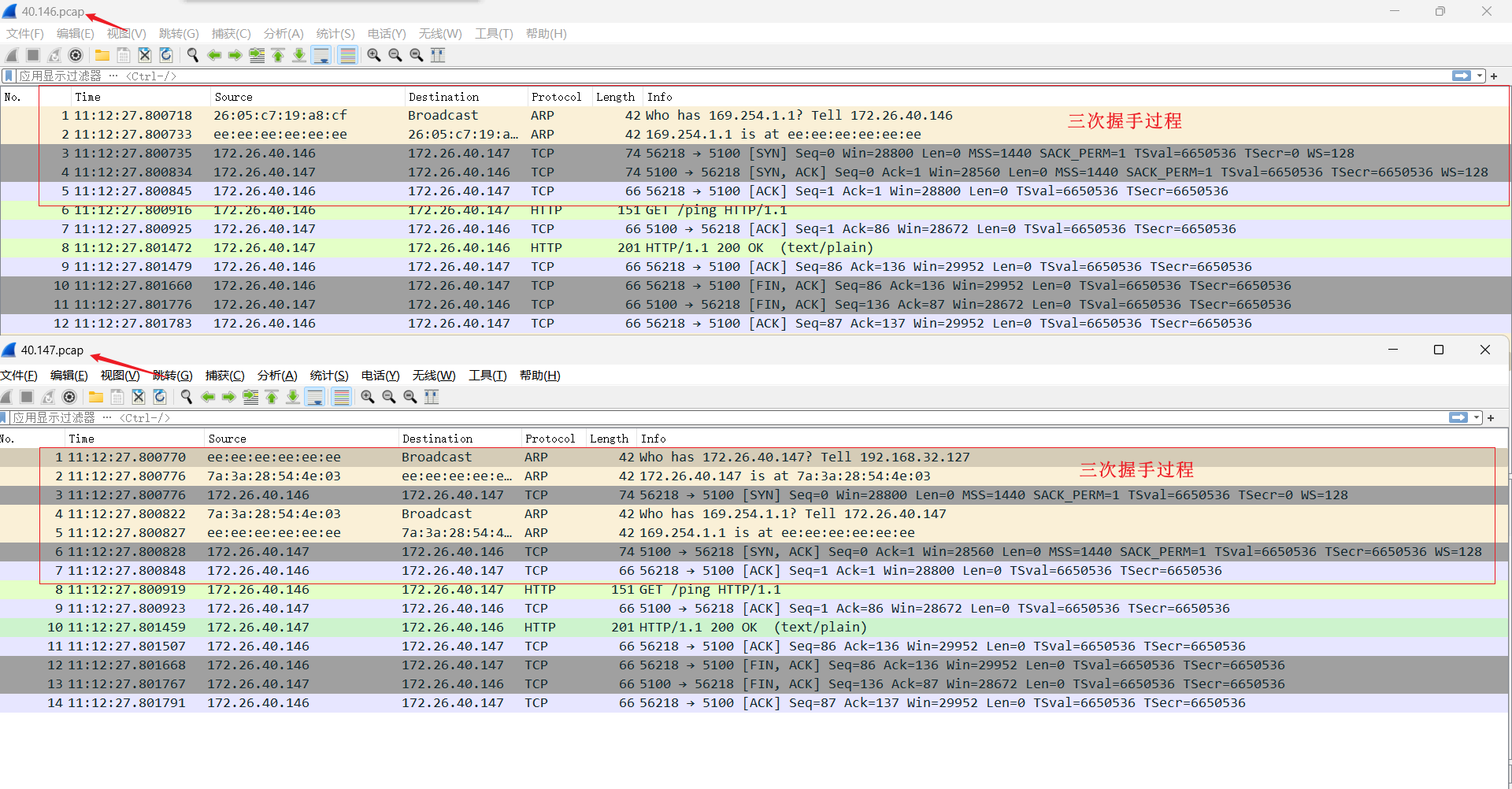

Packet flow diagram from `fileserver-7cb9d7d4d-mssdr` to `fileserver-7cb9d7d4d-h99sp`, two pods on the same node.

Verify with a packet capture on both host-side veth interfaces:

```shell
tcpdump -i calie64b9fa939d -penn
tcpdump -i calic40aae79714 -penn
```

## BGP

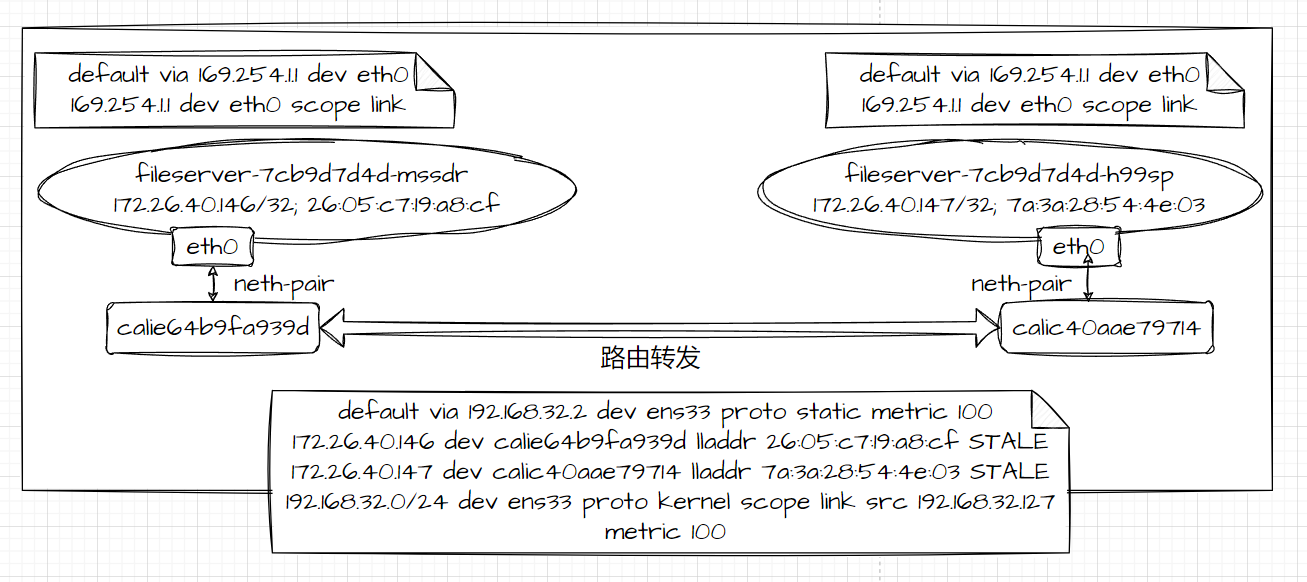

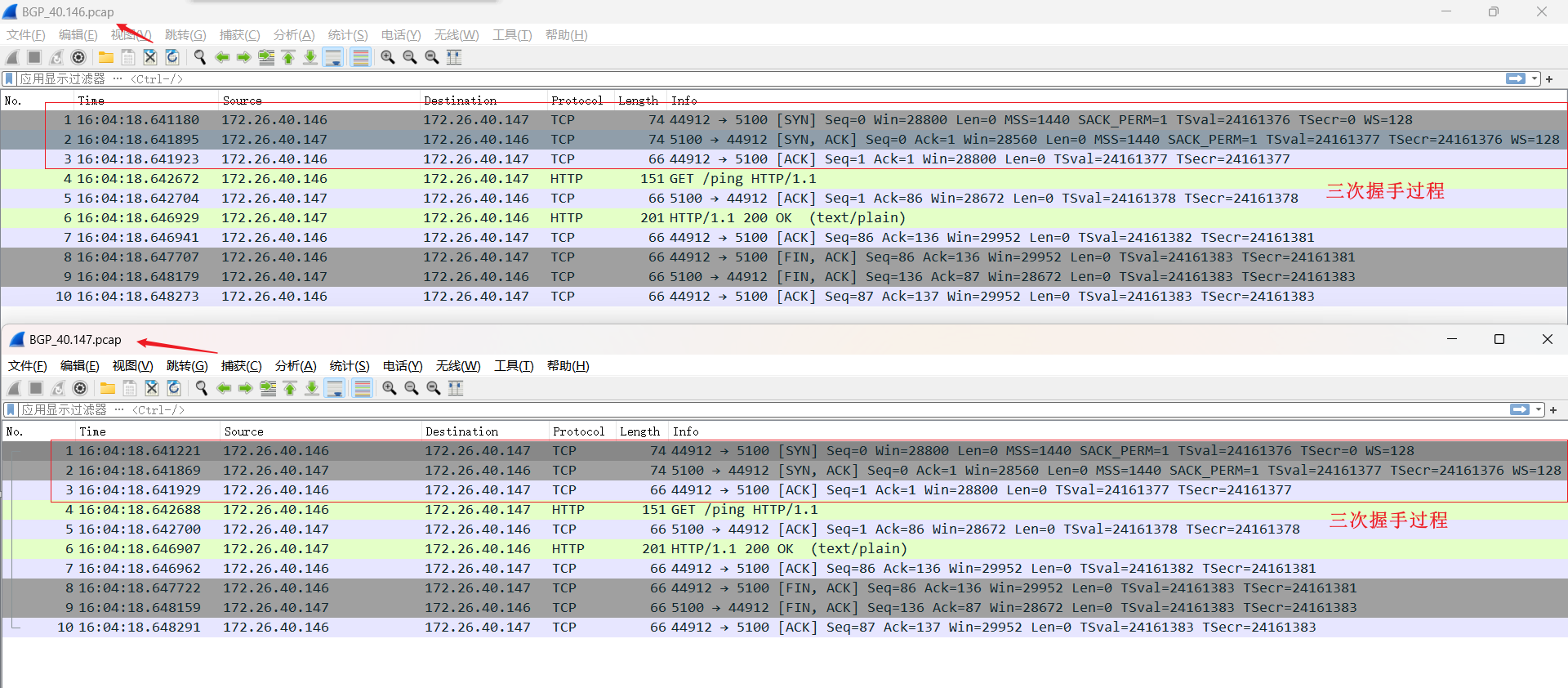

Packet flow diagram from `fileserver-7cb9d7d4d-mssdr` to `fileserver-7cb9d7d4d-h99sp`, two pods on the same node.

Verify with a packet capture on both host-side veth interfaces:

```shell
tcpdump -i calie64b9fa939d -penn
tcpdump -i calic40aae79714 -penn
```

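> The three-way handshake proceeds exactly as in IPIP mode; the screenshots in this section show the captured packets. Because the ARP tables of the pod and the host were already populated during the IPIP test, fewer ARP broadcast packets appear in this capture.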
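To see the full ARP exchange again, the pod's neighbour cache has to be empty before the capture starts. A hedged sketch: `gateway_neigh_state` is a hypothetical helper that reads `ip neigh show` output on stdin and prints the cache state recorded for the pod's default gateway `169.254.1.1`, or `absent` when there is no entry.

```shell
# gateway_neigh_state [GATEWAY_IP]
# Reads `ip neigh show` output on stdin and prints the state (last field) of
# the entry for GATEWAY_IP, or "absent" if no entry exists.
gateway_neigh_state() {
  awk -v gw="${1:-169.254.1.1}" '
    $1 == gw { state = $NF }
    END { print (state == "" ? "absent" : state) }
  '
}

# Intended use inside the pod's network namespace:
#   ip neigh show dev eth0 | gateway_neigh_state
#   ip neigh flush dev eth0   # clears the cache; needs sufficient privileges
```

If the helper prints `absent`, the next packet the pod sends should trigger a fresh ARP request that the capture can record.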

# Cross-node communication

## Background on the two pods

Pod placement:

```shell
$ kubectl get pod -owide -l app=fileserver
NAME                          READY   STATUS    RESTARTS   AGE   IP               NODE             NOMINATED NODE   READINESS GATES
fileserver-595ccd77dd-hh8c7   1/1     Running   0          7s    172.26.40.161    192.168.32.127   <none>           <none>
fileserver-595ccd77dd-k9bzv   1/1     Running   0          8s    172.26.122.151   192.168.32.128   <none>           <none>
```

Details of the `fileserver-595ccd77dd-hh8c7` container:

```shell
# IP address information
$ kubectl exec -it fileserver-595ccd77dd-hh8c7 -- ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
4: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 82:de:a5:aa:e4:41 brd ff:ff:ff:ff:ff:ff
    inet 172.26.40.161/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::80de:a5ff:feaa:e441/64 scope link
       valid_lft forever preferred_lft forever

# Routing information
$ kubectl exec -it fileserver-595ccd77dd-hh8c7 -- ip r
default via 169.254.1.1 dev eth0
169.254.1.1 dev eth0 scope link

# Host-side interface name of the veth pair (run on host 192.168.32.127)
$ ip r | grep 172.26.40.161
172.26.40.161 dev cali5e8dd2e9d68 scope link
```

Details of the `fileserver-595ccd77dd-k9bzv` container:

```shell
# IP address information
$ kubectl exec -it fileserver-595ccd77dd-k9bzv -- ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
4: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 12:ec:00:00:e6:71 brd ff:ff:ff:ff:ff:ff
    inet 172.26.122.151/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::10ec:ff:fe00:e671/64 scope link
       valid_lft forever preferred_lft forever

# Routing information
$ kubectl exec -it fileserver-595ccd77dd-k9bzv -- ip r
default via 169.254.1.1 dev eth0
169.254.1.1 dev eth0 scope link

# Host-side interface name of the veth pair (run on host 192.168.32.128)
$ ip r | grep 172.26.122.151
172.26.122.151 dev cali7b1def0e886 scope link
```

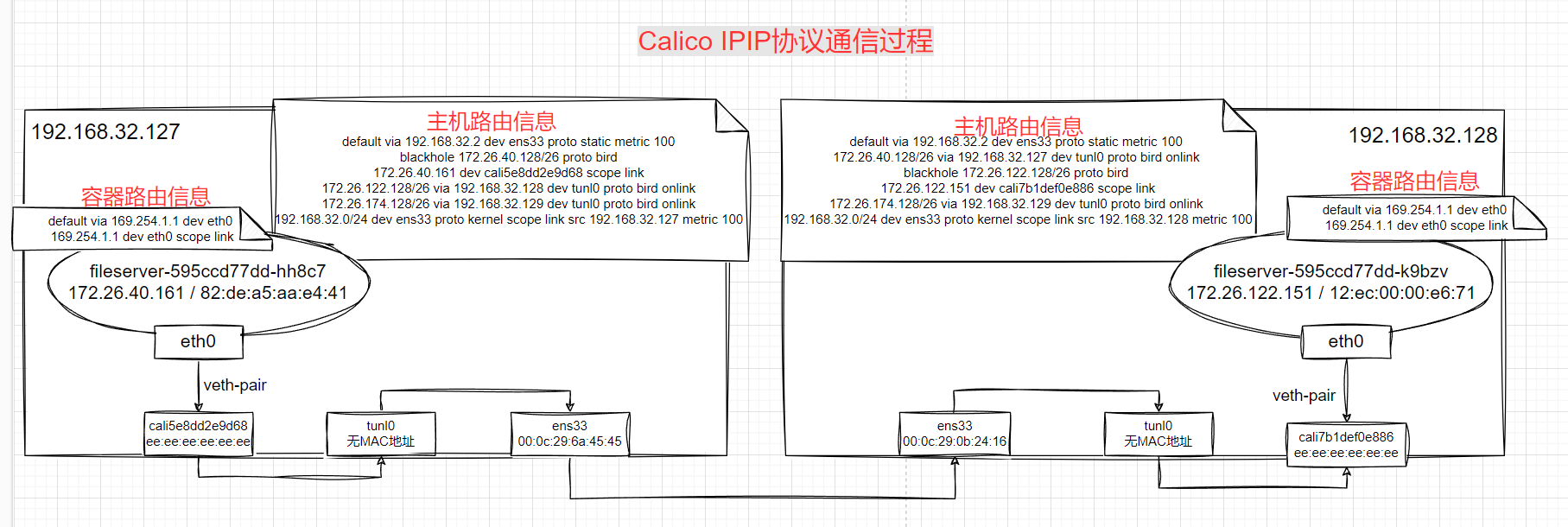

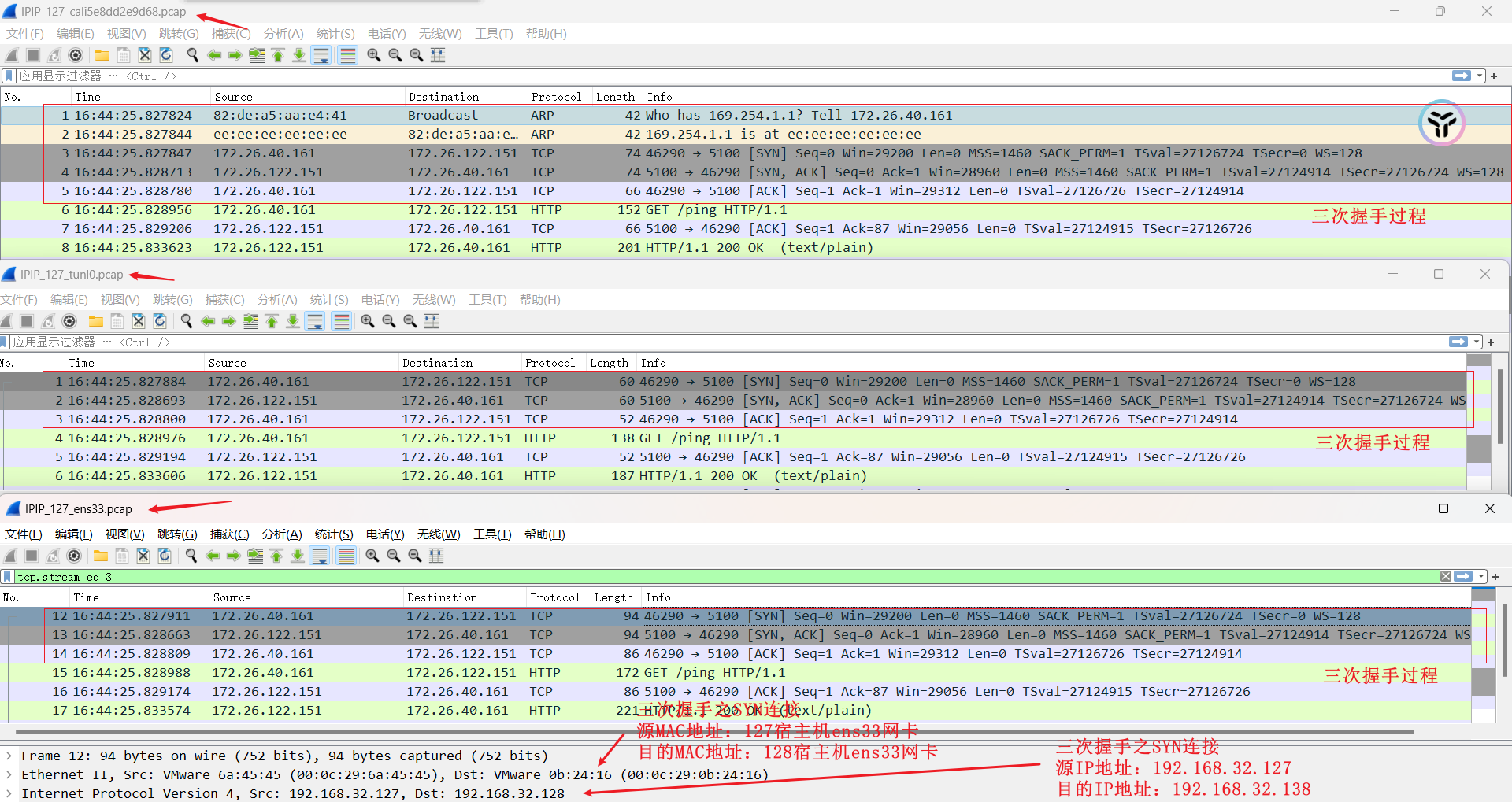

## IPIP

Packet flow diagram from `fileserver-595ccd77dd-hh8c7` to `fileserver-595ccd77dd-k9bzv`, two pods on different nodes.

Verify with packet captures on both hosts:

```shell
# Capture on host 192.168.32.127
tcpdump -i ens33 -penn host 192.168.32.128 and 'ip proto 4'
tcpdump -i tunl0 -penn host 172.26.122.151
tcpdump -i cali5e8dd2e9d68 -penn

# Capture on host 192.168.32.128
tcpdump -i ens33 -penn host 192.168.32.127 and 'ip proto 4'
tcpdump -i tunl0 -penn host 172.26.40.161
tcpdump -i cali7b1def0e886 -penn
```

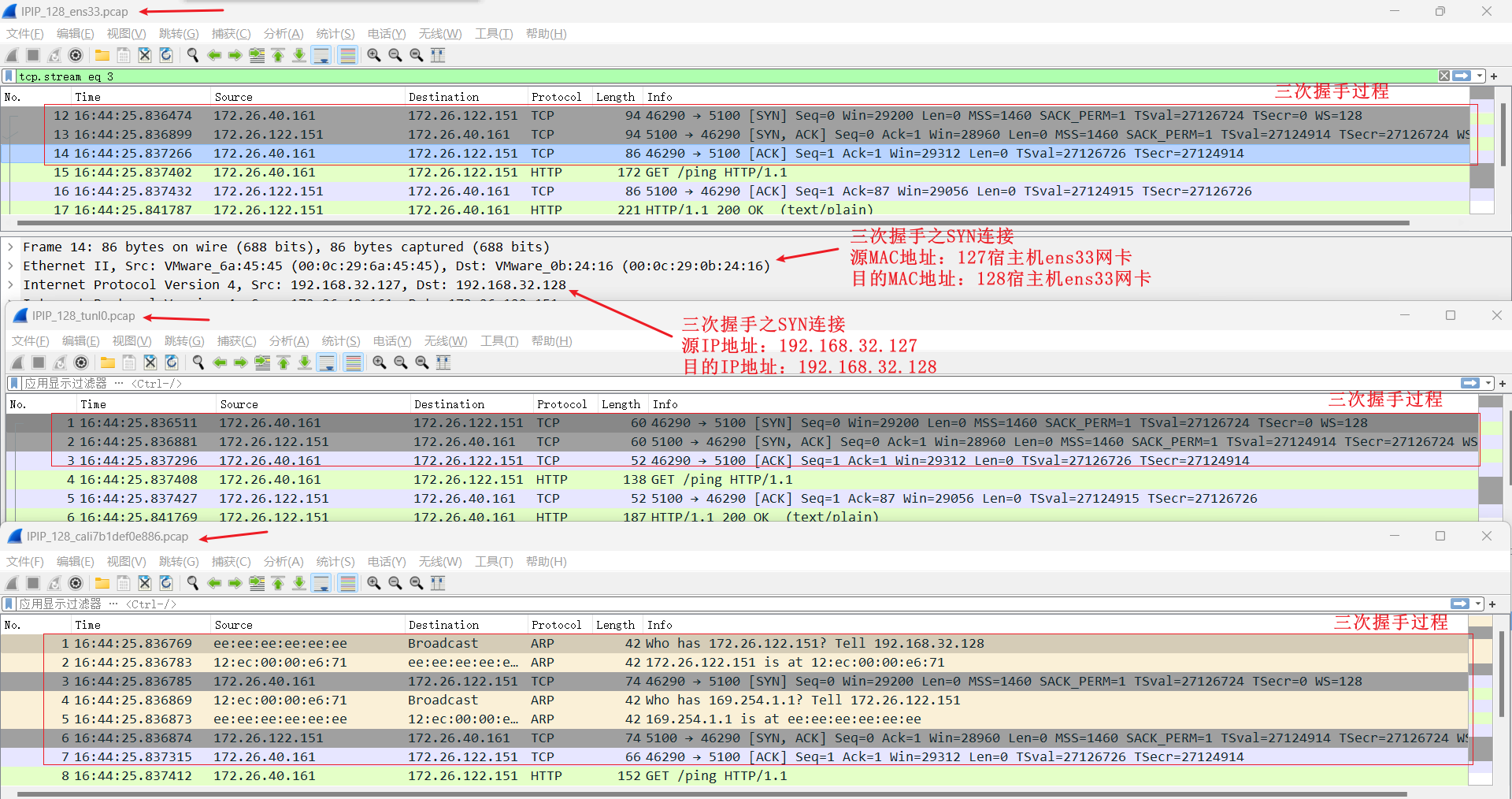

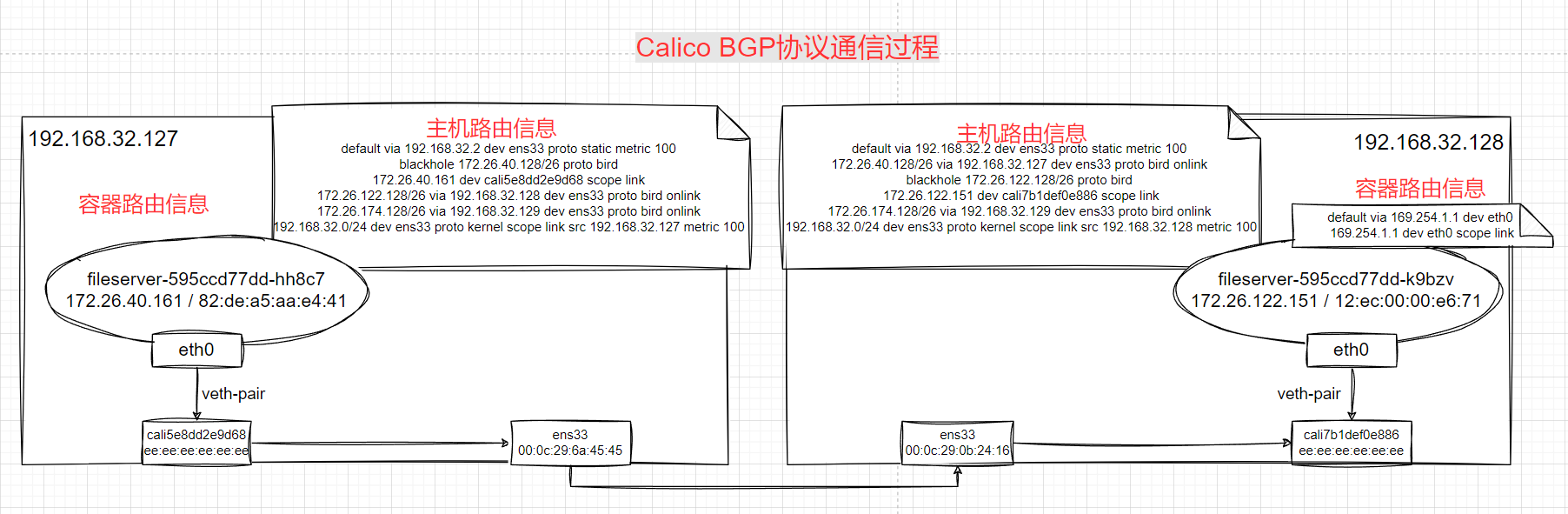

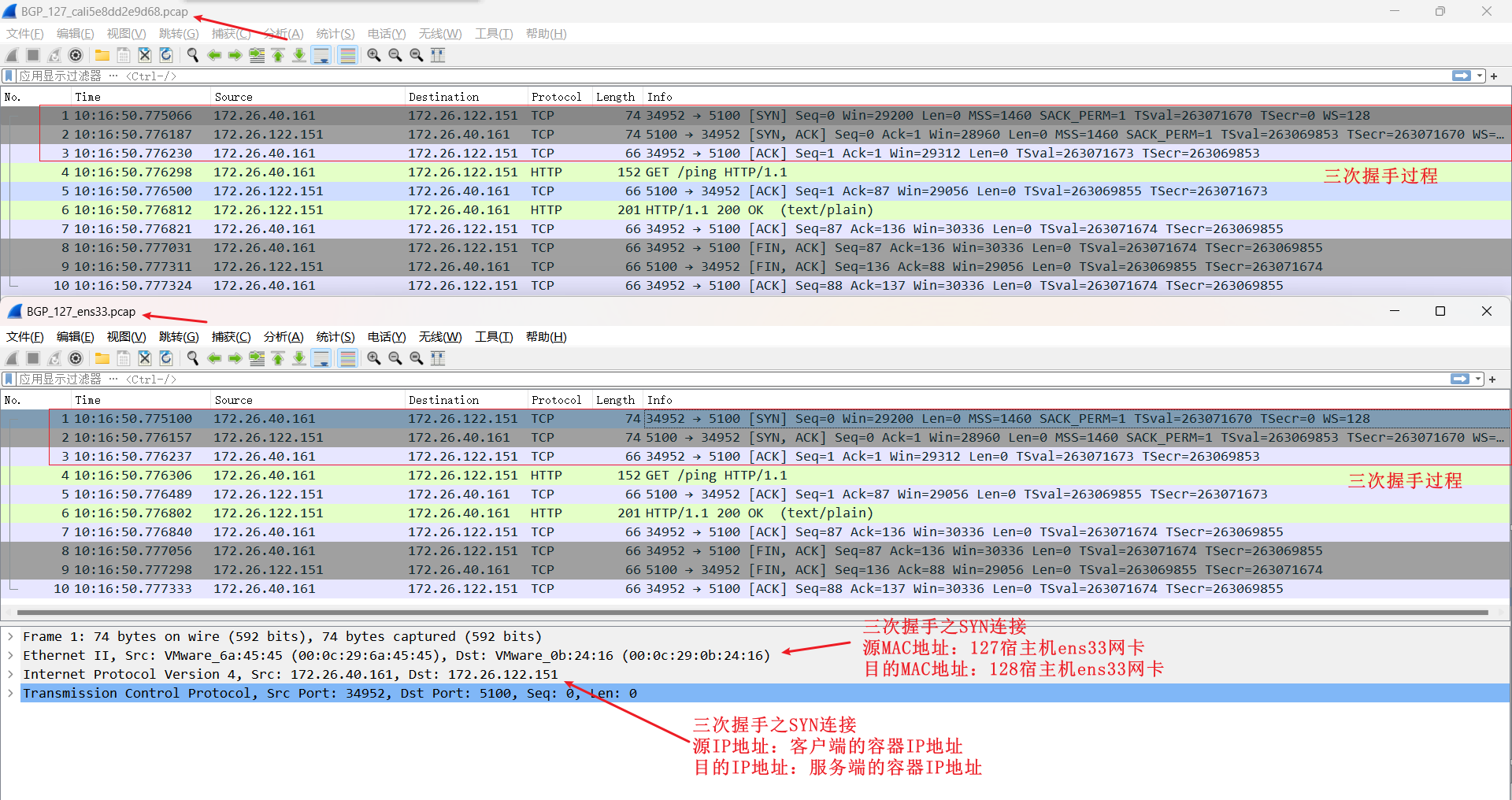

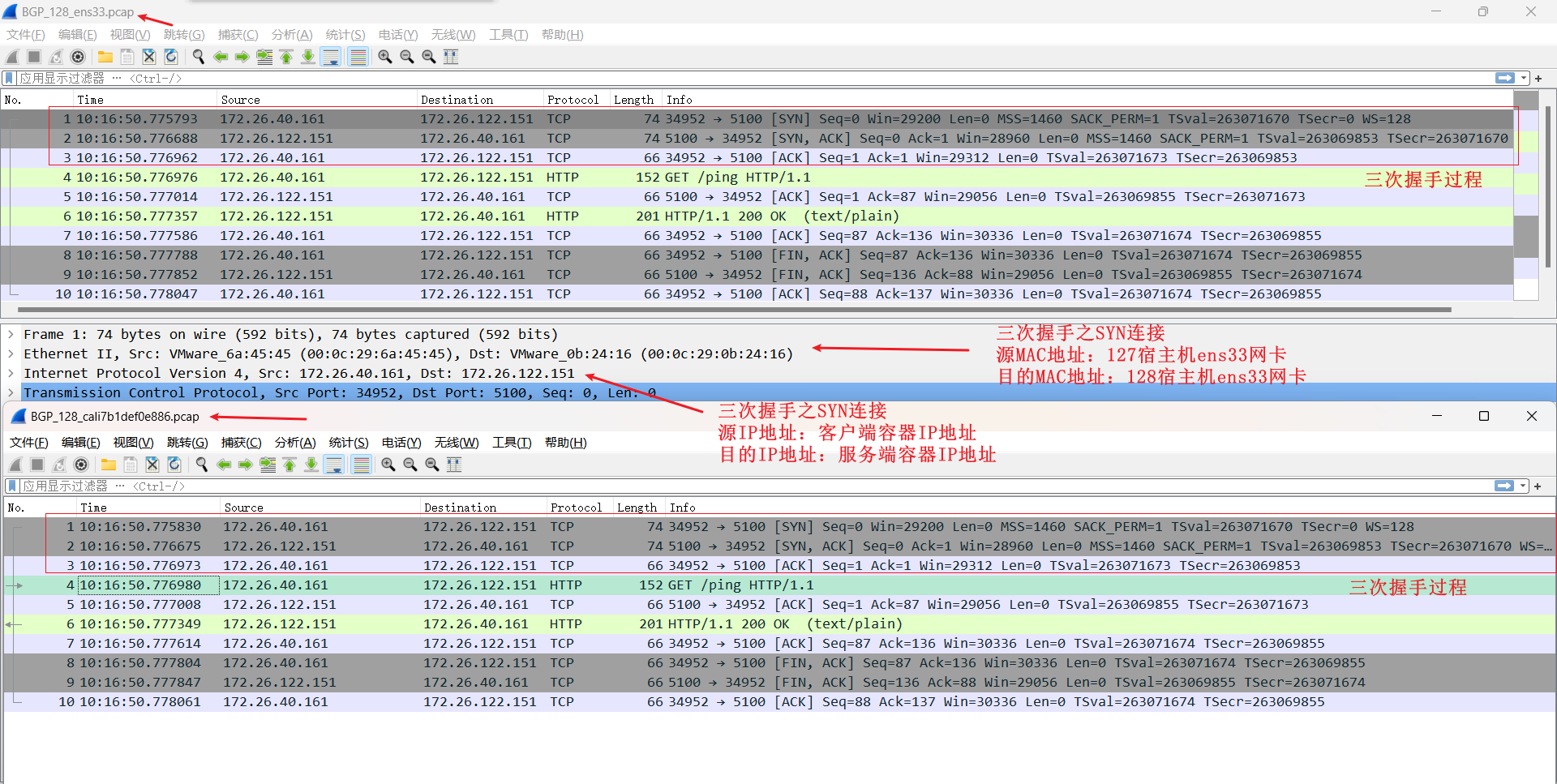

## BGP

Packet flow diagram from `fileserver-595ccd77dd-hh8c7` to `fileserver-595ccd77dd-k9bzv`, two pods on different nodes.

Verify with packet captures on both hosts:

```shell
# Capture on host 192.168.32.127
tcpdump -i ens33 -penn host 172.26.122.151
tcpdump -i cali5e8dd2e9d68 -penn

# Capture on host 192.168.32.128
tcpdump -i ens33 -penn host 172.26.40.161
tcpdump -i cali7b1def0e886 -penn
```

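# Summary

- Same node: the communication path is identical in IPIP mode and BGP mode. The host looks up its routing table and forwards the traffic.

- Cross node

  - IPIP mode: packets pass through the `tunl0` interface, which encapsulates them in an outer header carrying the host IPs. A capture on the host NIC shows two **network-layer** headers (outer: source host to destination host; inner: source container to destination container). The **link-layer** addresses are the MAC addresses of the source and destination hosts.

  - BGP mode (no encapsulation): packets do not pass through `tunl0`. A capture on the host NIC shows a single **network-layer** header carrying the client container IP and the server container IP. The **link-layer** addresses are the MAC addresses of the source and destination hosts.

  - From the capture's point of view, only the network layer differs: IPIP adds an extra host-to-host IP header, which BGP mode does not have.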
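One quick way to tell the two cross-node modes apart without a capture is to check which device the host routes a remote pod IP through. A sketch under assumptions: `route_dev` is a hypothetical helper that reads one line of `ip route get` output on stdin and prints the egress device.

```shell
# route_dev
# Reads `ip route get <ip>` output on stdin and prints the token that
# follows "dev", i.e. the egress interface chosen by the routing table.
route_dev() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Intended use on node 192.168.32.127, asking about the pod on the other node:
#   ip route get 172.26.122.151 | route_dev
# In IPIP mode this is expected to name tunl0; in BGP mode, the physical NIC
# (ens33 in this environment).
```

This matches the capture results: traffic leaves through `tunl0` only when the extra IP header is added.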
