## 1. Download the software

[https://www.elastic.co/cn/downloads/logstash](https://www.elastic.co/cn/downloads/logstash)

## 2. Installation and deployment

### 2.1. Prepare the patterns file

In the Logstash installation directory, create a `patterns` directory:

~~~
mkdir patterns
~~~

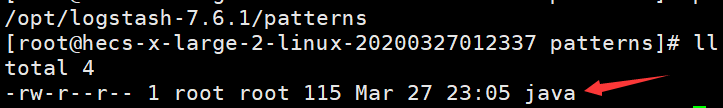

In the `patterns` directory, create a patterns file named `java` with the following content:

~~~
# Application name, e.g. user-center
MYAPPNAME [0-9a-zA-Z_-]*

# Thread name, e.g. RMI TCP Connection(2)-127.0.0.1
MYTHREADNAME ([0-9a-zA-Z._-]|\(|\)|\s)*
~~~

> The file is named exactly **java**, with no file extension.
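Before wiring the two custom patterns into grok, it can help to try them against the sample values from the comments. The following is a minimal Python sketch. It assumes that Python's `re` module and the regex engine used by grok behave the same for these simple character classes, and the helper name `fully_matches` is made up for illustration.

```python
import re

# The two custom patterns, copied from the `java` patterns file.
MYAPPNAME = r"[0-9a-zA-Z_-]*"
MYTHREADNAME = r"([0-9a-zA-Z._-]|\(|\)|\s)*"


def fully_matches(pattern: str, text: str) -> bool:
    """Return True when the pattern matches the entire string."""
    return re.fullmatch(pattern, text) is not None


# Sample values taken from the comments in the patterns file.
print(fully_matches(MYAPPNAME, "user-center"))
print(fully_matches(MYTHREADNAME, "RMI TCP Connection(2)-127.0.0.1"))
# A space is not part of MYAPPNAME, so this value is rejected.
print(fully_matches(MYAPPNAME, "user center"))
```

Note that grok does not anchor a pattern to the whole string the way `re.fullmatch` does; the surrounding literals in the full `match` expression provide the boundaries.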

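### 2.2. Create the configuration file

In Logstash's `config` directory, create a `logstash.conf` file.

> Adjust the following for your environment:
>
> 1. The `patterns_dir` path in the `filter` block
> 2. The password in the `output` block

**vim logstash.conf**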

~~~
input {
  beats {
    port => 5044
  }
}

filter {
  if [type] == "syslog" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
      add_field => [ "received_at", "%{@timestamp}" ]
      add_field => [ "received_from", "%{host}" ]
    }
    syslog_pri { }
    date {
      match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }

  if [fields][docType] == "sys-log" {
    grok {
      patterns_dir => ["/opt/logstash-7.6.1/patterns"]
      match => { "message" => "\[%{NOTSPACE:appName}:%{IP:serverIp}:%{NOTSPACE:serverPort}\] %{TIMESTAMP_ISO8601:logTime} %{LOGLEVEL:logLevel} %{WORD:pid} \[%{MYAPPNAME:traceId},%{MYAPPNAME:spanId},%{MYAPPNAME:parentId}\] \[%{MYTHREADNAME:threadName}\] %{NOTSPACE:classname} %{GREEDYDATA:message}" }
      overwrite => ["message"]
    }
    date {
      match => ["logTime","yyyy-MM-dd HH:mm:ss.SSS Z"]
    }
    date {
      match => ["logTime","yyyy-MM-dd HH:mm:ss.SSS"]
      target => "timestamp"
      locale => "en"
      timezone => "+08:00"
    }
    mutate {
      remove_field => "logTime"
      remove_field => "@version"
      remove_field => "host"
      remove_field => "offset"
    }
  }

  if [fields][docType] == "point-log" {
    grok {
      patterns_dir => ["/opt/logstash-7.6.1/patterns"]
      match => {
        "message" => "%{TIMESTAMP_ISO8601:logTime}\|%{MYAPPNAME:appName}\|%{WORD:resouceid}\|%{MYAPPNAME:type}\|%{GREEDYDATA:object}"
      }
    }
    kv {
      source => "object"
      field_split => "&"
      value_split => "="
    }
    date {
      match => ["logTime","yyyy-MM-dd HH:mm:ss.SSS Z"]
    }
    date {
      match => ["logTime","yyyy-MM-dd HH:mm:ss.SSS"]
      target => "timestamp"
      locale => "en"
      timezone => "+08:00"
    }
    mutate {
      remove_field => "message"
      remove_field => "logTime"
      remove_field => "@version"
      remove_field => "host"
      remove_field => "offset"
    }
  }

  if [fields][docType] == "mysqlslowlogs" {
    grok {
      match => [
        "message", "^#\s+User@Host:\s+%{USER:user}\[[^\]]+\]\s+@\s+(?:(?<clienthost>\S*) )?\[(?:%{IP:clientip})?\]\s+Id:\s+%{NUMBER:id}\n# Query_time: %{NUMBER:query_time}\s+Lock_time: %{NUMBER:lock_time}\s+Rows_sent: %{NUMBER:rows_sent}\s+Rows_examined: %{NUMBER:rows_examined}\nuse\s(?<dbname>\w+);\nSET\s+timestamp=%{NUMBER:timestamp_mysql};\n(?<query_str>[\s\S]*)",
        "message", "^#\s+User@Host:\s+%{USER:user}\[[^\]]+\]\s+@\s+(?:(?<clienthost>\S*) )?\[(?:%{IP:clientip})?\]\s+Id:\s+%{NUMBER:id}\n# Query_time: %{NUMBER:query_time}\s+Lock_time: %{NUMBER:lock_time}\s+Rows_sent: %{NUMBER:rows_sent}\s+Rows_examined: %{NUMBER:rows_examined}\nSET\s+timestamp=%{NUMBER:timestamp_mysql};\n(?<query_str>[\s\S]*)",
        "message", "^#\s+User@Host:\s+%{USER:user}\[[^\]]+\]\s+@\s+(?:(?<clienthost>\S*) )?\[(?:%{IP:clientip})?\]\n# Query_time: %{NUMBER:query_time}\s+Lock_time: %{NUMBER:lock_time}\s+Rows_sent: %{NUMBER:rows_sent}\s+Rows_examined: %{NUMBER:rows_examined}\nuse\s(?<dbname>\w+);\nSET\s+timestamp=%{NUMBER:timestamp_mysql};\n(?<query_str>[\s\S]*)",
        "message", "^#\s+User@Host:\s+%{USER:user}\[[^\]]+\]\s+@\s+(?:(?<clienthost>\S*) )?\[(?:%{IP:clientip})?\]\n# Query_time: %{NUMBER:query_time}\s+Lock_time: %{NUMBER:lock_time}\s+Rows_sent: %{NUMBER:rows_sent}\s+Rows_examined: %{NUMBER:rows_examined}\nSET\s+timestamp=%{NUMBER:timestamp_mysql};\n(?<query_str>[\s\S]*)"
      ]
    }
    date {
      match => ["timestamp_mysql","yyyy-MM-dd HH:mm:ss.SSS","UNIX"]
    }
    date {
      match => ["timestamp_mysql","yyyy-MM-dd HH:mm:ss.SSS","UNIX"]
      target => "timestamp"
    }
    mutate {
      convert => ["query_time", "float"]
      convert => ["lock_time", "float"]
      convert => ["rows_sent", "integer"]
      convert => ["rows_examined", "integer"]
      remove_field => "message"
      remove_field => "timestamp_mysql"
      remove_field => "@version"
    }
  }
}

output {
  if [fields][docType] == "sys-log" {
    elasticsearch {
      hosts => ["http://localhost:9200"]
      user => "elastic"
      password => "qEnNfKNujqNrOPD9q5kb"
      index => "sys-log-%{+YYYY.MM.dd}"
    }
  }

  if [fields][docType] == "point-log" {
    elasticsearch {
      hosts => ["http://localhost:9200"]
      user => "elastic"
      password => "qEnNfKNujqNrOPD9q5kb"
      index => "point-log-%{+YYYY.MM.dd}"
      routing => "%{type}"
    }
  }

  if [fields][docType] == "mysqlslowlogs" {
    elasticsearch {
      hosts => ["http://localhost:9200"]
      user => "elastic"
      password => "qEnNfKNujqNrOPD9q5kb"
      index => "mysql-slowlog-%{+YYYY.MM.dd}"
    }
  }
}
~~~
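To make the `point-log` branch of the configuration easier to follow, here is a rough Python sketch of what its grok, kv and date filters do to one line. It is an approximation and not how Logstash works internally. The sample line, the function name `parse_point_log`, and the keys `userId` and `ip` are hypothetical; only the pipe-separated layout, the `&`/`=` separators and the `+08:00` timezone come from the configuration above.

```python
from datetime import datetime, timedelta, timezone


def parse_point_log(line: str) -> dict:
    """Approximate the grok + kv + date steps of the point-log branch."""
    # grok: five pipe-separated parts, the last one taken greedily.
    log_time, app_name, resource_id, log_type, obj = line.split("|", 4)
    event = {
        "appName": app_name,
        "resouceid": resource_id,  # field name spelled as in the config
        "type": log_type,
        "object": obj,
    }
    # kv: split `object` on "&", then each pair on the first "=".
    for pair in obj.split("&"):
        key, sep, value = pair.partition("=")
        if sep and key:
            event[key] = value
    # date: read logTime as +08:00 local time and store it in UTC.
    local = datetime.strptime(log_time, "%Y-%m-%d %H:%M:%S.%f")
    local = local.replace(tzinfo=timezone(timedelta(hours=8)))
    event["timestamp"] = local.astimezone(timezone.utc)
    return event


# Hypothetical sample line in the point-log layout.
sample = "2020-03-20 10:15:30.123|user-center|1001|login|userId=42&ip=10.0.0.8"
event = parse_point_log(sample)
print(event["appName"], event["type"], event["userId"], event["ip"])
print(event["timestamp"].isoformat())
```

Like the `mutate` step in the configuration, the sketch does not keep `logTime` in the resulting event.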

### 2.3. Modify the Logstash settings

Edit `config/logstash.yml` and set `http.host` as follows:

~~~
http.host: 0.0.0.0
~~~

### 2.4. Run in the background

~~~
nohup bin/logstash -f config/logstash.conf &
~~~
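Once the process has been started, one simple way to see whether the Beats input is accepting connections is to try a TCP connection to port 5044, the port set in `logstash.conf`. The sketch below is a minimal Python check. It assumes it runs on the same machine as Logstash, and the helper name `is_port_open` is made up for illustration. Logstash can take a while to start, so an early `False` does not necessarily indicate a problem.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # 5044 is the Beats input port from logstash.conf; 127.0.0.1 is an assumption.
    print(is_port_open("127.0.0.1", 5044))
```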
