# 使用 TensorFlow 的简单的 GAN

您可以按照 Jupyter 笔记本中的代码`ch-14a_SimpleGAN`。

为了使用 TensorFlow 构建 GAN,我们使用以下步骤构建三个网络,两个判别器模型和一个生成器模型:

1. 首先添加用于定义网络的超参数:

```py

# graph hyperparameters

g_learning_rate = 0.00001

d_learning_rate = 0.01

n_x = 784 # number of pixels in the MNIST image

# number of hidden layers for generator and discriminator

g_n_layers = 3

d_n_layers = 1

# neurons in each hidden layer

g_n_neurons = [256, 512, 1024]

d_n_neurons = [256]

# define parameter ditionary

d_params = {}

g_params = {}

activation = tf.nn.leaky_relu

w_initializer = tf.glorot_uniform_initializer

b_initializer = tf.zeros_initializer

```

1. 接下来,定义生成器网络:

```py

z_p = tf.placeholder(dtype=tf.float32, name='z_p',

shape=[None, n_z])

layer = z_p

# add generator network weights, biases and layers

with tf.variable_scope('g'):

for i in range(0, g_n_layers): w_name = 'w_{0:04d}'.format(i)

g_params[w_name] = tf.get_variable(

name=w_name,

shape=[n_z if i == 0 else g_n_neurons[i - 1],

g_n_neurons[i]],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

g_params[b_name] = tf.get_variable(

name=b_name, shape=[g_n_neurons[i]],

initializer=b_initializer())

layer = activation(

tf.matmul(layer, g_params[w_name]) + g_params[b_name])

# output (logit) layer

i = g_n_layers

w_name = 'w_{0:04d}'.format(i)

g_params[w_name] = tf.get_variable(

name=w_name,

shape=[g_n_neurons[i - 1], n_x],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

g_params[b_name] = tf.get_variable(

name=b_name, shape=[n_x], initializer=b_initializer())

g_logit = tf.matmul(layer, g_params[w_name]) + g_params[b_name]

g_model = tf.nn.tanh(g_logit)

```

1. 接下来,定义我们将构建的两个判别器网络的权重和偏差:

```py

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

d_params[w_name] = tf.get_variable(

name=w_name,

shape=[n_x if i == 0 else d_n_neurons[i - 1],

d_n_neurons[i]],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

d_params[b_name] = tf.get_variable(

name=b_name, shape=[d_n_neurons[i]],

initializer=b_initializer())

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

d_params[w_name] = tf.get_variable(

name=w_name, shape=[d_n_neurons[i - 1], 1],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

d_params[b_name] = tf.get_variable(

name=b_name, shape=[1], initializer=b_initializer())

```

1. 现在使用这些参数,构建将真实图像作为输入并输出分类的判别器:

```py

# define discriminator_real

# input real images

x_p = tf.placeholder(dtype=tf.float32, name='x_p',

shape=[None, n_x])

layer = x_p

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

layer = activation(

tf.matmul(layer, d_params[w_name]) + d_params[b_name])

layer = tf.nn.dropout(layer,0.7)

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

d_logit_real = tf.matmul(layer,

d_params[w_name]) + d_params[b_name]

d_model_real = tf.nn.sigmoid(d_logit_real)

```

1. 接下来,使用相同的参数构建另一个判别器网络,但提供生成器的输出作为输入:

```py

# define discriminator_fake

# input generated fake images

z = g_model

layer = z

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

layer = activation(

tf.matmul(layer, d_params[w_name]) + d_params[b_name])

layer = tf.nn.dropout(layer,0.7)

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

d_logit_fake = tf.matmul(layer,

d_params[w_name]) + d_params[b_name]

d_model_fake = tf.nn.sigmoid(d_logit_fake)

```

1. 现在我们已经建立了三个网络,它们之间的连接是使用损失,优化器和训练函数完成的。在训练生成器时,我们只训练生成器的参数,在训练判别器时,我们只训练判别器的参数。我们使用`var_list`参数将此指定给优化器的`minimize()`函数。以下是为两种网络定义损失,优化器和训练函数的完整代码:

```py

g_loss = -tf.reduce_mean(tf.log(d_model_fake))

d_loss = -tf.reduce_mean(tf.log(d_model_real) + tf.log(1 - d_model_fake))

g_optimizer = tf.train.AdamOptimizer(g_learning_rate)

d_optimizer = tf.train.GradientDescentOptimizer(d_learning_rate)

g_train_op = g_optimizer.minimize(g_loss,

var_list=list(g_params.values()))

d_train_op = d_optimizer.minimize(d_loss,

var_list=list(d_params.values()))

```

1. 现在我们已经定义了模型,我们必须训练模型。训练按照以下算法完成:

```py

For each epoch:

For each batch: get real images x_batch

generate noise z_batch

train discriminator using z_batch and x_batch

generate noise z_batch

train generator using z_batch

```

笔记本电脑的完整训练代码如下:

```py

n_epochs = 400

batch_size = 100

n_batches = int(mnist.train.num_examples / batch_size)

n_epochs_print = 50

with tf.Session() as tfs:

tfs.run(tf.global_variables_initializer())

for epoch in range(n_epochs):

epoch_d_loss = 0.0

epoch_g_loss = 0.0

for batch in range(n_batches):

x_batch, _ = mnist.train.next_batch(batch_size)

x_batch = norm(x_batch)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

feed_dict = {x_p: x_batch,z_p: z_batch}

_,batch_d_loss = tfs.run([d_train_op,d_loss],

feed_dict=feed_dict)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

feed_dict={z_p: z_batch}

_,batch_g_loss = tfs.run([g_train_op,g_loss],

feed_dict=feed_dict)

epoch_d_loss += batch_d_loss

epoch_g_loss += batch_g_loss

if epoch%n_epochs_print == 0:

average_d_loss = epoch_d_loss / n_batches

average_g_loss = epoch_g_loss / n_batches

print('epoch: {0:04d} d_loss = {1:0.6f} g_loss = {2:0.6f}'

.format(epoch,average_d_loss,average_g_loss))

# predict images using generator model trained

x_pred = tfs.run(g_model,feed_dict={z_p:z_test})

display_images(x_pred.reshape(-1,pixel_size,pixel_size))

```

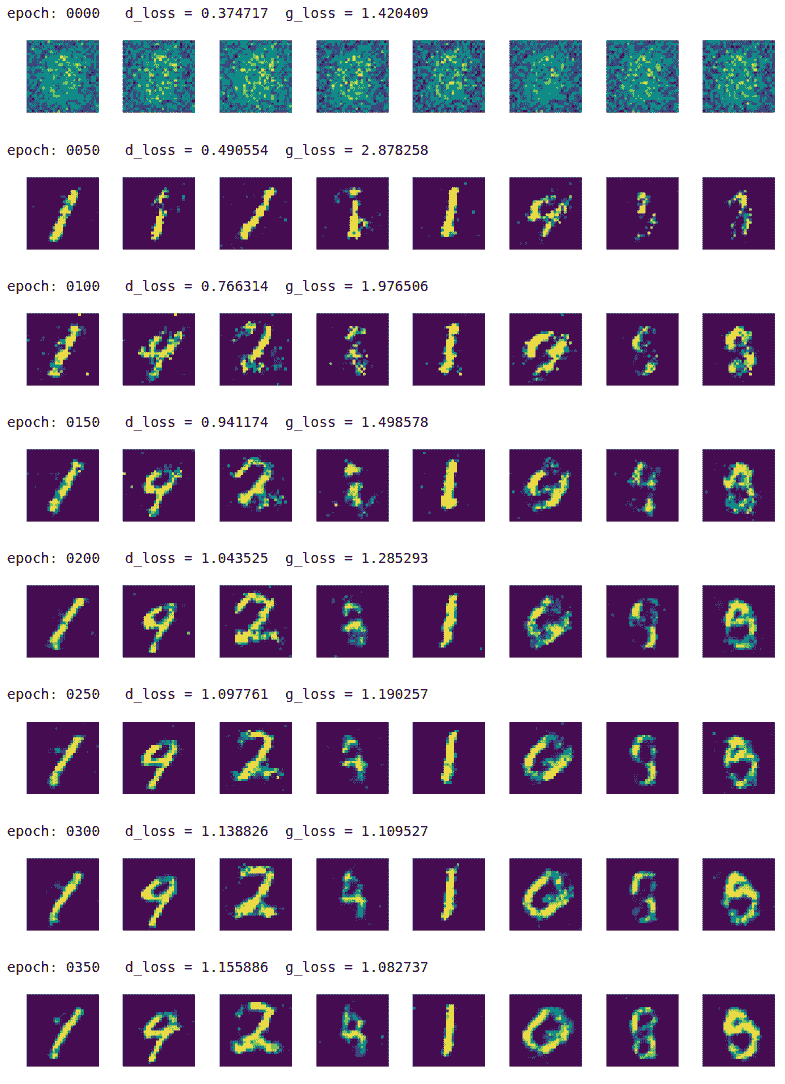

我们每 50 个周期印刷生成的图像:

正如我们所看到的那样,生成器在周期 0 中只产生噪声,但是在周期 350 中,它经过训练可以产生更好的手写数字形状。您可以尝试使用周期,正则化,网络架构和其他超参数进行试验,看看是否可以产生更快更好的结果。

- TensorFlow 101

- 什么是 TensorFlow?

- TensorFlow 核心

- 代码预热 - Hello TensorFlow

- 张量

- 常量

- 操作

- 占位符

- 从 Python 对象创建张量

- 变量

- 从库函数生成的张量

- 使用相同的值填充张量元素

- 用序列填充张量元素

- 使用随机分布填充张量元素

- 使用tf.get_variable()获取变量

- 数据流图或计算图

- 执行顺序和延迟加载

- 跨计算设备执行图 - CPU 和 GPU

- 将图节点放置在特定的计算设备上

- 简单放置

- 动态展示位置

- 软放置

- GPU 内存处理

- 多个图

- TensorBoard

- TensorBoard 最小的例子

- TensorBoard 详情

- 总结

- TensorFlow 的高级库

- TF Estimator - 以前的 TF 学习

- TF Slim

- TFLearn

- 创建 TFLearn 层

- TFLearn 核心层

- TFLearn 卷积层

- TFLearn 循环层

- TFLearn 正则化层

- TFLearn 嵌入层

- TFLearn 合并层

- TFLearn 估计层

- 创建 TFLearn 模型

- TFLearn 模型的类型

- 训练 TFLearn 模型

- 使用 TFLearn 模型

- PrettyTensor

- Sonnet

- 总结

- Keras 101

- 安装 Keras

- Keras 中的神经网络模型

- 在 Keras 建立模型的工作流程

- 创建 Keras 模型

- 用于创建 Keras 模型的顺序 API

- 用于创建 Keras 模型的函数式 API

- Keras 层

- Keras 核心层

- Keras 卷积层

- Keras 池化层

- Keras 本地连接层

- Keras 循环层

- Keras 嵌入层

- Keras 合并层

- Keras 高级激活层

- Keras 正则化层

- Keras 噪音层

- 将层添加到 Keras 模型

- 用于将层添加到 Keras 模型的顺序 API

- 用于向 Keras 模型添加层的函数式 API

- 编译 Keras 模型

- 训练 Keras 模型

- 使用 Keras 模型进行预测

- Keras 的附加模块

- MNIST 数据集的 Keras 序列模型示例

- 总结

- 使用 TensorFlow 进行经典机器学习

- 简单的线性回归

- 数据准备

- 构建一个简单的回归模型

- 定义输入,参数和其他变量

- 定义模型

- 定义损失函数

- 定义优化器函数

- 训练模型

- 使用训练的模型进行预测

- 多元回归

- 正则化回归

- 套索正则化

- 岭正则化

- ElasticNet 正则化

- 使用逻辑回归进行分类

- 二分类的逻辑回归

- 多类分类的逻辑回归

- 二分类

- 多类分类

- 总结

- 使用 TensorFlow 和 Keras 的神经网络和 MLP

- 感知机

- 多层感知机

- 用于图像分类的 MLP

- 用于 MNIST 分类的基于 TensorFlow 的 MLP

- 用于 MNIST 分类的基于 Keras 的 MLP

- 用于 MNIST 分类的基于 TFLearn 的 MLP

- 使用 TensorFlow,Keras 和 TFLearn 的 MLP 总结

- 用于时间序列回归的 MLP

- 总结

- 使用 TensorFlow 和 Keras 的 RNN

- 简单循环神经网络

- RNN 变种

- LSTM 网络

- GRU 网络

- TensorFlow RNN

- TensorFlow RNN 单元类

- TensorFlow RNN 模型构建类

- TensorFlow RNN 单元包装器类

- 适用于 RNN 的 Keras

- RNN 的应用领域

- 用于 MNIST 数据的 Keras 中的 RNN

- 总结

- 使用 TensorFlow 和 Keras 的时间序列数据的 RNN

- 航空公司乘客数据集

- 加载 airpass 数据集

- 可视化 airpass 数据集

- 使用 TensorFlow RNN 模型预处理数据集

- TensorFlow 中的简单 RNN

- TensorFlow 中的 LSTM

- TensorFlow 中的 GRU

- 使用 Keras RNN 模型预处理数据集

- 使用 Keras 的简单 RNN

- 使用 Keras 的 LSTM

- 使用 Keras 的 GRU

- 总结

- 使用 TensorFlow 和 Keras 的文本数据的 RNN

- 词向量表示

- 为 word2vec 模型准备数据

- 加载和准备 PTB 数据集

- 加载和准备 text8 数据集

- 准备小验证集

- 使用 TensorFlow 的 skip-gram 模型

- 使用 t-SNE 可视化单词嵌入

- keras 的 skip-gram 模型

- 使用 TensorFlow 和 Keras 中的 RNN 模型生成文本

- TensorFlow 中的 LSTM 文本生成

- Keras 中的 LSTM 文本生成

- 总结

- 使用 TensorFlow 和 Keras 的 CNN

- 理解卷积

- 了解池化

- CNN 架构模式 - LeNet

- 用于 MNIST 数据的 LeNet

- 使用 TensorFlow 的用于 MNIST 的 LeNet CNN

- 使用 Keras 的用于 MNIST 的 LeNet CNN

- 用于 CIFAR10 数据的 LeNet

- 使用 TensorFlow 的用于 CIFAR10 的 ConvNets

- 使用 Keras 的用于 CIFAR10 的 ConvNets

- 总结

- 使用 TensorFlow 和 Keras 的自编码器

- 自编码器类型

- TensorFlow 中的栈式自编码器

- Keras 中的栈式自编码器

- TensorFlow 中的去噪自编码器

- Keras 中的去噪自编码器

- TensorFlow 中的变分自编码器

- Keras 中的变分自编码器

- 总结

- TF 服务:生产中的 TensorFlow 模型

- 在 TensorFlow 中保存和恢复模型

- 使用保护程序类保存和恢复所有图变量

- 使用保护程序类保存和恢复所选变量

- 保存和恢复 Keras 模型

- TensorFlow 服务

- 安装 TF 服务

- 保存 TF 服务的模型

- 提供 TF 服务模型

- 在 Docker 容器中提供 TF 服务

- 安装 Docker

- 为 TF 服务构建 Docker 镜像

- 在 Docker 容器中提供模型

- Kubernetes 中的 TensorFlow 服务

- 安装 Kubernetes

- 将 Docker 镜像上传到 dockerhub

- 在 Kubernetes 部署

- 总结

- 迁移学习和预训练模型

- ImageNet 数据集

- 再训练或微调模型

- COCO 动物数据集和预处理图像

- TensorFlow 中的 VGG16

- 使用 TensorFlow 中预训练的 VGG16 进行图像分类

- TensorFlow 中的图像预处理,用于预训练的 VGG16

- 使用 TensorFlow 中的再训练的 VGG16 进行图像分类

- Keras 的 VGG16

- 使用 Keras 中预训练的 VGG16 进行图像分类

- 使用 Keras 中再训练的 VGG16 进行图像分类

- TensorFlow 中的 Inception v3

- 使用 TensorFlow 中的 Inception v3 进行图像分类

- 使用 TensorFlow 中的再训练的 Inception v3 进行图像分类

- 总结

- 深度强化学习

- OpenAI Gym 101

- 将简单的策略应用于 cartpole 游戏

- 强化学习 101

- Q 函数(在模型不可用时学习优化)

- RL 算法的探索与开发

- V 函数(模型可用时学习优化)

- 强化学习技巧

- 强化学习的朴素神经网络策略

- 实现 Q-Learning

- Q-Learning 的初始化和离散化

- 使用 Q-Table 进行 Q-Learning

- Q-Network 或深 Q 网络(DQN)的 Q-Learning

- 总结

- 生成性对抗网络

- 生成性对抗网络 101

- 建立和训练 GAN 的最佳实践

- 使用 TensorFlow 的简单的 GAN

- 使用 Keras 的简单的 GAN

- 使用 TensorFlow 和 Keras 的深度卷积 GAN

- 总结

- 使用 TensorFlow 集群的分布式模型

- 分布式执行策略

- TensorFlow 集群

- 定义集群规范

- 创建服务器实例

- 定义服务器和设备之间的参数和操作

- 定义并训练图以进行异步更新

- 定义并训练图以进行同步更新

- 总结

- 移动和嵌入式平台上的 TensorFlow 模型

- 移动平台上的 TensorFlow

- Android 应用中的 TF Mobile

- Android 上的 TF Mobile 演示

- iOS 应用中的 TF Mobile

- iOS 上的 TF Mobile 演示

- TensorFlow Lite

- Android 上的 TF Lite 演示

- iOS 上的 TF Lite 演示

- 总结

- R 中的 TensorFlow 和 Keras

- 在 R 中安装 TensorFlow 和 Keras 软件包

- R 中的 TF 核心 API

- R 中的 TF 估计器 API

- R 中的 Keras API

- R 中的 TensorBoard

- R 中的 tfruns 包

- 总结

- 调试 TensorFlow 模型

- 使用tf.Session.run()获取张量值

- 使用tf.Print()打印张量值

- 用tf.Assert()断言条件

- 使用 TensorFlow 调试器(tfdbg)进行调试

- 总结

- 张量处理单元